Practical Solutions for LLM Routing

Introduction

Large Language Models (LLMs) offer impressive capabilities, but they vary widely in cost and quality. Deploying them in real-world applications therefore means balancing cost against performance. Researchers from UC Berkeley, Anyscale, and Canva have introduced RouteLLM, an open-source framework that addresses this trade-off.

Challenges in LLM Routing

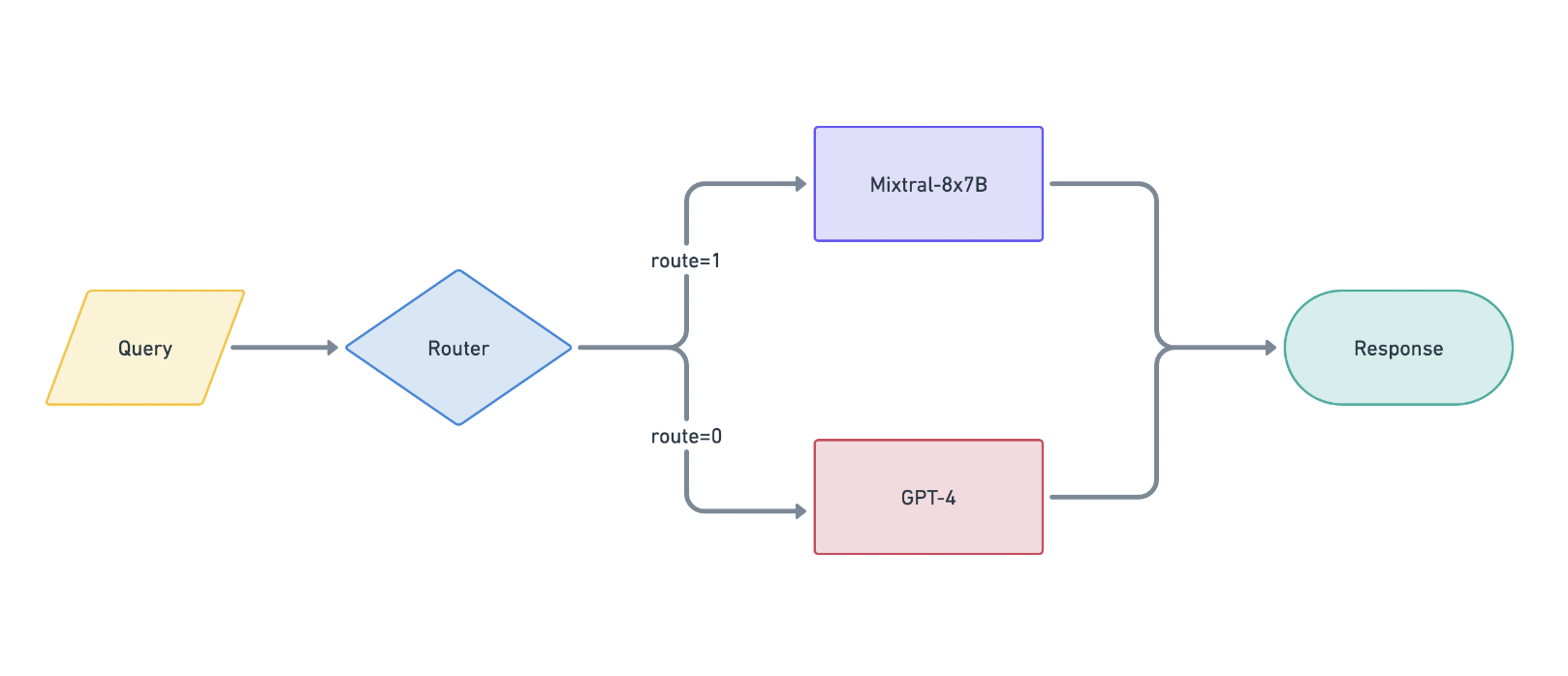

Routing queries to the most capable models ensures high-quality responses but is expensive, while directing queries to smaller models saves costs at the expense of response quality. RouteLLM aims to balance price and performance by determining which model should handle each query to minimize costs while maintaining response quality.
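The per-query decision described above can be sketched as a simple threshold rule. This is a minimal illustration, not RouteLLM's actual API: the `ThresholdRouter` class, the `toy_score` heuristic, and the model names are all hypothetical, and a trained router would replace the toy scoring function with a learned estimate of how much a query needs the stronger model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ThresholdRouter:
    """Hypothetical router: picks a model by comparing a score to a threshold."""

    # score_fn is an assumption: it returns a value in [0, 1] estimating
    # how likely the query is to need the strong model.
    score_fn: Callable[[str], float]
    threshold: float
    strong_model: str = "strong-model"  # placeholder name
    weak_model: str = "weak-model"      # placeholder name

    def route(self, query: str) -> str:
        # Send the query to the expensive model only when the score
        # reaches the threshold; otherwise use the cheaper model.
        if self.score_fn(query) >= self.threshold:
            return self.strong_model
        return self.weak_model


def toy_score(query: str) -> float:
    # Placeholder heuristic for illustration only: treat longer queries
    # as harder. A real router would use a trained model here.
    return min(len(query.split()) / 20.0, 1.0)


router = ThresholdRouter(score_fn=toy_score, threshold=0.5)

if __name__ == "__main__":
    print(router.route("hi"))
    print(router.route(" ".join(["word"] * 12)))
```

Raising the threshold sends fewer queries to the strong model, which lowers cost and may lower quality; lowering it does the opposite. That single knob is what lets a router trade price against performance.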

Framework and Methodology

RouteLLM formalizes the problem of LLM routing and explores data augmentation techniques to improve router performance. Using public preference data from Chatbot Arena together with novel training methods, it trains four different routers: a similarity-weighted ranking router, a matrix factorization model, a BERT classifier, and a causal LLM classifier.

Performance and Cost Efficiency

The routers significantly reduce costs while preserving most of the quality. For example, the matrix factorization router achieved 95% of GPT-4’s performance while making only 26% of the calls to GPT-4, a 48% cost reduction compared to the random baseline. Augmenting the training data improved the routers further, cutting the share of GPT-4 calls to just 14% at the same performance level.
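To make the arithmetic behind such figures concrete, here is a rough sketch of how the fraction of calls sent to the strong model translates into a relative saving. The function names, the prices, and the baseline fraction are assumptions for illustration; the source does not state how its 48% figure was derived.

```python
def blended_cost(strong_fraction: float, strong_price: float, weak_price: float) -> float:
    """Average cost per query when a fraction of queries goes to the strong model."""
    return strong_fraction * strong_price + (1.0 - strong_fraction) * weak_price


def savings_vs_baseline(
    router_fraction: float,
    baseline_fraction: float,
    strong_price: float,
    weak_price: float,
) -> float:
    """Relative cost reduction of a router compared with a baseline router."""
    router_cost = blended_cost(router_fraction, strong_price, weak_price)
    baseline_cost = blended_cost(baseline_fraction, strong_price, weak_price)
    return 1.0 - router_cost / baseline_cost


if __name__ == "__main__":
    # Assumptions (not from the source): the random baseline sends 50% of
    # queries to the strong model, and the weak model's cost is negligible.
    saving = savings_vs_baseline(
        router_fraction=0.26,
        baseline_fraction=0.50,
        strong_price=1.0,
        weak_price=0.0,
    )
    print(f"{saving:.0%}")
```

Under these assumptions the result is about 48%, which is consistent with the quoted figure. With a non-zero weak-model price the saving shrinks, because the cheaper calls still contribute to the total.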

Comparison with Commercial Offerings

RouteLLM achieved similar performance to commercial routing systems while being over 40% cheaper, demonstrating its cost-effectiveness and competitive edge.

Generalization to Other Models

RouteLLM was tested with different model pairs and maintained strong performance without retraining, indicating its generalizability to new model pairs.

Conclusion

RouteLLM provides a scalable and cost-effective solution for deploying LLMs by balancing cost and performance. The framework’s use of preference data and data augmentation techniques maintains response quality while significantly reducing costs. RouteLLM has been released as open source, along with its datasets and code.