Differentially Private Stochastic Gradient Descent (DP-SGD)

DP-SGD is a widely used method for training machine learning models on sensitive data with formal differential privacy guarantees. It modifies standard stochastic gradient descent in two ways:

- Clipping each per-example gradient to a fixed maximum L2 norm.

- Adding Gaussian noise to the sum of the clipped gradients in each mini-batch.

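This process protects sensitive information during training by limiting how much any single example can influence a model update, and it is widely used in fields like image recognition, natural language processing, and medical imaging. The strength of the privacy guarantee depends on factors such as the noise scale, the batch size relative to the dataset size, and the number of training iterations.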
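The two modifications can be sketched as a single update step. This is a minimal illustration in plain Python, not the code of any particular library; the function names (`clip`, `dp_sgd_step`) and the convention of setting the noise standard deviation to `noise_multiplier * clip_norm` are assumptions that follow the common DP-SGD formulation.

```python
import math
import random
from typing import List

Vector = List[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def clip(grad: Vector, clip_norm: float) -> Vector:
    """Scale grad so its L2 norm is at most clip_norm (unchanged if already smaller)."""
    norm = l2_norm(grad)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params: Vector, per_example_grads: List[Vector], clip_norm: float,
                noise_multiplier: float, lr: float, rng: random.Random) -> Vector:
    """One hypothetical DP-SGD update on a single mini-batch."""
    dim = len(params)
    # 1) Clip every per-example gradient to norm <= clip_norm.
    clipped = [clip(g, clip_norm) for g in per_example_grads]
    # 2) Sum the clipped gradients over the mini-batch.
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # 3) Add Gaussian noise (std = noise_multiplier * clip_norm) to each coordinate of the sum.
    sigma = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4) Average over the batch size and take a plain gradient step.
    batch_size = len(per_example_grads)
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


rng = random.Random(0)
new_params = dp_sgd_step([0.0, 0.0], [[3.0, 4.0], [0.3, 0.4]],
                         clip_norm=1.0, noise_multiplier=1.1, lr=0.1, rng=rng)
print(new_params)
```

With `noise_multiplier=0.0` the step reduces to clipped, non-private SGD, which is useful for checking the clipping logic; an actual privacy guarantee needs a positive noise multiplier and a privacy accountant that matches how the batches were sampled.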

Batch Training with DP-SGD

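In practice, DP-SGD implementations typically shuffle the dataset and split it into fixed-size mini-batches. Privacy analyses, by contrast, usually assume Poisson subsampling, in which each example is included in each batch independently at random, and applying that analysis to shuffled batches can misstate the actual privacy guarantee. Despite this mismatch, shuffle-based batching remains the norm because it is efficient and fits naturally into modern deep-learning pipelines.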
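The difference between the batching schemes is easiest to see in code. The sketch below uses hypothetical helper names, record indices in place of actual examples, and the simplifying assumption that the batch size `b` divides the dataset size `n`. It builds one epoch of batches in three ways: a fixed deterministic order, shuffle-then-split, and Poisson subsampling with inclusion probability `q = b / n`.

```python
import random
from typing import List


def deterministic_batches(n: int, b: int) -> List[List[int]]:
    """Fixed order: records 0..n-1 split into consecutive batches of size b."""
    return [list(range(i, i + b)) for i in range(0, n, b)]


def shuffle_batches(n: int, b: int, rng: random.Random) -> List[List[int]]:
    """Shuffle-based batching: one random permutation, then fixed-size chunks."""
    perm = list(range(n))
    rng.shuffle(perm)
    return [perm[i:i + b] for i in range(0, n, b)]


def poisson_batches(n: int, b: int, rng: random.Random) -> List[List[int]]:
    """Poisson subsampling: each record joins each batch independently with
    probability q = b / n; produces n // b batches whose sizes vary around b."""
    q = b / n
    return [[i for i in range(n) if rng.random() < q] for _ in range(n // b)]


rng = random.Random(42)
print([len(batch) for batch in shuffle_batches(100, 10, rng)])  # always 10s
print([len(batch) for batch in poisson_batches(100, 10, rng)])  # sizes vary
```

Shuffled batches always contain exactly `b` records and each record appears exactly once per epoch, whereas Poisson batches vary in size and a record may appear in several batches or in none. The variable batch size is the main practical obstacle to using Poisson subsampling in fixed-shape training pipelines.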

Research Insights on Batch Sampling

Researchers from Google Research studied the privacy impacts of different batch sampling methods in DP-SGD. Their findings show:

- Shuffling is common but complicates privacy analysis.

- Poisson subsampling has a cleaner, well-established privacy analysis but produces variable-size batches, which makes it harder to scale.

Applying Poisson-based privacy accounting to models trained with shuffled batches can underestimate the true privacy loss, which highlights the importance of matching the analysis to the sampler a DP-SGD implementation actually uses.
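For reference, Poisson-based accounting is commonly set up as follows. This is a sketch of standard background rather than the paper's derivation, and it assumes each record's per-step contribution has L2 norm at most 1, noise scale σ, sampling probability q, and T steps. A single Poisson-subsampled Gaussian step is characterized by the pair of distributions P and Q, and the T-step privacy curve is the hockey-stick divergence between their T-fold products, taken in both directions to cover adding and removing a record:

```latex
P = \mathcal{N}(0, \sigma^2), \qquad
Q = (1 - q)\,\mathcal{N}(0, \sigma^2) + q\,\mathcal{N}(1, \sigma^2)

H_{\alpha}(A \,\|\, B) = \int \max\{0,\; A(x) - \alpha\, B(x)\}\, dx

\delta(\varepsilon) = \max\Big\{ H_{e^{\varepsilon}}\big(Q^{\otimes T} \,\|\, P^{\otimes T}\big),\;
H_{e^{\varepsilon}}\big(P^{\otimes T} \,\|\, Q^{\otimes T}\big) \Big\}
```

Shuffle-based batching does not factor into this product form: each record lands in exactly one batch per epoch, so its memberships across steps are dependent. Reusing these numbers for shuffled batches is the mismatch that can understate the privacy loss.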

Understanding Differential Privacy (DP)

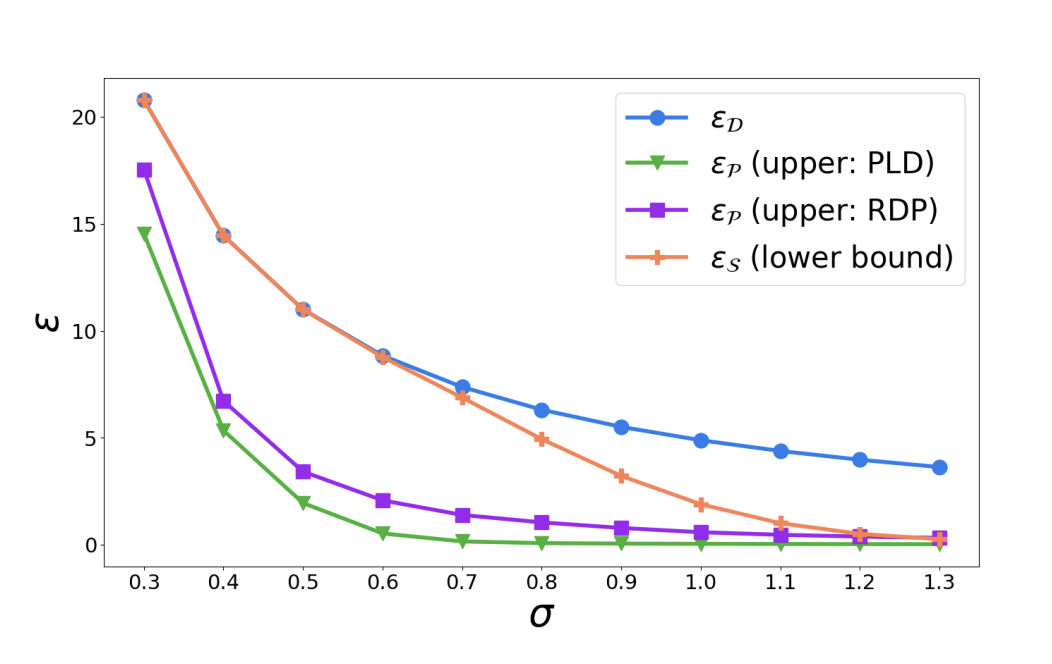

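A DP mechanism protects privacy by ensuring that a change to any single record shifts the distribution of its output only slightly, as quantified by the parameters ε and δ. The study models DP-SGD with the Adaptive Batch Linear Queries (ABLQ) mechanism, which combines a batch sampler with Gaussian noise, and compares three samplers: deterministic batches (ABLQD), shuffling (ABLQS), and Poisson subsampling (ABLQP). The study shows: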

- ABLQS offers better privacy than ABLQD.

- ABLQP provides stronger protection than ABLQS, especially for small ε.
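As a baseline for these comparisons, consider ABLQD. Assuming each record's query output has L2 norm at most 1 and the noise is Gaussian with standard deviation σ, every record appears in exactly one batch per epoch, so one epoch of ABLQD has the same privacy curve as a single Gaussian mechanism with sensitivity 1. The sketch below evaluates the standard closed form for that mechanism, δ(ε) = Φ(1/(2σ) - εσ) - e^ε Φ(-1/(2σ) - εσ), and inverts it by bisection; the function names are hypothetical.

```python
import math


def std_normal_cdf(x: float) -> float:
    # erfc keeps precision in the lower tail better than 1 + erf.
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def gaussian_delta(eps: float, sigma: float, sensitivity: float = 1.0) -> float:
    """Exact delta(eps) of the Gaussian mechanism with the given L2 sensitivity and noise std."""
    mu = sensitivity / sigma
    return std_normal_cdf(mu / 2 - eps / mu) - math.exp(eps) * std_normal_cdf(-mu / 2 - eps / mu)


def epsilon_for_delta(delta: float, sigma: float, sensitivity: float = 1.0,
                      tol: float = 1e-9) -> float:
    """Smallest eps with gaussian_delta(eps) <= delta, found by bisection
    (delta(eps) is strictly decreasing in eps)."""
    if gaussian_delta(0.0, sigma, sensitivity) <= delta:
        return 0.0
    lo, hi = 0.0, 1.0
    # Grow the upper bracket until it satisfies the target delta.
    while gaussian_delta(hi, sigma, sensitivity) > delta:
        hi *= 2.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gaussian_delta(mid, sigma, sensitivity) > delta:
            lo = mid
        else:
            hi = mid
    return hi


for sigma in (0.5, 1.0, 2.0):
    print(sigma, epsilon_for_delta(1e-5, sigma))
```

Larger σ yields a smaller ε at the same δ. The corresponding curves for shuffled or Poisson batches generally have no single closed form and require numerical privacy accounting.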

Conclusion and Future Directions

This research identifies gaps in privacy analysis for adaptive batch linear query mechanisms. Key points include:

- Shuffling improves privacy over deterministic sampling.

- Poisson sampling may offer worse guarantees at large ε.

Future work will focus on improving privacy accounting methods and exploring new techniques for real-world applications.
