The Challenge

LLMs have made significant progress, but they still struggle with long input sequences, which limits their usefulness for tasks such as document summarization, question answering, and machine translation.

The Solution

Introducing HashHop Evaluation Tool

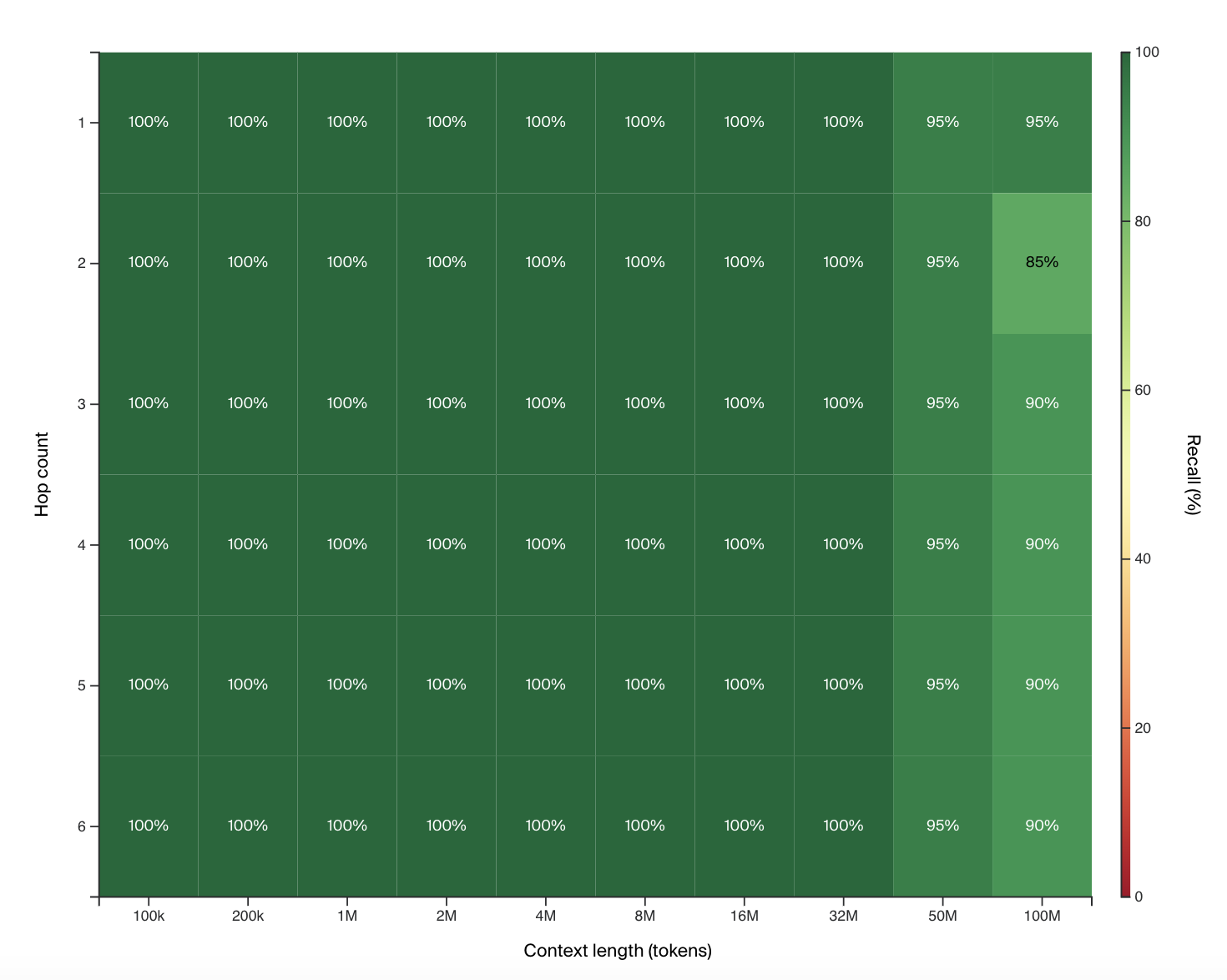

HashHop uses random, incompressible hash pairs to measure a model’s ability to recall and reason across multiple hops without relying on semantic hints. This ensures a more accurate evaluation of a model’s capability to handle extensive context effectively.
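To make the idea concrete, here is a minimal Python sketch of a HashHop-style task. It is an illustration under stated assumptions, not Magic's implementation: the `hash = hash` line format, the 64-bit hash length, and the helper names `random_hash`, `build_task`, and `follow_hops` are all hypothetical. The sketch builds several chains of random hashes, shuffles the resulting pairs into one context, and asks for the hash reached after a fixed number of hops from a given start.

```python
import random


def random_hash(rng: random.Random, bits: int = 64) -> str:
    """Return a random hex string; it carries no semantic hints."""
    width = bits // 4  # number of hex digits needed for `bits` bits
    return f"{rng.getrandbits(bits):0{width}x}"


def build_task(n_chains: int, hops: int, seed: int = 0):
    """Build a shuffled context of hash pairs plus one multi-hop query.

    Assumed format (hypothetical): one "a = b" pair per line.
    Returns (context, start_hash, expected_answer).
    """
    rng = random.Random(seed)
    # Each chain is h0 -> h1 -> ... -> h_hops.
    chains = [
        [random_hash(rng) for _ in range(hops + 1)] for _ in range(n_chains)
    ]
    # Flatten every chain into adjacent (source, target) pairs.
    pairs = [(c[i], c[i + 1]) for c in chains for i in range(hops)]
    # Shuffle so the position of a pair gives no clue about its chain.
    rng.shuffle(pairs)
    context = "\n".join(f"{a} = {b}" for a, b in pairs)
    target = rng.choice(chains)
    return context, target[0], target[-1]


def follow_hops(context: str, start: str, hops: int) -> str:
    """Reference solver: follow `hops` pair lookups starting at `start`."""
    mapping = dict(line.split(" = ") for line in context.splitlines())
    current = start
    for _ in range(hops):
        current = mapping[current]
    return current


if __name__ == "__main__":
    context, start, answer = build_task(n_chains=50, hops=3, seed=1)
    # A model under test would receive `context` and `start`, then be
    # scored on whether its output equals `answer`.
    print(follow_hops(context, start, 3) == answer)
```

Scaling `n_chains` grows the context, and raising `hops` increases the number of lookups the model must chain together. Because the hashes are random, the only way to answer is to retrieve each pair from the context.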

Long-Term Memory (LTM) Model

Magic has developed an LTM model that can handle up to 100 million tokens of context, with better memory and compute efficiency than existing models.

The Value

The LTM-2-mini model, evaluated with HashHop, shows promising results in handling large contexts far more efficiently than traditional models. It operates at a fraction of the cost of other models, which makes it more practical for real-world applications, particularly in software development.

Conclusion

Magic’s LTM-2-mini model, evaluated using the newly proposed HashHop method, offers a reliable and efficient approach to processing extensive context windows and addresses limitations in both current models and current evaluation methods. This makes it a promising option for code synthesis and other applications that require deep contextual understanding.