This article discusses the evolution of Data/ML platforms and their support for complex MLOps practices. It explains how data infrastructures have evolved from simple systems like online services and OLTP/OLAP databases to more sophisticated setups like data lakes and real-time data/ML infrastructures. The challenges and solutions at each stage are described, as well as the importance of MLOps principles for managing ML systems. The article concludes by highlighting emerging trends in the ML landscape, such as serviceless platforms and AI-driven feature engineering.

How Data/ML platforms evolve and support complex MLOps practices

Data/ML platforms have become a popular topic in the tech landscape. In this article, we will explore the evolution of these platforms and how they support complex MLOps practices. We will also discuss the challenges and practical solutions in building and managing ML projects.

Start of the Journey: Online Service + OLTP + OLAP

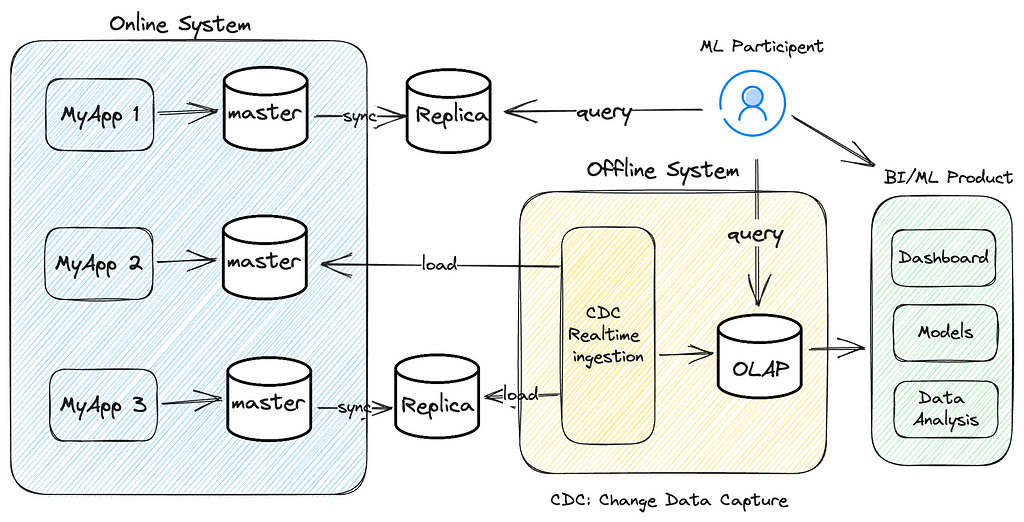

In the beginning, data infrastructures were fairly simple. Analytical queries were sent either to the read replica of an online OLTP database or to a separate OLAP database that served as a data warehouse.

There are multiple ways of doing data analysis at this stage:

- Submitting queries to the OLTP database’s replica node (not recommended)

- Enabling Change Data Capture (CDC) of the OLTP database and ingesting the data to the OLAP database

- Running ML workloads in a local environment, using a Jupyter notebook and loading structured data from the OLAP database
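To make the CDC option above more concrete, here is a minimal sketch of replaying change events onto an analytical copy of a table. It assumes a hypothetical event format (`op`, `id`, `amount`) and uses an in-memory SQLite database as a stand-in for the OLAP store; a real setup would read events from the OLTP database's change log and write to an actual warehouse.

```python
import sqlite3

# Hypothetical CDC events in commit order (the format is an assumption for illustration).
CDC_EVENTS = [
    {"op": "insert", "id": 1, "amount": 10.0},
    {"op": "insert", "id": 2, "amount": 25.0},
    {"op": "update", "id": 1, "amount": 12.5},
    {"op": "delete", "id": 2},
]


def apply_cdc(conn, events):
    """Replay change events onto the analytical copy of the table."""
    for ev in events:
        if ev["op"] in ("insert", "update"):
            # INSERT OR REPLACE acts as an upsert, so replaying an event twice is harmless.
            conn.execute(
                "INSERT OR REPLACE INTO orders (id, amount) VALUES (?, ?)",
                (ev["id"], ev["amount"]),
            )
        elif ev["op"] == "delete":
            conn.execute("DELETE FROM orders WHERE id = ?", (ev["id"],))
        else:
            raise ValueError(f"unknown op: {ev['op']}")
    conn.commit()


def build_warehouse(events):
    """Create the stand-in OLAP table and load it from the change events."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    apply_cdc(conn, events)
    return conn


def total_amount(conn):
    """An example analytical query that never touches the OLTP database."""
    return conn.execute("SELECT COALESCE(SUM(amount), 0) FROM orders").fetchone()[0]
```

Calling `total_amount(build_warehouse(CDC_EVENTS))` runs the aggregate against the replayed copy, which is the point of this setup: analytical load stays off the online database.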

The challenges of this architecture include handling unstructured or semi-structured data, performance degradation when processing large volumes of data, limited support for different compute engines, and high storage costs.

Data Lake: Storage-Compute Separation + Schema on Write

To overcome the challenges of the previous stage, companies often build a data lake. A data lake can store unstructured and semi-structured data, and it reduces costs by keeping data in specialized storage while compute clusters are scaled up only as needed.

Cloud providers offer established storage solutions for data lakes, but the platform team still has to implement additional pieces such as table metadata management and data visibility. Choosing the right file format and file sizes is crucial for the efficiency of the data lake.
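As a rough illustration of why file sizes matter, the sketch below plans a compaction pass that groups many small files into batches close to a target size. The 128 MB target and the function name `plan_compaction` are assumptions for illustration only; the right target depends on the file format and the compute engine in use.

```python
def plan_compaction(file_sizes_mb, target_mb=128):
    """Greedily group files, in order, into batches of at most target_mb each.

    A single file larger than target_mb ends up in a batch of its own.
    """
    batches, current, current_size = [], [], 0
    for size in file_sizes_mb:
        # Close the current batch if adding this file would overshoot the target.
        if current and current_size + size > target_mb:
            batches.append(current)
            current, current_size = [], 0
        current.append(size)
        current_size += size
    if current:
        batches.append(current)
    return batches
```

For example, `plan_compaction([10, 20, 50, 60, 5, 120, 8])` turns seven small files into three batches, each of which could be rewritten as one larger file.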

Realtime Data/ML Infra: Data River + Data Streaming + Feature Store + Metric Server

Building a realtime data infrastructure requires a joint effort from multiple departments. The architecture includes a data river, data streaming pipeline, online feature store, and metric server.

Data streaming pipelines process realtime data, which can be used directly in the online feature store or synced to a metric server for further processing. Flink is a popular choice for building data streaming pipelines.
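The kind of computation such a pipeline performs can be sketched in plain Python. The example below is not Flink code; it only illustrates a tumbling-window count over `(timestamp, key)` events, with the event shape and the 60-second window chosen as assumptions. A real streaming engine additionally handles late or out-of-order events, state, and fault tolerance.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Count events per (window_start, key) over fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_in_seconds, key) pairs.
    """
    counts = defaultdict(int)
    for ts, key in events:
        # Align the timestamp to the start of its window.
        window_start = (ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical click events: (timestamp in seconds, user id).
EVENTS = [(0, "u1"), (30, "u1"), (59, "u2"), (60, "u1"), (125, "u2")]
```

Each resulting count could then be written to the online feature store as a feature such as "clicks in the last window", or forwarded to the metric server.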

The online feature store, backed by a key-value database, helps enrich requests sent to the model serving service with a feature vector. This decouples the dependency between backend service teams and ML engineer teams, allowing for independent updates of ML features and models.
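The enrichment step can be sketched as follows. This is a minimal illustration that uses a Python dict as a stand-in for the key-value database; the class `OnlineFeatureStore`, the function `enrich_request`, and the feature names are all hypothetical.

```python
class OnlineFeatureStore:
    """In-memory stand-in for the key-value database behind the feature store."""

    def __init__(self):
        self._rows = {}

    def put(self, entity_id, features):
        """Write (or overwrite) the feature row for one entity."""
        self._rows[entity_id] = dict(features)

    def get(self, entity_id, feature_names, default=0.0):
        """Return features in the requested order, using a default for missing values."""
        row = self._rows.get(entity_id, {})
        return [row.get(name, default) for name in feature_names]


def enrich_request(request, store, feature_names):
    """Attach a feature vector to a request before it reaches model serving."""
    vector = store.get(request["user_id"], feature_names)
    return {**request, "features": vector}
```

The backend service only sends identifiers such as `user_id`, while the list of feature names lives with the ML side. That is what lets features and models change without a backend release.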

MLOps: Abstraction, Observability and Scalability

MLOps is a set of principles for managing ML systems. It addresses challenges such as data change monitoring, managing ML features across offline and online environments, and ensuring a reproducible ML pipeline.
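One simple way to approach reproducibility is to record a fingerprint of everything that determines a pipeline run. The sketch below is an assumption about how this could be done, not a prescribed MLOps practice: it hashes a code version, a data snapshot identifier, and the parameters, all of which are hypothetical inputs.

```python
import hashlib
import json


def pipeline_fingerprint(code_version, data_snapshot, params):
    """Return a stable SHA-256 hex digest for one pipeline run definition."""
    payload = json.dumps(
        {"code": code_version, "data": data_snapshot, "params": params},
        sort_keys=True,  # key order must not change the fingerprint
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Two runs with the same fingerprint were defined by the same code, data, and parameters, so a change in model quality between differing fingerprints can be traced back to one of those three inputs.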

ML pipeline configuration should be concise and abstract away infrastructure details. Kubernetes is a popular solution for orchestrating ML workloads, and configuration files in a format such as YAML can provide a clear interface between users and the platform.
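The idea of a concise user-facing configuration can be sketched like this: the user fills in a handful of fields, and the platform expands them into a full Kubernetes Job manifest. The `TrainingJobConfig` fields, the default resource values, and the container name are hypothetical; the manifest is built as a plain dict that could be serialized to YAML or JSON.

```python
from dataclasses import dataclass


@dataclass
class TrainingJobConfig:
    """The small surface an ML engineer fills in (fields are illustrative)."""
    name: str
    image: str
    command: list
    cpu: str = "2"
    memory: str = "4Gi"


def to_k8s_job(cfg):
    """Expand the short config into a Kubernetes batch/v1 Job manifest (as a dict)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": cfg.name},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed training run automatically
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": "trainer",
                        "image": cfg.image,
                        "command": list(cfg.command),
                        "resources": {
                            "limits": {"cpu": cfg.cpu, "memory": cfg.memory},
                        },
                    }],
                }
            },
        },
    }
```

With this split, platform-level choices such as the restart policy stay in `to_k8s_job`, and users never need to see them.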

Implementing MLOps helps manage technical debt, reduces cognitive load for ML engineers, and ensures scalability and observability of ML systems.

What's Next

MLOps is an ongoing process, and there are many sub-topics to explore, such as hyperparameter optimization and distributed training architecture. The ML landscape is constantly evolving, with trends such as serviceless data platforms, AI-driven feature engineering, and Model-as-a-Service.

To stay competitive and leverage AI for your company’s advantage, consider implementing MLOps principles and exploring AI solutions that align with your needs.

List of Useful Links:

- From Data Platform to ML Platform

- Towards Data Science – Medium
