In this blog post we walk you through our journey creating an LLM-based code writing agent from scratch, fine-tuned for your needs and processes, and we share what we learned about improving it iteratively.

Introduction

This article is the second part in our series on Coding Agents. The first part provides an in-depth look at existing solutions, exploring their unique attributes and inherent limitations. We recommend starting there for the full picture.

Riding the wave of AutoGPT’s initial popularity surge, we embarked on a mission to uncover its potential for more complex software development projects. Our focus was Data Science, a domain close to our hearts.

Upon realizing the pitfalls of AutoGPT and other Coding Agents, we decided to create an innovative tool of our own. However, it turned out that other powerful solutions had entered the arena in the meantime, nudging us to test all the agents on a common benchmark.

In this article, we invite you on a journey through the evolution of our own AI Agent solutions, from humble beginnings with a basic model to advanced context retrieval. We’ll evaluate both our agents and those currently available to the public, and we’ll also reveal the challenges and limitations we encountered during the development process. Finally, we’ll share the insights and lessons learned from our experience. So join us as we navigate the realm of Coding Agent development, detailing the peaks, the valleys, and all the intricate details in between.

Creating a Data Scientist Agent

All of the agents that we presented in the previous article were mainly evaluated on pure software engineering tasks such as building simple games or writing web servers with a REST API. But when tested on traditional Data Science problems such as image classification, object detection or sentiment analysis, their performance was far from perfect. That’s why we decided to build our own Agent that would be better at solving Data Science problems.

From the beginning, we determined the two principal approaches to be explored:

Approach A: The agent will initially generate the complete plan and subsequently execute it.

Approach B: The agent will iteratively create a plan for problem-solving.

We utilized the GPT-3.5 and GPT-4 models to engineer our coding agents. We did explore other models, like Claude 2, but their output paled in comparison to the quality of code produced by the OpenAI models.

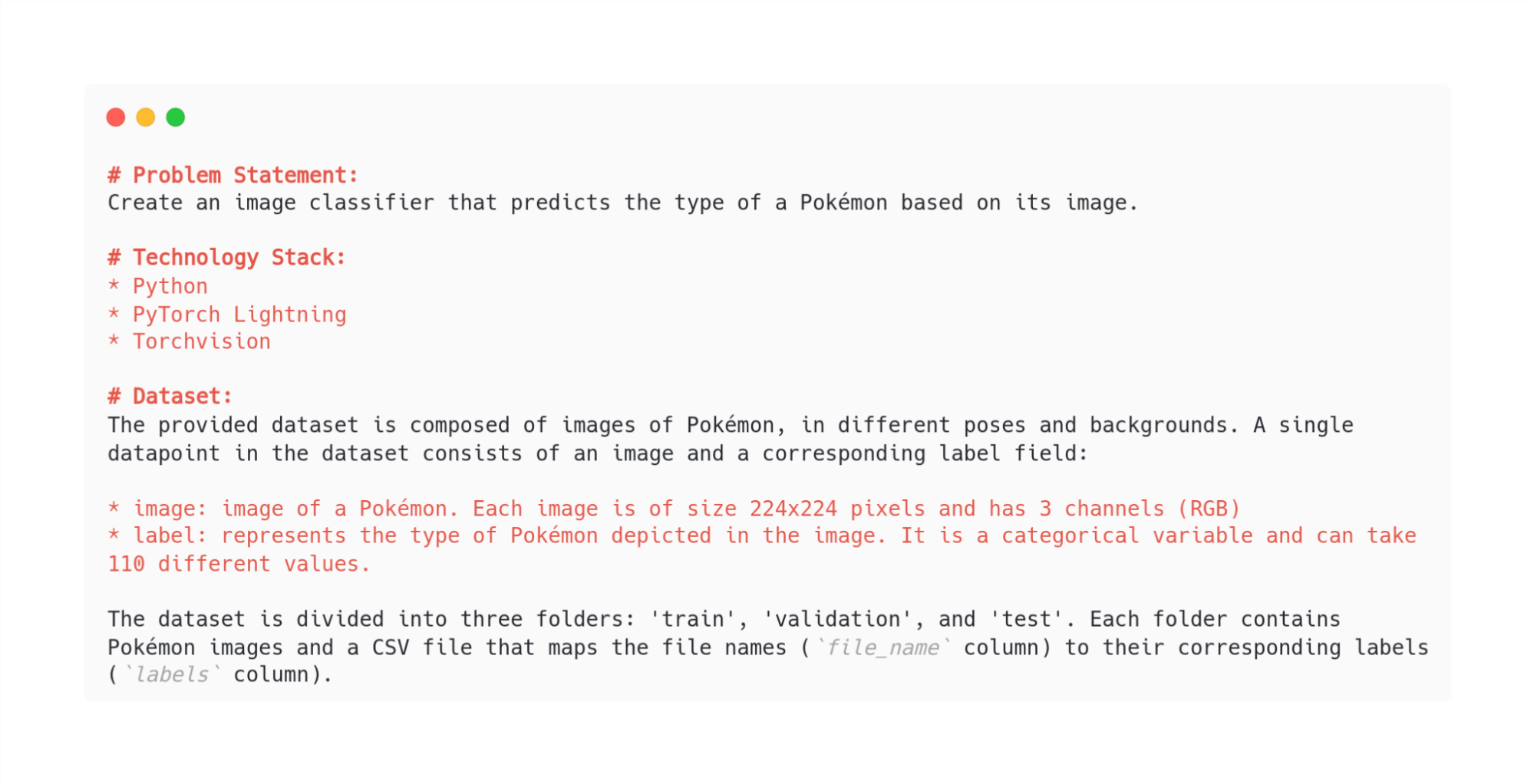

We evaluated the agents on several benchmarks, each of which consisted only of an initial objective description. Figure 1 shows the description of the simplest of the seven benchmarks we tested. This benchmark serves as the running example for demonstrating how the agents work in the next sections of this blog post.

…

Approach A: Generating the plan upfront

In this section, we’ll walk you through how our AI Agent evolved over time. We started with a basic implementation and gradually improved it until we reached a point where the agent could successfully write code and tests, refactor previously written code, and much more.

Baseline

We started by implementing a baseline agent to quickly assess whether this path made sense. Our baseline is one of the simplest approaches you can build. It relies heavily on the underlying models and on the initial problem description provided by the user. For example, we asked the models to produce a plan as valid JSON, only to discover that they often failed to do so, returning JSON that couldn’t be parsed. As a result, the entire program would fail (and you can imagine how challenging it is to carry out a Data Science project without a proper plan!).
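To make the failure mode concrete, here is a minimal sketch of one way to guard against unparsable plans: validate the reply and ask again when parsing fails. This is an illustration, not the implementation described in this post. The `ask_model` callable, the prompt wording, and the assumption that a plan is a JSON array of strings are all hypothetical.

```python
import json
from typing import Callable


def parse_plan(raw: str) -> list[str]:
    """Extract a JSON array of step descriptions from a model reply."""
    # Models often wrap JSON in prose, so keep only the outermost [...] span.
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end <= start:
        raise ValueError("no JSON array found in reply")
    plan = json.loads(raw[start : end + 1])
    if not isinstance(plan, list) or not all(isinstance(s, str) for s in plan):
        raise ValueError("plan must be a JSON array of strings")
    return plan


def request_plan(
    ask_model: Callable[[str], str],  # hypothetical wrapper around an LLM call
    objective: str,
    max_attempts: int = 3,
) -> list[str]:
    """Ask for a plan and retry with feedback when the reply cannot be parsed."""
    base_prompt = f"Return a JSON array of steps for: {objective}"
    prompt = base_prompt
    for _ in range(max_attempts):
        reply = ask_model(prompt)
        try:
            return parse_plan(reply)
        except ValueError as err:  # json.JSONDecodeError is a ValueError subclass
            # Feed the parse error back so the next attempt can correct it.
            prompt = f"{base_prompt}\nYour previous reply was invalid ({err}). Reply with JSON only."
    raise RuntimeError("model never returned a parsable plan")
```

With a guard like this, a single malformed reply costs one extra model call instead of crashing the whole run.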

…

Improving planning and context retrieval

In the next iteration, we paid more attention to reducing the size of the input context, thus optimizing latency and cost. This time, instead of returning the full files in the context, we decided to divide the files into chunks and return only the useful ones to the model.
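The idea of returning only the useful chunks can be sketched as follows. This is a simplified illustration under stated assumptions: chunks are fixed-size groups of lines, and "useful" is approximated by keyword overlap with the current task description. The post does not specify the actual splitting or ranking method, so the function names, the chunk size, and the scoring rule are all hypothetical.

```python
import re


def split_into_chunks(text: str, max_lines: int = 20) -> list[str]:
    """Split a file into consecutive chunks of at most max_lines lines."""
    lines = text.splitlines()
    return ["\n".join(lines[i : i + max_lines]) for i in range(0, len(lines), max_lines)]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and collect identifier-like tokens."""
    return set(re.findall(r"[a-z_]+", text.lower()))


def select_chunks(
    files: dict[str, str],  # path -> file contents
    query: str,             # e.g. the description of the current task
    top_k: int = 3,
    max_lines: int = 20,
) -> list[tuple[str, str]]:
    """Return up to top_k (path, chunk) pairs that share the most tokens with the query."""
    query_tokens = tokenize(query)
    scored = []
    for path, text in files.items():
        for chunk in split_into_chunks(text, max_lines):
            overlap = len(query_tokens & tokenize(chunk))
            if overlap:  # skip chunks that share nothing with the query
                scored.append((overlap, path, chunk))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(path, chunk) for _, path, chunk in scored[:top_k]]
```

Only the selected chunks would then be placed in the prompt, which keeps the context short regardless of how large the project grows. A production system would more likely rank chunks by embedding similarity than by raw token overlap.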

…

Approach B: Generating a plan on the fly

This approach is different from the others, because it is inspired by the fact that developers are never able to plan every little step that will be taken when starting a new project. Python scripts are not written once from top to bottom, but instead are constantly changed and improved.
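In code, the difference from planning upfront is that the next step is chosen only after the outcome of the previous one is known. The loop below is a rough sketch of that control flow, not the agent's actual implementation. The `next_step` and `execute` callables are hypothetical stand-ins for a model call and a code runner.

```python
from typing import Callable, Optional


def run_iteratively(
    next_step: Callable[[str, list[str]], Optional[str]],  # hypothetical: asks the model what to do next
    execute: Callable[[str], str],                          # hypothetical: performs the step, returns its outcome
    objective: str,
    max_steps: int = 10,
) -> list[str]:
    """Plan one step at a time, feeding earlier outcomes back into planning."""
    history: list[str] = []
    for _ in range(max_steps):
        step = next_step(objective, history)
        if step is None:  # the planner signals that the objective is met
            break
        outcome = execute(step)
        history.append(f"{step} -> {outcome}")
    return history
```

The `max_steps` cap is a safeguard against a planner that never declares the objective complete.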

…

Evaluation

To measure and compare our open-source AI agent against others, we prepared a set of benchmark tasks. We evaluated the code writing agents on a total of seven small-scale data science projects, which included tasks like:

…

Once the agents completed their tasks, we evaluated the outcomes of the projects they generated. The evaluation was undertaken in two steps:

…

Limitations

While AI Agents show surprising autonomy when performing coding tasks, they still face several limitations. Some of the critical ones are:

…

Lessons learned

Here are some insights and tricks from our experiments that you may find useful when experimenting with your own AI agents:

…

Creating Coding Agents: final thoughts

In this article, we presented the process of creating our own Coding Agents and compared them to the currently available solutions. Initially, our solution surpassed the available counterparts. However, the field moves quickly, and new contenders soon appeared, such as MetaGPT, which at the time of writing is more efficient than the other available agents and is an equal rival to ours.

…

Interested in streamlining your projects with AI-based software development? Feel free to reach out to us for comprehensive solutions tailored to your specific needs.

The post Creating your own code writing agent. How to get results fast and avoid the most common pitfalls appeared first on deepsense.ai.
