Challenges in AI Decision-Making

A key challenge in artificial intelligence is extending language models’ decision-making skills beyond simple, single-turn interactions. Traditional large language models (LLMs) are good at generating responses, but they often struggle with complex, multi-step problem-solving and with adapting to changing environments. One cause is training data that does not accurately represent the structured, sequential interactions found in real-world situations. Gathering such data in the real world can also be expensive and risky. There is therefore a need for methods that let LLMs explore, gather information, and make informed decisions safely.

PAPRIKA: A New Approach

Researchers at Carnegie Mellon University have introduced PAPRIKA, a method designed to improve language models’ general decision-making abilities across a range of environments. Instead of relying solely on traditional training data, PAPRIKA uses synthetic interaction data generated from a variety of tasks, including guessing games and customer service simulations. Training on these diverse tasks teaches the model to adapt its behavior to feedback it receives in context, without further parameter updates, so the strategy it learns carries over to new tasks.
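
To make "synthetic interaction data" concrete, here is a minimal sketch of how one multi-turn trajectory could be recorded from a guessing game. It is an illustration only, not PAPRIKA's code: the `GuessingGame` class, the `binary_search_agent` function (a stand-in for an LLM policy), and the trajectory record format are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GuessingGame:
    """Toy environment (hypothetical): guess a hidden integer in [low, high]."""
    secret: int
    low: int = 1
    high: int = 100
    max_turns: int = 10
    turns: list = field(default_factory=list)  # (guess, feedback) pairs

    def step(self, guess: int) -> str:
        """Return textual feedback and log the turn."""
        if guess == self.secret:
            feedback = "correct"
        elif guess < self.secret:
            feedback = "higher"
        else:
            feedback = "lower"
        self.turns.append((guess, feedback))
        return feedback


def binary_search_agent(history, low, high):
    """Stand-in for an LLM policy: narrows the range using past feedback."""
    for guess, feedback in history:
        if feedback == "higher":
            low = max(low, guess + 1)
        elif feedback == "lower":
            high = min(high, guess - 1)
    return (low + high) // 2


def rollout(env: GuessingGame) -> dict:
    """Play one episode and return it as a trajectory record."""
    success = False
    for _ in range(env.max_turns):
        guess = binary_search_agent(env.turns, env.low, env.high)
        if env.step(guess) == "correct":
            success = True
            break
    return {"turns": list(env.turns), "success": success,
            "num_turns": len(env.turns)}


trajectory = rollout(GuessingGame(secret=37))
print(trajectory)
```

The point of the sketch is the shape of the data: each turn depends on the feedback from earlier turns, which is the kind of structure single-turn training examples do not capture.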

Technical Details and Benefits

PAPRIKA employs a two-stage fine-tuning process. The first stage generates a wide range of synthetic trajectories using a method called Min-p sampling, which keeps the training data diverse yet coherent. The second stage refines the model on these trajectories through supervised fine-tuning and preference optimization, allowing it to learn from successful decision-making behaviors.
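
As a rough illustration of Min-p sampling, the sketch below keeps only tokens whose probability is at least a fraction `p_base` of the most likely token's probability, renormalizes, and samples from what remains. This is the general technique rather than PAPRIKA's actual implementation; the function names, the default `p_base` and `temperature` values, and the choice to apply temperature before filtering are assumptions made for the example.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities (numerically stable)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_filter(probs, p_base=0.1):
    """Zero out tokens with prob < p_base * max(probs), then renormalize."""
    threshold = p_base * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    total = sum(kept)  # never zero: the most likely token always survives
    return [p / total for p in kept]


def sample_min_p(logits, p_base=0.1, temperature=1.0, rng=random):
    """Sample one token index from the min-p filtered distribution."""
    probs = min_p_filter(softmax(logits, temperature), p_base)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# The threshold here is 0.2 * 0.5 = 0.1, so the last token (0.05) is dropped.
filtered = min_p_filter([0.5, 0.3, 0.15, 0.05], p_base=0.2)
print(filtered)
```

Because the cutoff scales with the top token's probability, the filter is strict when the model is confident and permissive when it is uncertain, which is why it pairs well with higher temperatures when diverse samples are wanted.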

Additionally, PAPRIKA incorporates a curriculum learning strategy that selects tasks based on their learning potential, enhancing data efficiency and improving the model’s ability to generalize its decision-making strategies across different contexts.
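
One way to picture task selection by learning potential is sketched below. It rests on two assumptions that the text above does not confirm: that learning potential can be approximated by the coefficient of variation of episode rewards (tasks the model always solves or always fails score zero), and that tasks are chosen with a UCB-style rule that adds a bonus for rarely sampled tasks. The function names and the `stats` layout are hypothetical.

```python
import math
import statistics


def learning_potential(rewards):
    """Assumed proxy: coefficient of variation of rewards (0 if mean is 0)."""
    mean = statistics.fmean(rewards)
    if mean == 0:
        return 0.0
    return statistics.pstdev(rewards) / mean


def select_task(stats, total_pulls, exploration=1.0):
    """UCB-style choice: estimated potential plus an exploration bonus."""
    def score(name):
        s = stats[name]
        if s["pulls"] == 0:
            return math.inf  # always try an unsampled task first
        bonus = exploration * math.sqrt(2 * math.log(total_pulls) / s["pulls"])
        return s["potential"] + bonus
    return max(stats, key=score)


# Rewards from a handful of sampled episodes per task (illustrative values).
episode_rewards = {
    "easy": [1, 1, 1, 1],    # always solved: nothing left to learn
    "hard": [0, 0, 0, 0],    # never solved: no signal to learn from
    "medium": [1, 0, 1, 0],  # mixed outcomes: most informative
}
stats = {
    name: {"pulls": 1, "potential": learning_potential(r)}
    for name, r in episode_rewards.items()
}
chosen = select_task(stats, total_pulls=len(stats))
print(chosen)
```

The design intuition is that sampling effort is best spent on tasks where outcomes vary, since those yield both successful and unsuccessful trajectories to contrast.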

Results and Insights

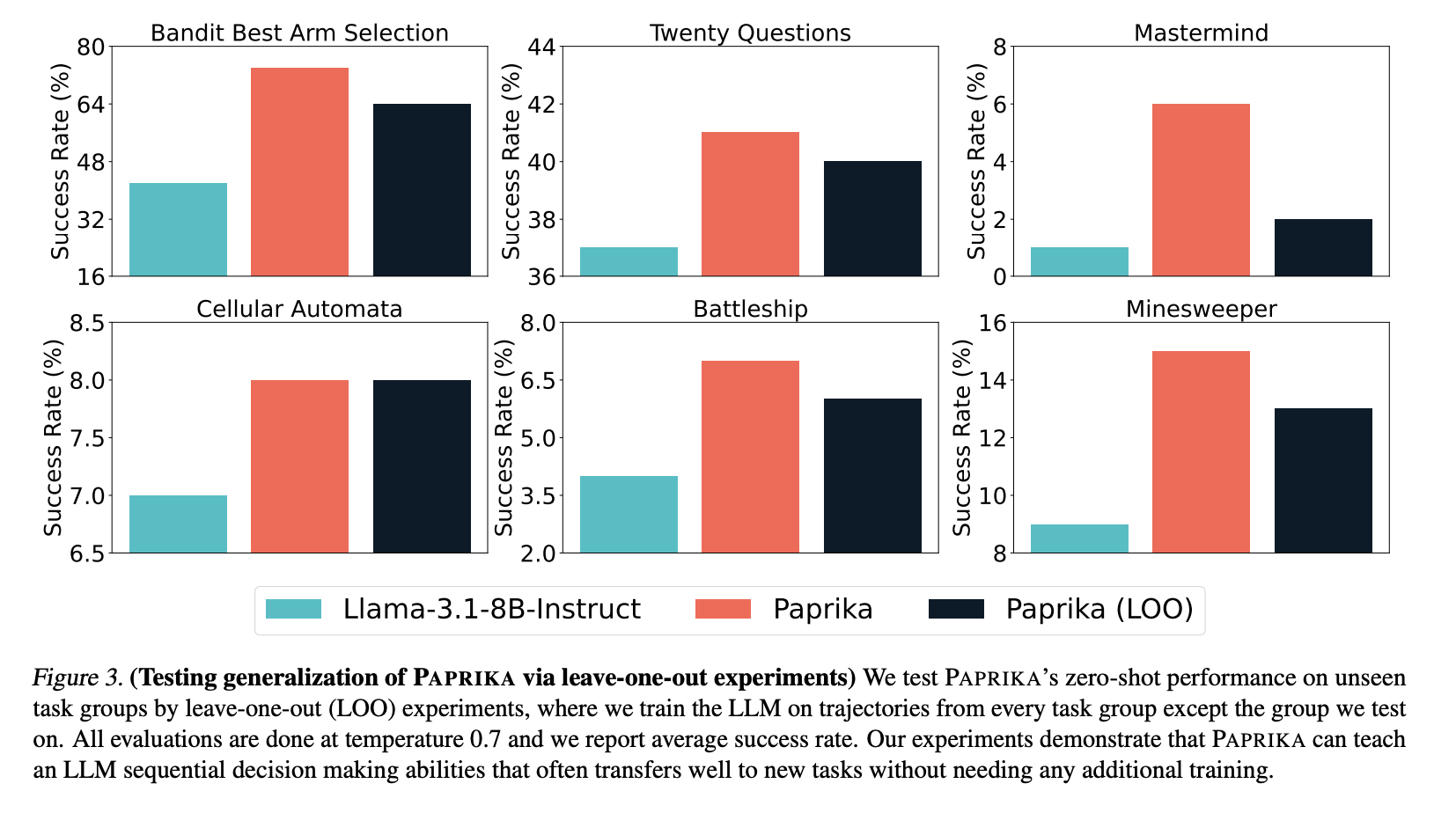

The reported results support the approach. In a task requiring strategic decision-making, for instance, PAPRIKA significantly improved the success rate. Overall, training on diverse task trajectories led to a 47% performance increase over the baseline models.

Further evaluations showed that the decision-making strategies learned through PAPRIKA carry over to tasks not seen during training, indicating that the model’s capabilities transfer across scenarios. The curriculum learning experiments also showed that selectively sampling tasks based on difficulty can lead to further improvements.

Conclusion

PAPRIKA offers a strategic approach to bridging the gap between static language understanding and dynamic decision-making. By using synthetic interaction data and a structured fine-tuning process, CMU researchers have shown that LLMs can become more adaptable decision-makers. This method prepares models to tackle new challenges with minimal additional training, enhancing their ability to operate autonomously in complex environments.

While challenges remain, such as ensuring a solid starting model and managing the costs of synthetic data generation, PAPRIKA represents a promising direction for developing versatile AI systems capable of sophisticated decision-making.

Explore Further

Check out the Paper, GitHub Page, and Model on Hugging Face. All credit for this research goes to the project researchers.