Understanding Multimodal Large Language Models (MLLMs)

Multimodal Large Language Models (MLLMs) are advanced AI systems that can understand both text and visual information. However, they struggle with detailed tasks like object detection, which is essential for applications such as self-driving cars and robots. Current models, like Qwen2-VL, show low performance, detecting only 43.9% of objects in the COCO dataset. This issue arises from conflicting tasks in perception and understanding, along with limited training data.

Challenges in Current Models

Traditional methods for improving perception in MLLMs often involve using bounding box coordinates. While these methods work with text understanding, they lead to errors and inaccuracies in object detection. Current frameworks may change how objects are detected but are not robust enough for real-world applications. Additionally, the training datasets do not effectively support both perception and understanding tasks.

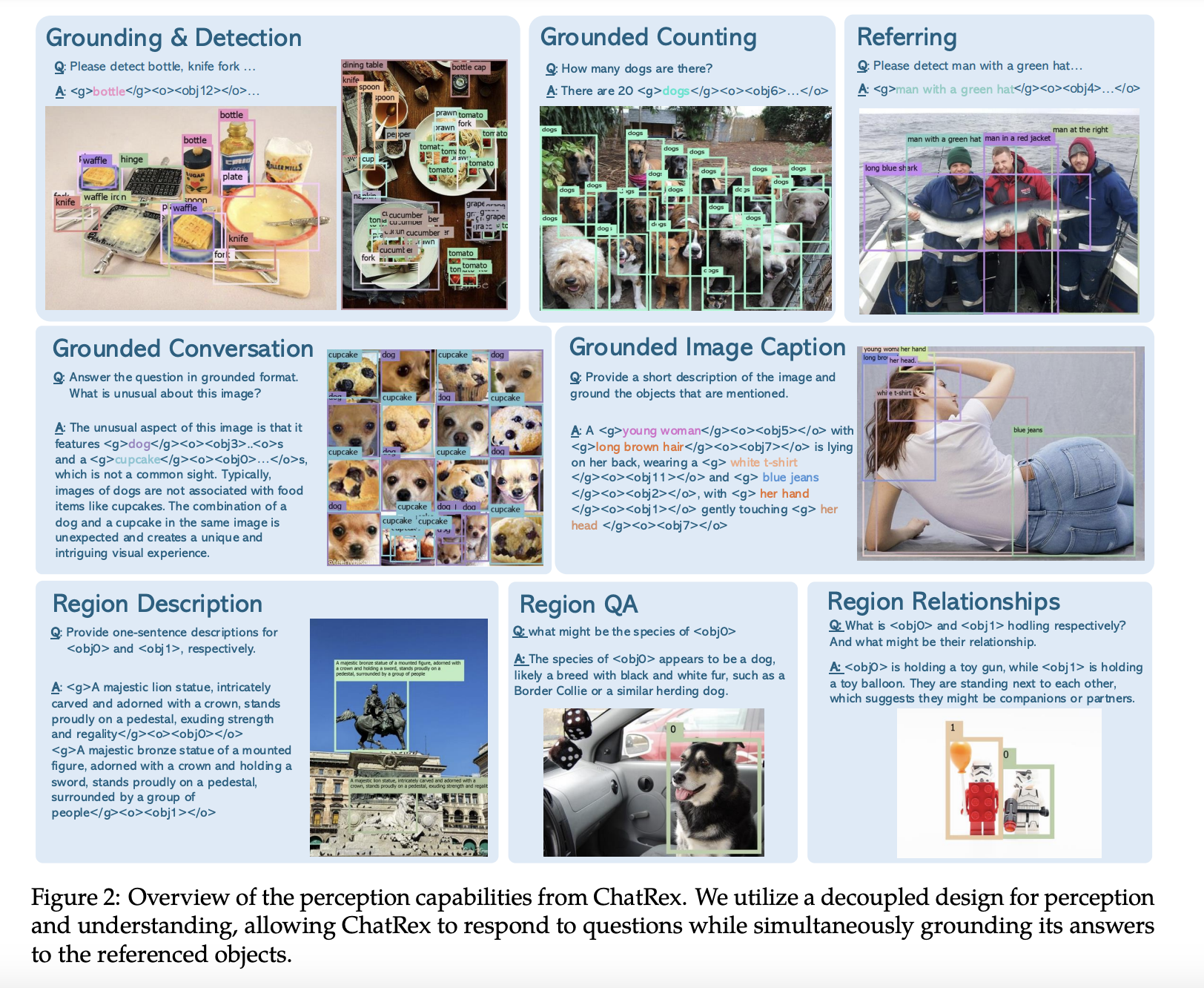

Introducing ChatRex

To address these challenges, researchers from the International Digital Economy Academy (IDEA) created ChatRex. This advanced MLLM features a unique design that separates perception from understanding tasks. ChatRex uses a retrieval-based framework for object detection, treating it as a process of retrieving bounding box indices rather than predicting coordinates directly. This innovative approach reduces errors and improves detection accuracy.

Key Features of ChatRex

- Universal Proposal Network (UPN): Generates detailed bounding box proposals, addressing ambiguities in object representation.

- Dual-Vision Encoder: Combines high-resolution and low-resolution visual features to enhance object detection precision.

- Rexverse-2M Dataset: A large collection of over two million annotated images that ensures balanced training across perception and understanding tasks.

Performance and Applications

ChatRex outperforms existing models in object detection, achieving higher precision, recall, and mean Average Precision (mAP) scores on datasets like COCO and LVIS. It accurately connects descriptive text to the right objects, excels at generating relevant image captions, and handles complex interactions between text and visuals effectively.

Why Choose ChatRex?

ChatRex is the first MLLM to effectively resolve the long-standing issues between perception and understanding tasks. Its innovative design and extensive training dataset set a new benchmark for MLLMs, enabling precise object detection and rich contextual understanding. This dual capability opens up new possibilities in dynamic environments, showcasing how effective integration of perception and understanding can maximize the potential of multimodal systems.

Get Involved and Learn More

Check out the Paper and GitHub Page. All credit for this research goes to the dedicated researchers behind it. Stay connected with us on Twitter, join our Telegram Channel, and participate in our LinkedIn Group. If you appreciate our work, subscribe to our newsletter. Join our thriving community of over 55k on ML SubReddit.

Transform Your Business with AI

Stay competitive by leveraging ChatRex: A Multimodal Large Language Model (MLLM) with a Decoupled Perception Design. Discover how AI can revolutionize your work processes:

- Identify Automation Opportunities: Find key customer interactions that can benefit from AI.

- Define KPIs: Ensure measurable impacts from your AI initiatives.

- Select an AI Solution: Choose tools that fit your needs and allow customization.

- Implement Gradually: Start with a pilot project, collect data, and expand AI usage wisely.

For AI KPI management advice, connect with us at hello@itinai.com. For ongoing insights into leveraging AI, follow us on Telegram or Twitter.

Enhance Your Sales and Customer Engagement

Discover how AI can transform your sales processes and improve customer interactions. Explore solutions at itinai.com.