Introduction to Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) is a robust methodology that enhances the capabilities of large language models (LLMs) by merging their creative generation skills with retrieval systems’ factual accuracy. This integration addresses a common issue in LLMs: hallucination, or the generation of false information.

Business Applications

Implementing RAG can significantly improve the accuracy of responses in various business contexts, such as:

- Domain-specific assistants

- Customer support systems

- Any application where reliable information from documents is crucial

Step-by-Step Guide to Building a RAG System

Step 1: Setting Up the Environment

Begin by installing necessary libraries, preferably using Google Colab for ease of setup. Install the following packages:

- transformers

- sentence-transformers

- faiss-cpu

- accelerate

- einops

- langchain

- pypdf

Step 2: Creating a Knowledge Base

For demonstration, create a knowledge base focused on AI concepts. In practical scenarios, this could involve importing data from PDFs, web pages, or databases. Sample topics could include:

- Vector databases

- Embeddings

- RAG systems

Step 3: Loading and Processing Documents

Load the documents into your system and process them into manageable chunks for retrieval purposes.

Step 4: Creating Embeddings

Convert document chunks into vector embeddings using a reliable embedding model. This converts textual data into formats that are machine-readable and conducive for retrieval.

Step 5: Building the FAISS Index

Utilize FAISS to create an index for your embeddings, improving the efficiency of your retrieval process.

Step 6: Loading a Language Model

Select a lightweight open-source language model from Hugging Face that is optimized for CPU use, ensuring accessibility regardless of computing resources.

Step 7: Creating the RAG Pipeline

Develop a function that integrates the retrieval and generation processes, allowing your system to respond to queries effectively by referencing the appropriate documents.

Step 8: Testing the RAG System

Conduct tests using predetermined questions to assess the response quality of your RAG system. Evaluate the relevance and accuracy of the retrieved information.

Step 9: Evaluating and Improving the RAG System

Implement an evaluation function to gauge response quality based on various metrics, including response length and source relevance.

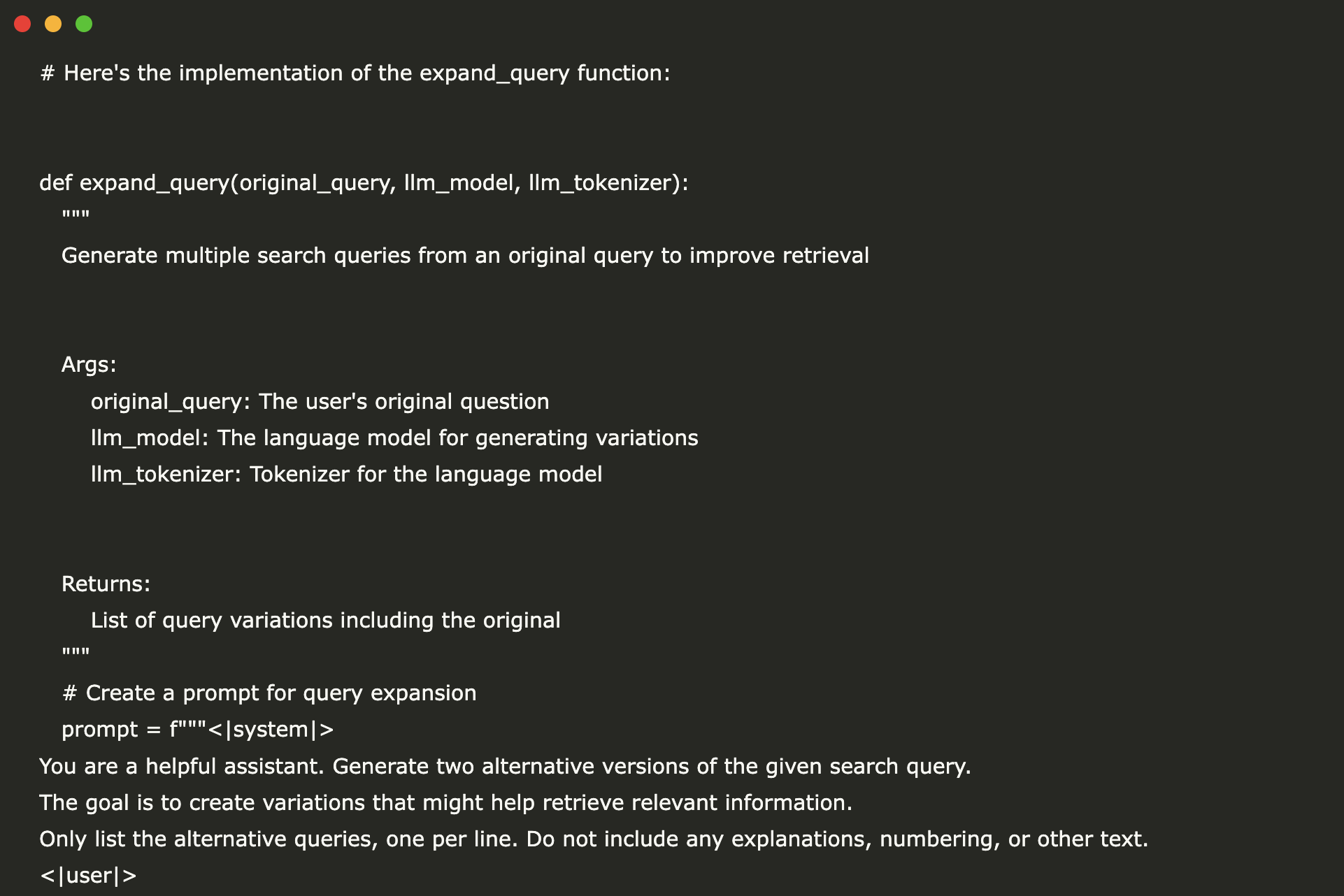

Step 10: Advanced RAG Techniques – Query Expansion

Enhance your retrieval capabilities by implementing query expansion techniques to generate alternative search queries, thus improving the chances of retrieving relevant documents.

Step 11: Continuous Improvement

Regularly assess and refine your RAG system through the implementation of advanced features such as query reranking, metadata filtering, and model fine-tuning for specific domains.

Conclusion

In summary, this tutorial outlines the essential components of building a RAG system using FAISS and an open-source LLM, detailing methods for document processing, embedding generation, and performance evaluation.

Next Steps

Consider exploring additional enhancements to your RAG system, such as:

- Creating a user-friendly web interface

- Scaling with advanced FAISS indexing methods

- Fine-tuning the language model on specific data

Contact Us

If you require assistance with managing AI for your business, please reach out at hello@itinai.ru. You can also connect with us on various platforms:

“`