Understanding Multimodal AI Agents

Multimodal AI agents process several types of data, such as images, text, and video. They are used in robotics and virtual assistants, where they need to both understand and act in digital and physical environments. The goal is to combine verbal and spatial intelligence in a single agent so that it works effectively across many fields.

Challenges with Current AI Models

Most AI systems specialize in either vision-language understanding or robotic manipulation and struggle to combine the two in one model. Because existing models are tailored to specific tasks, they transfer poorly to other applications. The central challenge is to build a single model that can both understand and act across diverse environments.

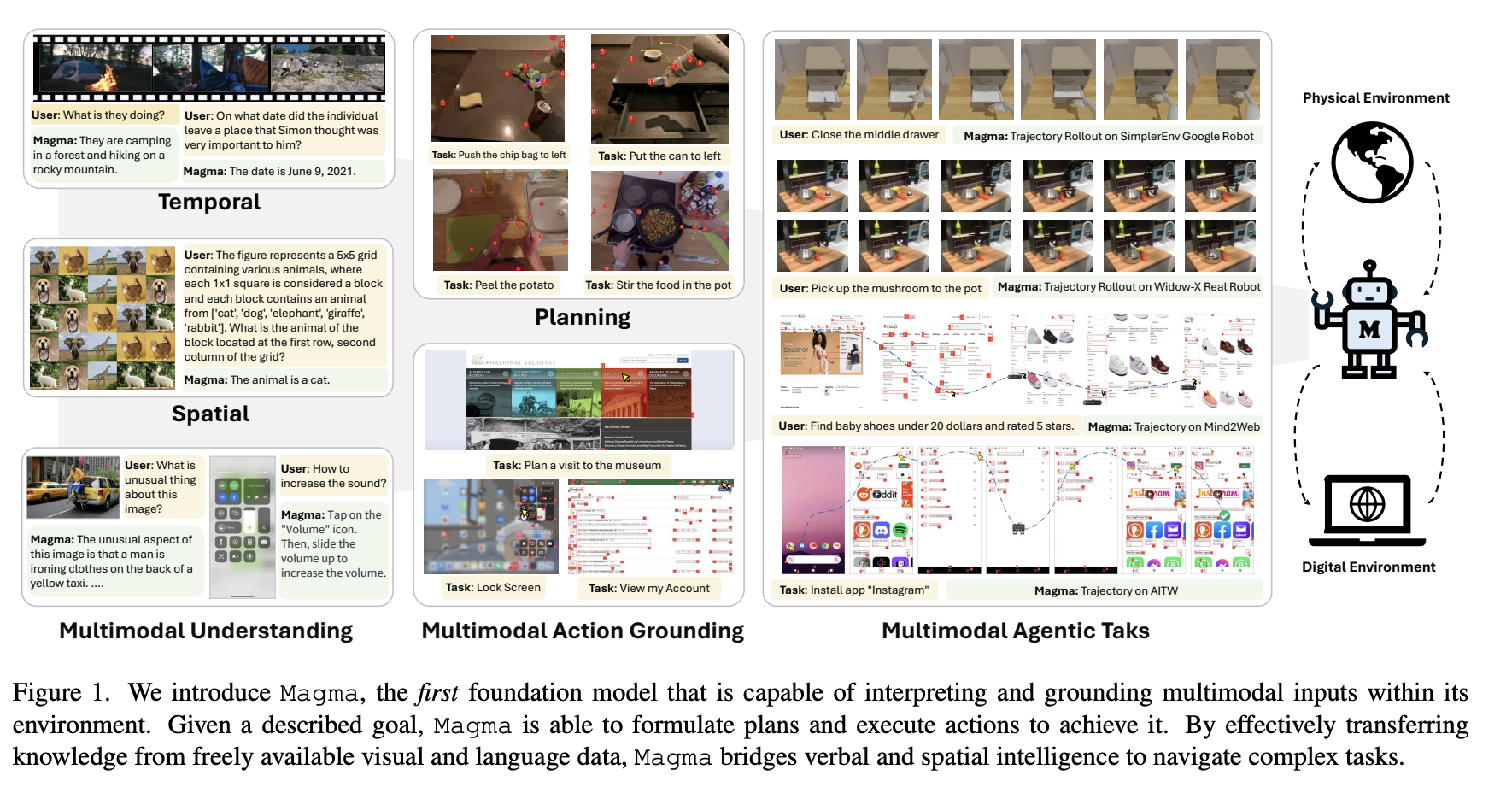

Introducing Magma

Researchers from Microsoft Research and several universities have developed Magma, a foundation model that combines multimodal understanding with action execution. It addresses the limitations of current Vision-Language-Action (VLA) models with a training approach that integrates understanding, action grounding, and planning.

Key Features of Magma

- Set-of-Mark (SoM): Actionable objects in an image, such as clickable buttons in a user interface, are labeled with numbered marks, so the model can ground an action by selecting the right mark.

- Trace-of-Mark (ToM): Marked objects are tracked across video frames, which teaches the model to predict how objects will move and to plan future actions.
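To make the two mark types concrete, here is a minimal Python sketch. It is not Magma's implementation: the `Box` class and the `assign_marks`, `ground_action`, and `trace_of_mark` functions are hypothetical, and the sketch assumes that bounding boxes for actionable regions and per-frame positions of each mark are already available (for example, from a UI parser or a point tracker).

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical helper)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def center(self) -> tuple[float, float]:
        return ((self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2)


def assign_marks(boxes: list[Box]) -> dict[int, Box]:
    """SoM-style labeling: give every actionable region a numeric mark, starting at 1."""
    return {i + 1: box for i, box in enumerate(boxes)}


def ground_action(marks: dict[int, Box], predicted_mark: int) -> tuple[float, float]:
    """Map a mark ID predicted by the model back to a concrete click point (the box center)."""
    if predicted_mark not in marks:
        raise KeyError(f"model predicted unknown mark {predicted_mark}")
    return marks[predicted_mark].center()


def trace_of_mark(track: list[tuple[float, float]], t: int,
                  horizon: int) -> list[tuple[float, float]]:
    """ToM-style target: the positions a mark visits in the `horizon` frames after frame t.

    Assumes `track` already holds one tracked position per frame for this mark.
    """
    return track[t + 1 : t + 1 + horizon]


if __name__ == "__main__":
    buttons = [Box(10, 10, 110, 40), Box(10, 60, 110, 90), Box(130, 10, 230, 40)]
    marks = assign_marks(buttons)
    # Suppose the model answers "Mark 2" for "click the second button".
    print(ground_action(marks, 2))  # (60.0, 75.0)

    track = [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2)]
    print(trace_of_mark(track, 1, 2))  # [(2, 1), (3, 1)]
```

Representing an action as a mark ID rather than raw pixel coordinates turns grounding into a choice among a few labeled candidates, and a trace turns "what happens next" into a short list of future positions the model can learn to predict.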

Training and Performance

Magma was trained on a diverse dataset of 39 million samples, including UI navigation tasks, robotic manipulation data, and instructional videos. SoM and ToM labels applied to this data provide the action-grounding and planning supervision that lets a single model learn across all of these domains.

Impressive Results

Magma's reported results include:

- 57.2% accuracy in selecting UI elements.

- 52.3% success in robotic manipulation tasks.

- 80.0% accuracy in visual question-answering tasks.

- Superior performance in spatial reasoning and video-based reasoning tasks.

Key Takeaways

- Magma combines vision, language, and action in one model.

- It outperforms existing models in various benchmarks.

- Magma is adaptable and can handle many tasks without task-specific fine-tuning.

- Its capabilities can significantly enhance decision-making in robotics, UI automation, and digital assistants.

Explore AI Solutions for Your Business

To stay competitive, consider how Magma and similar AI models can transform your operations:

- Identify Automation Opportunities: Find areas where AI can improve customer interactions.

- Define KPIs: Ensure measurable impacts from your AI initiatives.

- Select an AI Solution: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start small, gather data, and expand your AI usage wisely.
