Understanding Model Merging with TIME Framework

What is Model Merging?

Model Merging combines the strengths of several specialized models into a single model. Copies of a shared base model are fine-tuned separately on different tasks until each becomes an expert, and the experts' weights are then merged. However, new tasks and domains emerge rapidly, and some may not be covered when the experts are first trained. Temporal Model Merging addresses this by integrating new expert knowledge as it becomes available.
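Uniform weight averaging is one of the simplest ways to merge experts that share a base architecture. The following is a minimal sketch, not the TIME authors' code: weights are represented as plain Python lists instead of tensors so the example has no dependencies, and `average_merge` is a hypothetical helper.

```python
from typing import Dict, List

# Toy representation: each model maps parameter names to flat lists of floats
# (a stand-in for real tensors).
Weights = Dict[str, List[float]]


def average_merge(experts: List[Weights]) -> Weights:
    """Merge experts by uniformly averaging each parameter element-wise."""
    if not experts:
        raise ValueError("need at least one expert to merge")
    merged: Weights = {}
    for name in experts[0]:
        # Gather this parameter from every expert and average position by position.
        columns = zip(*(expert[name] for expert in experts))
        merged[name] = [sum(values) / len(experts) for values in columns]
    return merged


# Two toy "experts" fine-tuned from the same base on different tasks.
expert_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
expert_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(average_merge([expert_a, expert_b]))
# {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Averaging only makes sense because all experts start from the same base model, so their parameters line up name by name and position by position.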

Key Questions in Temporal Model Merging

When considering Temporal Model Merging, several important questions arise:

– How does the choice of initialization for training each new expert affect results?

– Which merging techniques work best when applied repeatedly over time?

– Is it beneficial to use different merging strategies for initializing training and for deployment?

This article reviews recent research addressing these questions and explores various aspects of model merging over time.

Introducing TIME Framework

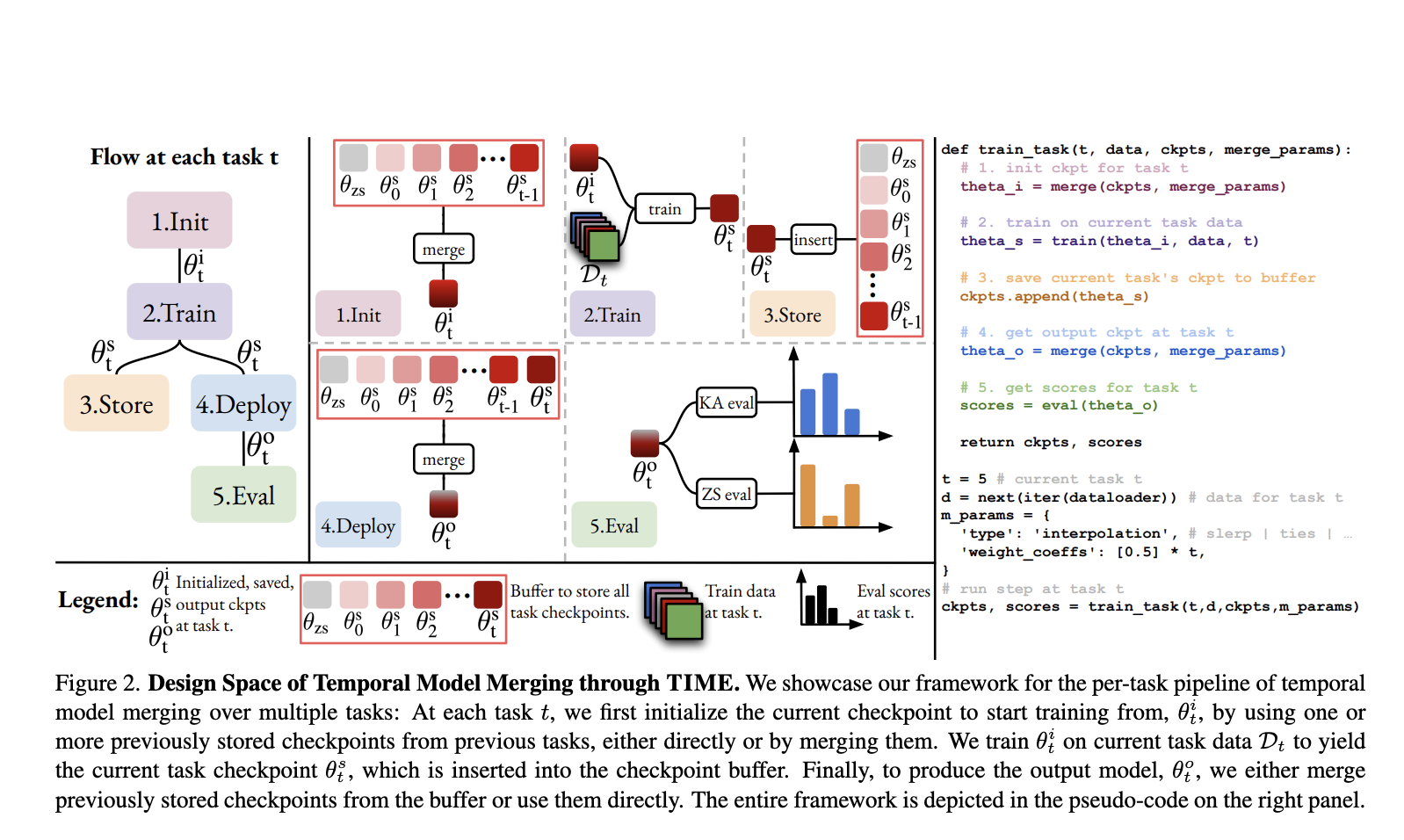

Researchers from the University of Tübingen developed the “TIME” (Temporal Integration of Model Expertise) framework, which organizes temporal model merging along three main axes:

1. Initialization of experts

2. Merging for deployment at specific times

3. Techniques for merging over time

TIME systematically evaluates existing techniques along these axes, covering both standard model merging and continual pretraining methods.

Five-Stage Update Pipeline

The authors propose a five-stage update process for each task in temporal model merging:

1. **Init**: Choose an initialization protocol to create weights at time t.

2. **Train**: Use these weights to train the model on a specific task, creating an expert.

3. **Store**: Save the trained weights as part of the expert model storage.

4. **Deploy**: Select a deployment protocol that turns the stored experts into the weights to be deployed.

5. **Eval**: Use the deployed model for applications and evaluation.
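The five stages can be sketched as a loop over incoming tasks. This is a minimal sketch under stated assumptions: weights are toy dicts of lists, `train` and `evaluate` are caller-supplied stand-ins, and the protocol names (`"base"`, `"last_expert"`, `"merge_all"`) are hypothetical labels for illustrative choices, not the exact protocol set studied in the TIME paper.

```python
from typing import Callable, Dict, List

# Toy weight representation: parameter name -> flat list of floats.
Weights = Dict[str, List[float]]

# Hypothetical protocol names used only for this sketch.
INIT_PROTOCOLS = {"base", "last_expert", "merge_all"}
DEPLOY_PROTOCOLS = {"last_expert", "merge_all"}


def average_merge(models: List[Weights]) -> Weights:
    """Uniform element-wise average of parameters (one possible merge)."""
    return {
        name: [sum(vals) / len(models) for vals in zip(*(m[name] for m in models))]
        for name in models[0]
    }


def temporal_merging_loop(
    base: Weights,
    tasks: List[str],
    train: Callable[[Weights, str], Weights],
    evaluate: Callable[[Weights], float],
    init_protocol: str = "last_expert",
    deploy_protocol: str = "merge_all",
) -> List[float]:
    if init_protocol not in INIT_PROTOCOLS or deploy_protocol not in DEPLOY_PROTOCOLS:
        raise ValueError("unknown init or deploy protocol")
    experts: List[Weights] = []  # expert storage, one entry per task
    scores: List[float] = []
    for task in tasks:
        # 1. Init: pick the starting weights for time t.
        if init_protocol == "base" or not experts:
            init = base
        elif init_protocol == "last_expert":
            init = experts[-1]
        else:  # "merge_all": start from a merge of every stored expert
            init = average_merge(experts)
        # 2. Train: fine-tune on the current task to get a new expert.
        expert = train(init, task)
        # 3. Store: keep the expert's weights.
        experts.append(expert)
        # 4. Deploy: produce the weights served at time t.
        deployed = experts[-1] if deploy_protocol == "last_expert" else average_merge(experts)
        # 5. Eval: score the deployed model.
        scores.append(evaluate(deployed))
    return scores


def toy_train(weights: Weights, task: str) -> Weights:
    # Stand-in for fine-tuning: shift every parameter by +1 regardless of task.
    return {name: [v + 1.0 for v in vals] for name, vals in weights.items()}


def toy_eval(weights: Weights) -> float:
    # Stand-in metric: read off the single toy parameter.
    return weights["w"][0]


print(temporal_merging_loop({"w": [0.0]}, ["task1", "task2", "task3"], toy_train, toy_eval))
# [1.0, 1.5, 2.0]
```

Because `init_protocol` and `deploy_protocol` are independent arguments, the sketch treats initialization and deployment as separate design choices, which is the distinction the TIME framework draws.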

Research Findings

The study used the FOMO-in-Flux benchmark for continual pretraining, which covers both visual and semantic shifts. The base model was a ViT-B/16 CLIP model, and the evaluation metrics included Knowledge Accumulation and Zero-Shot Retention.
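The two metrics can be illustrated with a toy aggregation. This sketch assumes each metric is a plain mean of per-dataset accuracies, with Knowledge Accumulation computed on the datasets the model is adapted to and Zero-Shot Retention on held-out datasets; FOMO-in-Flux's exact aggregation may differ, and the dataset names and accuracies below are made up.

```python
from statistics import mean
from typing import Dict


def summarize(adaptation_acc: Dict[str, float], heldout_acc: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical aggregation of per-dataset accuracies into the two metrics."""
    return {
        # Knowledge Accumulation: accuracy on datasets the model was adapted to.
        "knowledge_accumulation": mean(adaptation_acc.values()),
        # Zero-Shot Retention: accuracy kept on datasets never trained on.
        "zero_shot_retention": mean(heldout_acc.values()),
    }


# Made-up accuracies for illustration only.
print(summarize({"task_a": 0.5, "task_b": 0.75}, {"heldout_x": 0.25, "heldout_y": 0.75}))
# {'knowledge_accumulation': 0.625, 'zero_shot_retention': 0.5}
```

Tracking both numbers side by side exposes the trade-off: a model can gain on the adaptation datasets while losing zero-shot ability on the held-out ones.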

Initial experiments with static offline merging showed only minimal differences among merging strategies, revealing a limit on how much new knowledge offline merging can acquire. Continual training outperformed the offline methods. One effective remedy was to add data replay to offline merging, which raised performance from 54.6% to 58.2%. Another was non-uniform weighting that favors recent tasks, which increased performance to 58.9%.
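The two remedies can be sketched as follows. This is a minimal sketch, not the paper's implementation: `replay_mix` assumes a buffer of past-task examples sampled uniformly at random, and `recency_weights` assumes an exponential decay controlled by a hypothetical `decay` parameter; the paper's actual replay ratio and weighting scheme may differ.

```python
import random
from typing import Dict, List, Sequence

Weights = Dict[str, List[float]]


def replay_mix(new_data: Sequence, buffer: Sequence, replay_fraction: float, rng: random.Random) -> list:
    """Append a random sample of past-task examples to the current task's data."""
    # Replay size is a fraction of the new task's data, capped by the buffer size.
    k = min(len(buffer), int(replay_fraction * len(new_data)))
    return list(new_data) + rng.sample(list(buffer), k)


def recency_weights(num_experts: int, decay: float) -> List[float]:
    """Normalized merge weights; expert i (0 = oldest) gets decay ** (num_experts - 1 - i)."""
    raw = [decay ** (num_experts - 1 - i) for i in range(num_experts)]
    total = sum(raw)
    return [r / total for r in raw]


def weighted_merge(experts: List[Weights], weights: List[float]) -> Weights:
    """Element-wise weighted average of expert parameters."""
    return {
        name: [sum(w * v for w, v in zip(weights, vals)) for vals in zip(*(e[name] for e in experts))]
        for name in experts[0]
    }


rng = random.Random(0)
mixed = replay_mix(new_data=list(range(10)), buffer=list(range(100, 200)), replay_fraction=0.2, rng=rng)
print(len(mixed))  # 12: 10 new examples plus 2 replayed ones

w = recency_weights(3, decay=0.5)  # oldest to newest: 1/7, 2/7, 4/7
experts = [{"w": [0.0]}, {"w": [1.0]}, {"w": [2.0]}]
print(weighted_merge(experts, w))
```

Setting `decay=1.0` gives every expert the same weight, so the recency weighting reduces to plain uniform averaging; values below 1 shift the merge toward the most recent experts.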

The findings confirmed that the choice of merging technique matters less than choosing the right initialization and deployment strategies. The authors also introduced the “BEST-IN-TIME” strategy, which scaled temporal model merging efficiently across different model sizes and tasks.

Conclusion

The TIME framework provides a systematic approach to temporal multimodal model merging, particularly for emerging tasks. It shows that initialization and deployment strategies matter more than the specific merging technique, which has minimal impact on overall results. The study also highlights that continual training is more effective than offline merging.
