Transforming AI with Tokenformer

Unmatched Performance in AI

Transformers have revolutionized artificial intelligence, excelling in natural language processing (NLP), computer vision, and multimodal tasks that combine several data types. Their attention mechanisms make them particularly good at recognizing patterns in complex data.

Challenges in Scaling

However, scaling these models is challenging due to high computational costs. As transformer models grow, they require more hardware resources and longer training times, which can become unmanageable. Researchers are working on innovative methods to improve efficiency without losing performance.

Limitations of Traditional Models

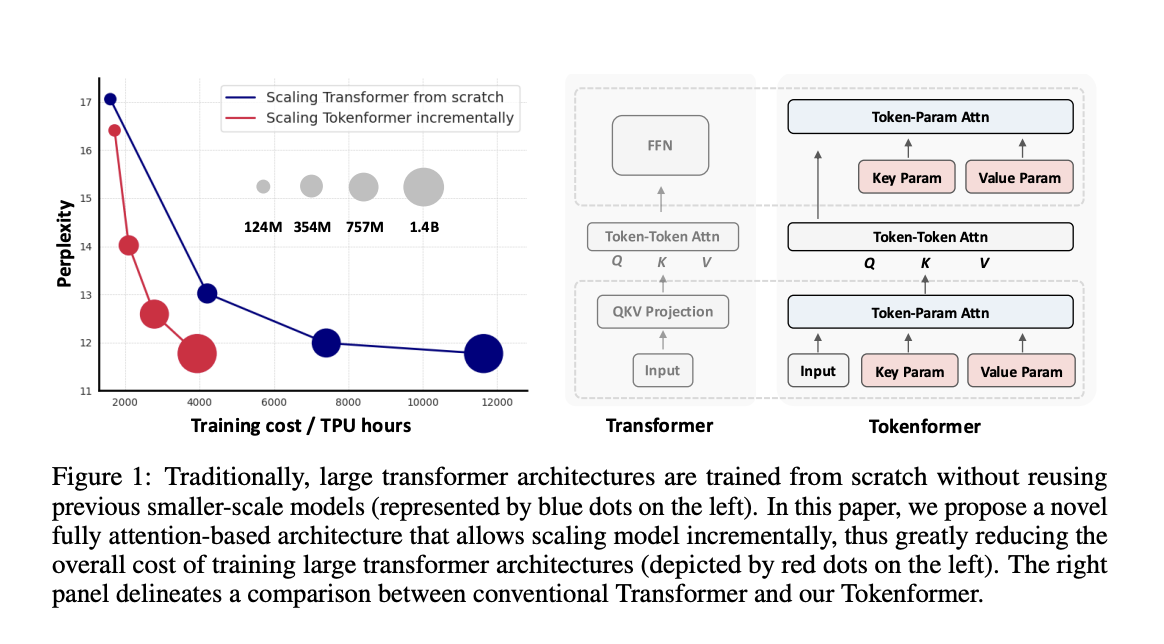

The main obstacle to scaling transformers is that their linear layers have a fixed number of parameters. Changing the architecture, for example by widening a layer, typically means retraining the whole model, which is costly and inflexible.

Innovative Solutions

Earlier scaling methods, such as duplicating existing weights to grow a model, often disrupt what the pre-trained model has learned and slow down training. Although some progress has been made, these methods still struggle to maintain model integrity.

Introducing Tokenformer

Researchers from the Max Planck Institute, Google, and Peking University have developed Tokenformer, a new architecture that treats model parameters as tokens. Input tokens interact with these parameter tokens through attention, so the model can be scaled incrementally without retraining from scratch, which significantly lowers training costs.

How Tokenformer Works

Tokenformer's central component is the token-parameter attention (Pattention) layer. This layer uses input tokens as queries and learnable parameter tokens as keys and values. The model scales by adding new key-value parameter tokens, while the layer's input and output dimensions stay constant.
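
To make the idea concrete, here is a minimal NumPy sketch of a Pattention-style layer. It is an illustration under stated assumptions, not the authors' implementation: the function names, the sizes, and the use of a standard scaled softmax are all choices made for this example, and the published design may normalize the attention scores differently.

```python
import numpy as np

def softmax(scores, axis=-1):
    # Numerically stable softmax along the given axis.
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def pattention(x, key_params, value_params):
    """Token-parameter attention sketch (hypothetical helper).

    x:            (T, d_in)  input tokens, used as queries
    key_params:   (n, d_in)  learnable parameter tokens, used as keys
    value_params: (n, d_out) learnable parameter tokens, used as values
    returns:      (T, d_out)
    """
    scores = x @ key_params.T / np.sqrt(x.shape[-1])  # (T, n)
    weights = softmax(scores, axis=-1)                # assumption: plain softmax
    return weights @ value_params                     # (T, d_out)

rng = np.random.default_rng(0)
d_in, d_out, num_tokens = 8, 16, 5
x = rng.normal(size=(num_tokens, d_in))

# A "small" layer with 32 parameter tokens.
keys = rng.normal(size=(32, d_in))
values = rng.normal(size=(32, d_out))
small = pattention(x, keys, values)

# Scale up by appending 32 more parameter tokens; d_in and d_out are untouched.
keys_big = np.concatenate([keys, rng.normal(size=(32, d_in))])
values_big = np.concatenate([values, rng.normal(size=(32, d_out))])
large = pattention(x, keys_big, values_big)

print(small.shape, large.shape)  # (5, 16) (5, 16)
```

The number of parameter tokens `n` only appears as the inner dimension of the two matrix products, which is why the layer can grow without changing the shape of anything around it.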

Key Benefits of Tokenformer

– **Substantial Cost Savings**: Tokenformer is reported to reduce training costs by over 50% compared to training a traditional transformer from scratch.

– **Incremental Scaling**: The model can add new parameter tokens without modifying the core architecture, allowing for flexibility.

– **Preservation of Knowledge**: It retains information from smaller pre-trained models, speeding up training.

– **Enhanced Performance**: Tokenformer performs competitively across various tasks, making it a versatile foundational model.

– **Optimized Efficiency**: It manages longer sequences and larger models more effectively.
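
The incremental-scaling and knowledge-preservation points above can be illustrated with a small sketch. It rests on assumptions that are not spelled out in this article: the attention scores are L2-normalized and passed through a GeLU instead of a softmax, the scale factor is fixed, and newly added key tokens start at zero. Under those assumptions the new tokens receive zero weight at first, so the grown layer reproduces the smaller layer's output before any further training. All names here are hypothetical.

```python
import numpy as np

def gelu(z):
    # tanh approximation of GeLU; note that gelu(0) == 0.
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z**3)))

def pattention(x, key_params, value_params, scale=1.0):
    # Assumed normalization: L2-normalize each row of scores, then apply GeLU.
    scores = x @ key_params.T                                   # (T, n)
    norm = np.linalg.norm(scores, axis=-1, keepdims=True) + 1e-6
    weights = gelu(scale * scores / norm)                       # (T, n)
    return weights @ value_params                               # (T, d_out)

rng = np.random.default_rng(0)
d_in, d_out = 8, 16
x = rng.normal(size=(5, d_in))

# "Pre-trained" small layer with 32 parameter tokens.
keys = rng.normal(size=(32, d_in))
values = rng.normal(size=(32, d_out))
before = pattention(x, keys, values)

# Grow the layer: new keys start at zero (assumption), so their scores are
# zero, the row norms are unchanged, and gelu(0) = 0 gives them zero weight.
new_keys = np.zeros((16, d_in))
new_values = rng.normal(size=(16, d_out))
keys_big = np.concatenate([keys, new_keys])
values_big = np.concatenate([values, new_values])
after = pattention(x, keys_big, values_big)

print(np.allclose(before, after))  # True
```

With a plain softmax the same trick would not preserve the output exactly, because zero scores still receive non-zero probability mass. That is the reason this sketch swaps in a normalization for which a zero score maps to a zero weight.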

Conclusion

Tokenformer represents a breakthrough in transformer design, achieving scalability and efficiency by treating parameters as tokens. This innovative approach reduces costs while maintaining performance across tasks, paving the way for sustainable and efficient large-scale AI models.
