Introduction to Archon

Artificial intelligence has advanced significantly with Large Language Models (LLMs), impacting areas like natural language processing and coding. Inference-time techniques, which spend extra compute when a model is queried rather than when it is trained, are an effective way to improve LLM output quality. However, the research community is still working out how best to combine these techniques into a single system.

Challenges in LLM Optimization

One major challenge is identifying which inference-time techniques work best for which tasks. Tasks such as instruction-following and reasoning may each call for a different combination of techniques. Understanding how techniques like ensembling, repeated sampling, and ranking interact is vital for maximizing performance. Researchers need a system that can efficiently explore and optimize these combinations for a specific task and a given amount of compute.

Current Approaches

Traditional methods have focused on applying individual techniques to LLMs, such as:

- Generation Ensembling: Querying multiple models at once to find the best response.

- Repeated Sampling: Querying a single model multiple times.

While these methods show promise, each yields only limited improvements when used alone. Frameworks like Mixture-of-Agents (MoA) and LeanStar have tried to combine techniques but still struggle to perform consistently across tasks. This highlights the need for a modular, automated approach to optimizing LLM systems.
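The two techniques listed above can be sketched in a few lines. This is a minimal illustration, not Archon's code: `make_stub_model` is a hypothetical stand-in for a real LLM client, and scoring by response length is only a placeholder for a real quality signal.

```python
import random
from typing import Callable, List

# A "model" here is any function from a prompt to a response string.
Model = Callable[[str], str]


def make_stub_model(name: str, seed: int) -> Model:
    """Hypothetical stand-in for an LLM client; returns canned text."""
    rng = random.Random(seed)

    def call(prompt: str) -> str:
        return f"{name} answer {rng.randint(0, 9)} to: {prompt}"

    return call


def ensemble(models: List[Model], prompt: str) -> List[str]:
    """Generation ensembling: collect one response from each model."""
    return [model(prompt) for model in models]


def repeated_sample(model: Model, prompt: str, n: int) -> List[str]:
    """Repeated sampling: collect n responses from a single model."""
    return [model(prompt) for _ in range(n)]


def pick_best(candidates: List[str], score: Callable[[str], float]) -> str:
    """Return the candidate with the highest score."""
    return max(candidates, key=score)


models = [make_stub_model("model-a", 1), make_stub_model("model-b", 2)]
ensembled = ensemble(models, "What is 2 + 2?")
sampled = repeated_sample(models[0], "What is 2 + 2?", n=3)

# Placeholder scoring: response length. A real system would use a ranker.
best = pick_best(ensembled + sampled, score=len)
print(best)
```

Both functions only produce candidates; the quality of the final answer depends on how the best candidate is chosen, which is why selection steps such as ranking matter.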

Introducing Archon

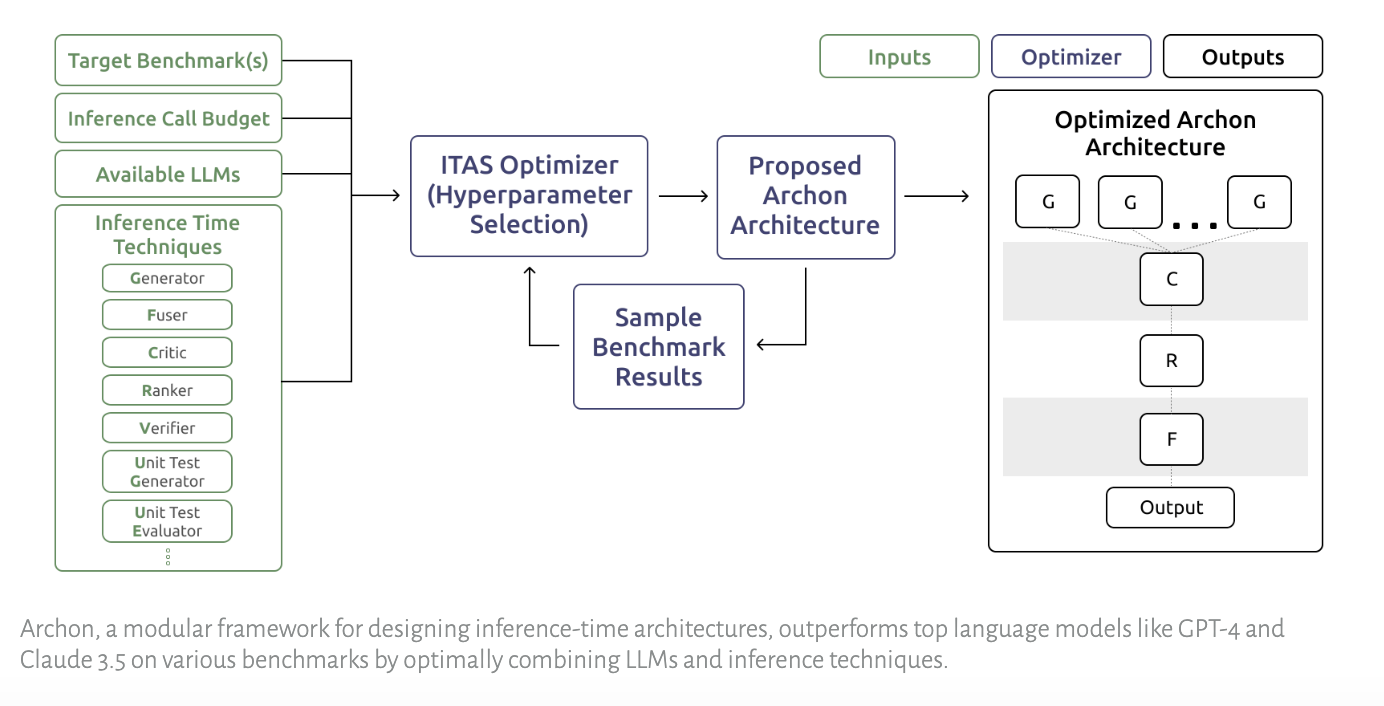

Researchers from Stanford University and the University of Washington have created Archon, a modular framework that automates LLM architecture search using inference-time techniques. Archon combines multiple LLMs and inference-time methods into a single system that, on the benchmarks reported below, outperforms individual models queried with a single call.

How Archon Works

Archon operates as a multi-layered system, where each layer applies a different inference-time technique. For example:

- The first layer generates multiple candidate responses using an ensemble of LLMs.

- Subsequent layers refine these responses through ranking, fusion, or verification.
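The layered design described above might look roughly like the following. This is a sketch under stated assumptions: the names `generator_layer`, `ranker_layer`, `fuser_layer`, and `run_pipeline` are hypothetical and are not Archon's API, and the stub models, scorer, and fuser stand in for real LLM calls.

```python
from typing import Callable, List

# A layer takes the prompt and the current candidates, and returns new candidates.
Layer = Callable[[str, List[str]], List[str]]


def generator_layer(models: List[Callable[[str], str]]) -> Layer:
    """First layer: add one candidate response per model."""
    def layer(prompt: str, candidates: List[str]) -> List[str]:
        return candidates + [model(prompt) for model in models]
    return layer


def ranker_layer(score: Callable[[str, str], float], top_k: int) -> Layer:
    """Refinement layer: keep the top_k candidates by score."""
    def layer(prompt: str, candidates: List[str]) -> List[str]:
        ranked = sorted(candidates, key=lambda c: score(prompt, c), reverse=True)
        return ranked[:top_k]
    return layer


def fuser_layer(fuse: Callable[[str, List[str]], str]) -> Layer:
    """Refinement layer: merge the surviving candidates into one response."""
    def layer(prompt: str, candidates: List[str]) -> List[str]:
        return [fuse(prompt, candidates)]
    return layer


def run_pipeline(layers: List[Layer], prompt: str) -> List[str]:
    """Apply each layer in order, starting with no candidates."""
    candidates: List[str] = []
    for layer in layers:
        candidates = layer(prompt, candidates)
    return candidates


# Stub components for illustration only.
stub_models = [lambda p: "short", lambda p: "a longer answer", lambda p: "mid answer"]
pipeline = [
    generator_layer(stub_models),
    ranker_layer(score=lambda p, c: len(c), top_k=2),
    fuser_layer(fuse=lambda p, cs: " | ".join(cs)),
]
result = run_pipeline(pipeline, "Explain ensembling.")
print(result)
```

Because every layer shares one signature, layers can be reordered, repeated, or dropped without touching the others, which is what makes the architecture searchable.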

Using Bayesian optimization, Archon searches for the best configurations to maximize accuracy, speed, and cost-effectiveness within a given compute budget.
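To make the idea of a budget-constrained configuration search concrete, here is a small sketch. It deliberately uses an exhaustive grid search, not Bayesian optimization, so that it stays dependency-free; Bayesian optimization would replace the loop with a model-guided choice of which configuration to try next. The search space, the cost model (`cost_in_calls`), and the scoring function (`toy_evaluate`) are all hypothetical and do not reflect Archon's actual search space or results.

```python
from itertools import product
from typing import Callable, Dict, Optional, Tuple

Config = Dict[str, int]


def cost_in_calls(cfg: Config) -> int:
    """Hypothetical cost model: number of model calls per query."""
    generation_calls = cfg["num_models"] * cfg["samples_per_model"]
    return generation_calls + cfg["use_ranker"] + cfg["use_fuser"]


def toy_evaluate(cfg: Config) -> int:
    """Hypothetical score; a real search would run a benchmark here."""
    generation_calls = cfg["num_models"] * cfg["samples_per_model"]
    return min(generation_calls, 8) + 2 * cfg["use_ranker"] + 2 * cfg["use_fuser"]


def grid_search(
    evaluate: Callable[[Config], int], budget: int
) -> Tuple[Optional[Config], float]:
    """Try every configuration within budget and keep the best-scoring one."""
    best_cfg: Optional[Config] = None
    best_score = float("-inf")
    for n, s, r, f in product([1, 2, 4], [1, 2, 4], [0, 1], [0, 1]):
        cfg = {"num_models": n, "samples_per_model": s, "use_ranker": r, "use_fuser": f}
        if cost_in_calls(cfg) > budget:
            continue  # skip configurations that exceed the compute budget
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


best_cfg, best_score = grid_search(toy_evaluate, budget=6)
print(best_cfg, best_score)
```

Grid search evaluates every in-budget configuration, which is only practical for tiny spaces like this one. The appeal of Bayesian optimization is that it aims to find good configurations with far fewer evaluations when each one requires running a benchmark.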

Performance Results

Archon was tested on various benchmarks, achieving impressive results:

- Average Accuracy Increase: 15.1 percentage points compared to top models like GPT-4o and Claude 3.5 Sonnet.

- Coding Tasks Improvement: 56% boost in accuracy through unit test generation.

- Open-Source Models: Surpassed single-call state-of-the-art models by 11.2 percentage points.
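The figures above use two different units: percentage points (an absolute difference between two accuracies) and percent (which may denote a relative change). The source does not say which the 56% figure is. The short sketch below shows how the two differ; the input numbers are hypothetical and are not Archon results.

```python
def percentage_point_gain(baseline_pct: float, new_pct: float) -> float:
    """Absolute difference between two accuracies, in percentage points."""
    return new_pct - baseline_pct


def relative_gain_pct(baseline_pct: float, new_pct: float) -> float:
    """Improvement relative to the baseline, in percent."""
    return (new_pct - baseline_pct) * 100.0 / baseline_pct


# Hypothetical accuracies, chosen only to illustrate the arithmetic.
baseline, improved = 50.0, 60.0
points = percentage_point_gain(baseline, improved)
relative = relative_gain_pct(baseline, improved)
print(f"{points} percentage points, {relative}% relative")
```

The same improvement reads as a larger number when stated as a relative gain, so it helps to check which unit a reported result uses before comparing figures.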

Key Takeaways

- Performance Boost: Archon significantly enhances accuracy across benchmarks.

- Diverse Applications: Excels in instruction-following, reasoning, and coding tasks.

- Effective Techniques: Combines ensembling, fusion, ranking, and verification for superior performance.

- Scalability: Modular design allows easy adaptation to new tasks.

Conclusion

Archon meets the need for an automated system that optimizes LLMs by effectively combining various techniques. This framework simplifies the complexities of inference-time architecture design, enabling developers to create high-performing LLM systems tailored to specific tasks. Archon sets a new standard for LLM optimization, offering a systematic approach to achieve top-tier results.
