Zyphra Unveils Zamba2-mini: A State-of-the-Art Small Language Model Redefining On-Device AI with Unmatched Efficiency and Performance

State-of-the-Art Performance in a Compact Package

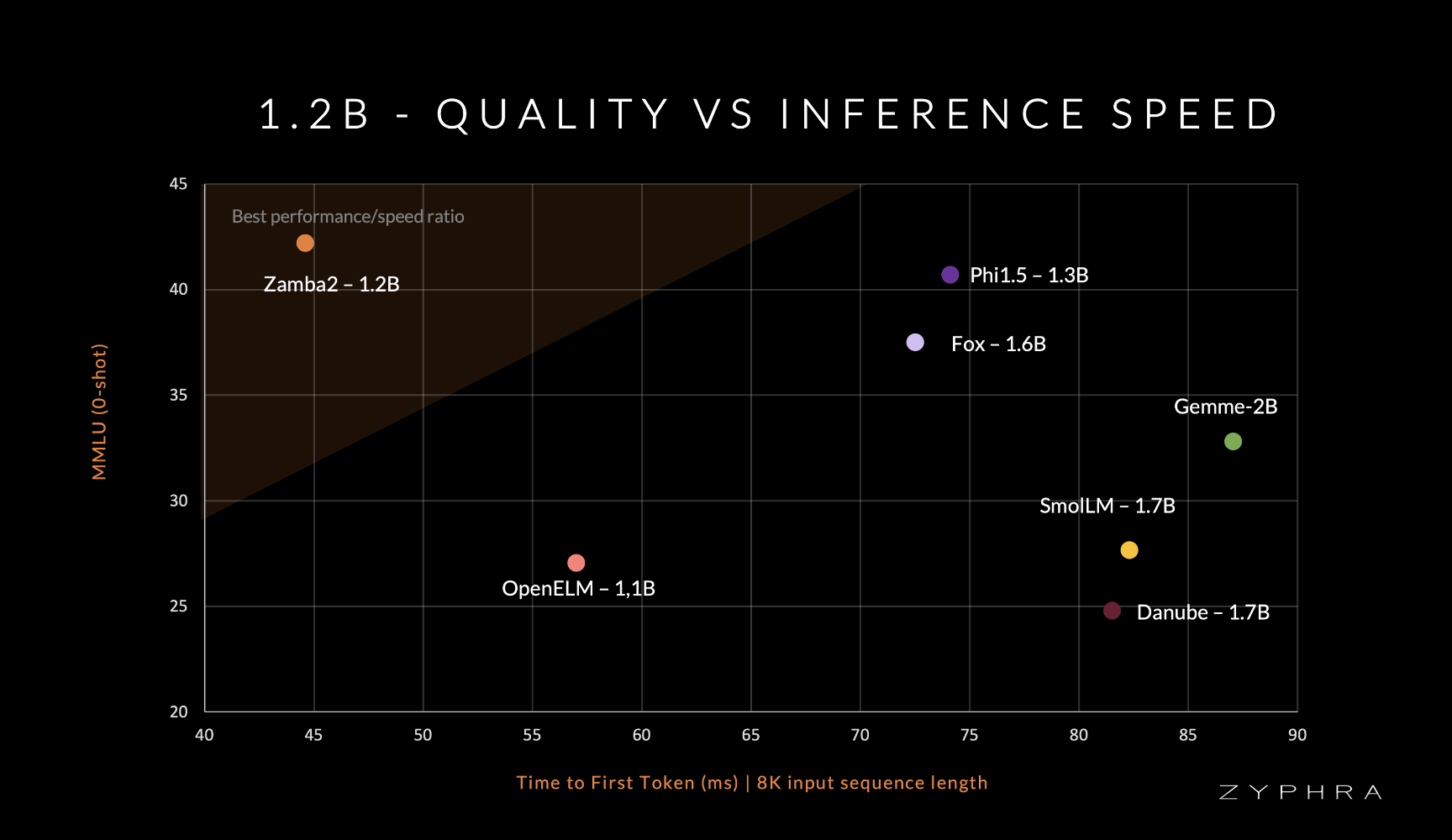

Zyphra has released Zamba2-mini 1.2B, a 1.2-billion-parameter small language model designed for on-device applications. According to Zyphra, it delivers state-of-the-art quality for its size and runs faster than larger models, with lower inference and generation latency.

Innovative Architectural Design

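Zamba2-mini uses a hybrid architecture that combines transformer and RNN elements. Its recurrent (state-space) layers keep a fixed-size state instead of a key-value cache that grows with the sequence, so the model produces high-quality output with lower compute and memory demands. Attention layers are shared across the network to save parameters, and LoRA projection matrices are added so that each use of the shared layers can specialize, restoring expressivity at a small parameter cost.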
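To make the weight-sharing idea concrete, here is a minimal pure-Python sketch of one projection matrix reused at several depths, where each use adds its own low-rank LoRA update, computing x·(W + A·B). It illustrates the general technique under assumed shapes and is not Zyphra's implementation; the function names and the sizes in the parameter count (d_model=2048, rank 8, six invocations) are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (m x k) matrix and a (k x n) matrix."""
    return [
        [sum(a_row[k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for a_row in a
    ]


def shared_lora_projection(
    x: Matrix, shared_w: Matrix, lora_a: Matrix, lora_b: Matrix, scale: float = 1.0
) -> Matrix:
    """Apply a shared weight plus one invocation's low-rank LoRA update.

    Computes x @ W + scale * (x @ A) @ B, which equals x @ (W + scale * A @ B),
    without materialising the full d x d update matrix.
    """
    base = matmul(x, shared_w)                 # same W at every invocation
    delta = matmul(matmul(x, lora_a), lora_b)  # A, B are specific to this invocation
    return [
        [base_v + scale * delta_v for base_v, delta_v in zip(base_row, delta_row)]
        for base_row, delta_row in zip(base, delta)
    ]


def projection_param_counts(d_model: int, rank: int, n_invocations: int) -> Tuple[int, int]:
    """Compare n independent d x d projections with one shared projection
    plus a rank-r LoRA pair (A: d x r, B: r x d) per invocation."""
    separate = n_invocations * d_model * d_model
    shared_plus_lora = d_model * d_model + n_invocations * 2 * d_model * rank
    return separate, shared_plus_lora


if __name__ == "__main__":
    # Tiny forward pass: identity shared weight plus a rank-1 update (d=2, r=1).
    x = [[1.0, 2.0]]
    w = [[1.0, 0.0], [0.0, 1.0]]
    a = [[1.0], [0.0]]  # d x r
    b = [[0.0, 1.0]]    # r x d
    print(shared_lora_projection(x, w, a, b))  # [[1.0, 3.0]]

    # Hypothetical sizes, not Zamba2-mini's real configuration.
    print(projection_param_counts(d_model=2048, rank=8, n_invocations=6))  # (25165824, 4390912)
```

The count shows why this design suits a small model: each extra use of the shared layer costs only 2·d·r LoRA parameters instead of a fresh d² matrix, so the per-depth specialization is cheap.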

Open Source Availability and Future Prospects

Zyphra has released Zamba2-mini as open source under the Apache 2.0 license, which permits commercial use, modification, and redistribution. This gives developers and researchers direct access to the model and is likely to encourage further research and development in efficient language models.

Conclusion

Zamba2-mini marks a milestone for small language models in on-device applications, combining a state-of-the-art hybrid architecture, rigorous training, and open-source availability.