Zamba2-2.7B: Revolutionizing Small Language Models

Enhanced Performance and Efficiency

Zyphra’s Zamba2-2.7B is a small language model designed for efficiency as much as for quality. Trained on a large pretraining dataset, it matches the performance of larger models while requiring fewer compute and memory resources, which makes it well suited to on-device applications.

Practical Solutions and Value

The model produces its first token about twice as fast as competing models, which matters for real-time applications such as virtual assistants and chatbots. It also reduces memory overhead by 27%, making it suitable for devices with limited memory. Lower generation latency keeps the rest of each response flowing smoothly, a benefit for customer service bots and educational tools.
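The two latency figures above (time to first token and generation latency) can be measured for any model that streams its output. The following is a minimal sketch, not Zyphra's benchmark code: `measure_stream` and `fake_stream` are hypothetical names, and `fake_stream` only sleeps to imitate a model's prefill and decoding phases.

```python
import time
from typing import Iterable, Iterator, NamedTuple


class StreamTiming(NamedTuple):
    time_to_first_token: float  # seconds from request start to first token
    mean_token_latency: float   # mean seconds between subsequent tokens
    token_count: int


def measure_stream(tokens: Iterable[str]) -> StreamTiming:
    """Time any iterable that yields tokens lazily."""
    start = time.perf_counter()
    first = None
    last = start
    count = 0
    for _ in tokens:
        last = time.perf_counter()
        if first is None:
            first = last  # arrival time of the first token
        count += 1
    if first is None:
        raise ValueError("stream produced no tokens")
    gaps = count - 1
    mean_gap = (last - first) / gaps if gaps else 0.0
    return StreamTiming(first - start, mean_gap, count)


def fake_stream(prefill_s: float, per_token_s: float, n: int) -> Iterator[str]:
    """Hypothetical stand-in for a model: sleeps instead of computing."""
    time.sleep(prefill_s)  # imitates prompt processing before the first token
    for i in range(n):
        if i:
            time.sleep(per_token_s)  # imitates per-token decoding time
        yield f"tok{i}"


if __name__ == "__main__":
    print(measure_stream(fake_stream(prefill_s=0.05, per_token_s=0.01, n=5)))
```

To compare two models, pass each model's real token stream to `measure_stream` with the same prompt and compare the resulting fields; a "twice as fast" first response corresponds to halving `time_to_first_token`.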

Superior Performance and Innovation

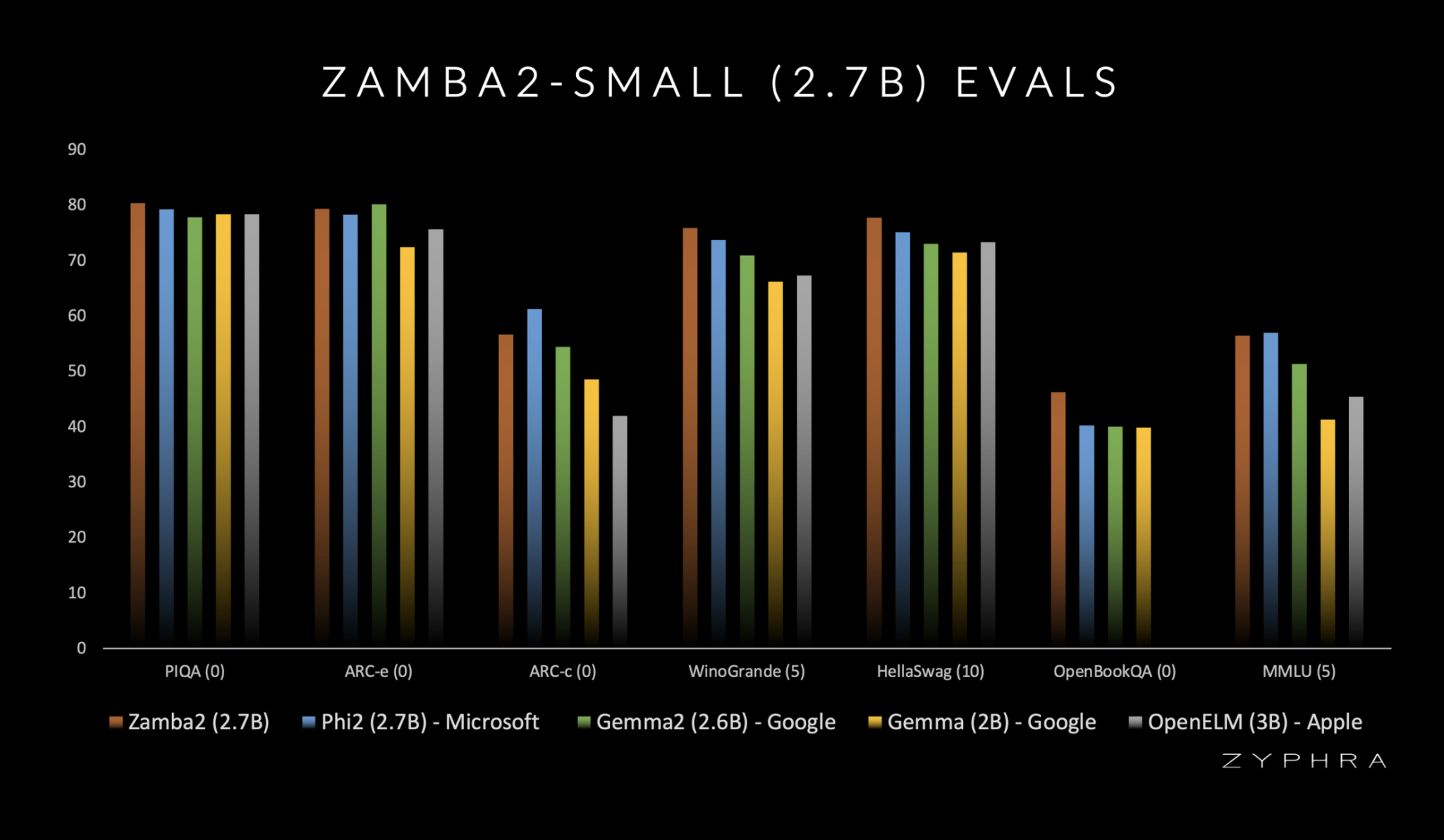

Benchmark comparisons show Zamba2-2.7B performing above what is typically expected of small language models. Its architecture, including an improved interleaved shared attention scheme, contributes to faster, more capable, and more efficient inference.

Driving AI Evolution

Zamba2-2.7B shows that efficiency and performance can be combined in a single small model. It gives developers and businesses a robust way to integrate sophisticated AI capabilities into their products, paving the way for more advanced and responsive AI-driven experiences.