XVERSE-MoE-A36B: Revolutionizing AI Language Modeling

Key Innovations and Practical Solutions

XVERSE Technology has introduced XVERSE-MoE-A36B, a large multilingual language model built on the Mixture-of-Experts (MoE) architecture. The model is notable for its scale, its expert structure, its approach to training data, and its broad language support.

Enhanced Architecture and Multilingual Capabilities

XVERSE-MoE-A36B is a decoder-only Transformer that uses an enhanced version of the Mixture-of-Experts approach. It has 255 billion total parameters, of which only a subset (about 36 billion, as the "A36B" in the name indicates) is activated for any given token. The design combines this selective activation with fine-grained experts and a mix of shared and non-shared experts. Trained on data covering more than 40 languages, the model performs best in Chinese and English and performs well in other languages.
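To make the routing idea concrete, here is a minimal sketch of a Mixture-of-Experts layer that combines always-active shared experts with router-selected (non-shared) experts. It is an illustration only, not XVERSE's implementation: the names `build_toy_layer` and `moe_layer`, the expert counts, the softmax router, and the top-k gating rule are all assumptions chosen for readability, and each "expert" is reduced to a single small linear map.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, rng):
    """Toy expert: one dim x dim linear map (real experts are feed-forward blocks)."""
    w = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]

    return expert


def build_toy_layer(dim, n_shared, n_routed, seed=0):
    """Hypothetical helper: create shared experts, routed experts, and router weights."""
    rng = random.Random(seed)
    shared = [make_expert(dim, rng) for _ in range(n_shared)]
    routed = [make_expert(dim, rng) for _ in range(n_routed)]
    router_w = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(n_routed)]
    return shared, routed, router_w


def moe_layer(x, shared, routed, router_w, top_k):
    """Apply shared experts to every token and only the top_k routed experts."""
    # Router: one score per routed expert, turned into probabilities.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router_w]
    probs = softmax(logits)

    # Selective activation: only the top_k routed experts are evaluated.
    chosen = sorted(range(len(routed)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    out = [0.0] * len(x)
    # Shared experts run for every input, regardless of the router.
    for expert in shared:
        out = [o + y for o, y in zip(out, expert(x))]
    # Routed experts contribute in proportion to their renormalized gate value.
    for i in chosen:
        gate = probs[i] / norm
        out = [o + gate * y for o, y in zip(out, routed[i](x))]
    return out, chosen


if __name__ == "__main__":
    shared, routed, router_w = build_toy_layer(dim=4, n_shared=2, n_routed=8)
    token = [1.0, -0.5, 0.25, 2.0]
    output, active = moe_layer(token, shared, routed, router_w, top_k=2)
    print("active routed experts:", active)
    print("output:", output)
```

In this toy setup only 2 of the 8 routed experts run for a given token, which is why the activated parameter count of an MoE model can be far smaller than its total parameter count.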

Innovative Training Strategy and Computational Efficiency

The training strategy combines dynamic data switching during training with adjustments to the learning rate scheduler, so that the model keeps refining its language understanding as the data changes. To address the computational cost of a model of this size, XVERSE Technology optimized memory consumption and communication overhead, which makes the model practical for real-world applications.
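The article does not specify how the data switching or the scheduler adjustment works, so the following is only one plausible sketch of the general idea. It assumes a cosine learning rate decay with a short, temporary boost after each data switch, plus a step-indexed table of sampling weights. The function names `lr_at` and `data_mix_at`, the source names, and every number are hypothetical.

```python
import math


def lr_at(step, total_steps, base_lr, min_lr, switch_steps, rewarm_steps, boost):
    """Cosine decay with a temporary boost after each data switch (assumed scheme)."""
    # Baseline: cosine decay from base_lr down to min_lr.
    progress = step / max(1, total_steps)
    lr = min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
    # For rewarm_steps after a switch, scale the rate up and fade back linearly.
    for s in switch_steps:
        if s <= step < s + rewarm_steps:
            frac = 1.0 - (step - s) / rewarm_steps
            lr *= 1.0 + (boost - 1.0) * frac
    return lr


def data_mix_at(step, schedule):
    """Return the sampling weights in effect at `step`.

    `schedule` is a list of (start_step, {source_name: weight}) sorted by start_step.
    """
    current = schedule[0][1]
    for start, mix in schedule:
        if step >= start:
            current = mix
    return current


if __name__ == "__main__":
    # Hypothetical schedule: new high-quality data is introduced at step 500.
    schedule = [
        (0, {"web": 0.8, "books": 0.2}),
        (500, {"web": 0.5, "books": 0.2, "curated": 0.3}),
    ]
    for step in (0, 499, 500, 525, 550, 999):
        lr = lr_at(step, 1000, 1e-3, 1e-5, switch_steps=[500], rewarm_steps=50, boost=2.0)
        print(step, round(lr, 6), data_mix_at(step, schedule))
```

The design choice illustrated here is that a higher learning rate right after a switch lets the model adapt quickly to the newly introduced data, after which the schedule returns to its normal decay.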

Performance and Benchmarking

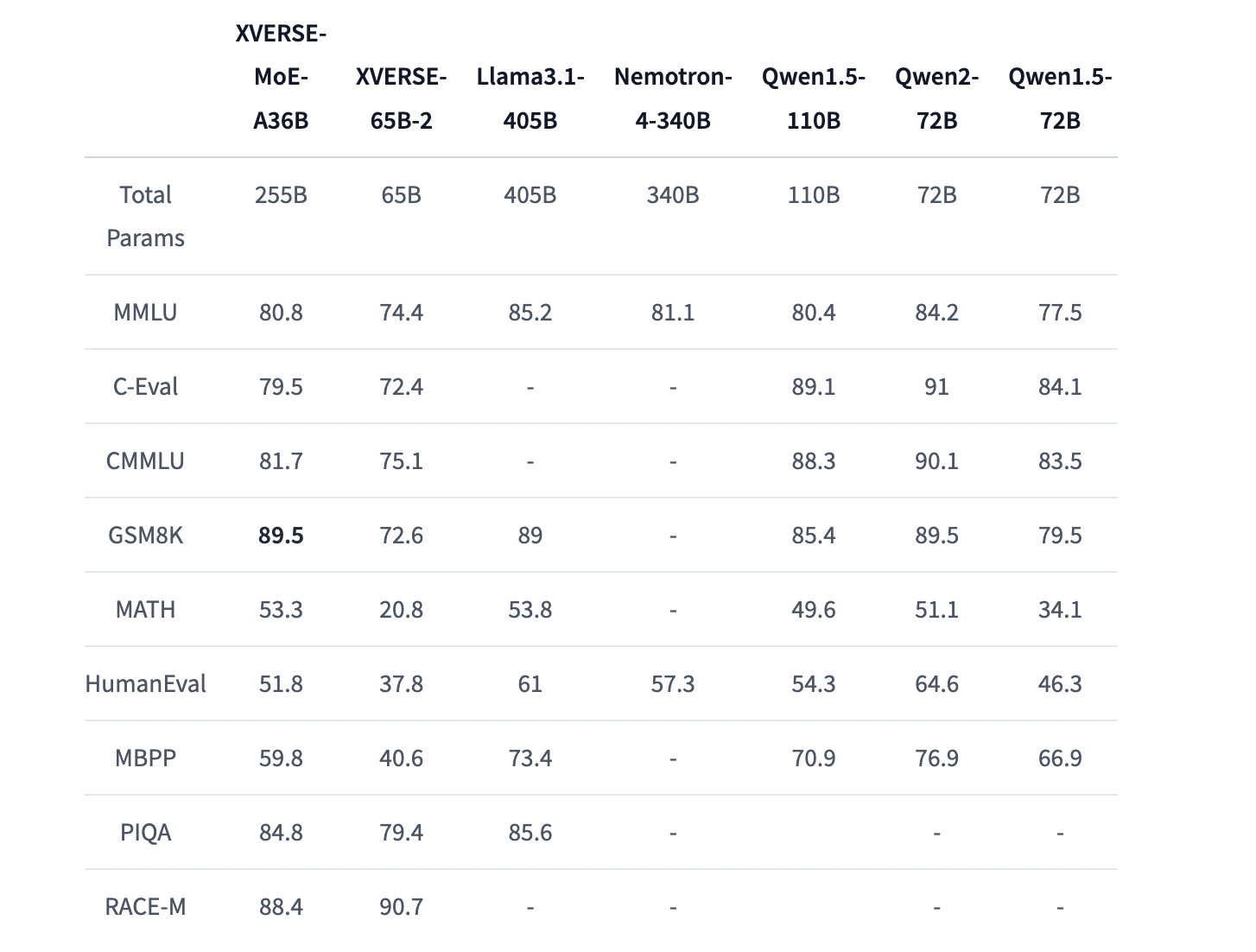

According to the reported results, testing across a range of benchmarks shows the model consistently outperforming other models of similar scale on tasks ranging from general language understanding to specialized reasoning.

Applications and Responsible Use

The XVERSE-MoE-A36B model is designed for various applications, particularly in multilingual communication and specialized domains. XVERSE Technology emphasizes responsible use and ethical considerations, urging users to conduct thorough safety tests before deploying the model in sensitive applications.

Conclusion

The release of XVERSE-MoE-A36B marks a significant milestone in AI language modeling, combining architectural and training innovations with broad multilingual support. While it holds promise for AI-driven communication and problem solving, ethical and responsible use remains paramount.