Practical Solutions and Value of Windows Agent Arena (WAA)

Enhancing Human Productivity with AI Agents

AI agents powered by large language models can automate tasks within the Windows operating system, which makes them valuable for both personal and professional productivity.

Challenges in Evaluating AI Agent Performance

Existing benchmarks fail to capture the complexity of real-world tasks on platforms like Windows, and running evaluations at scale is slow and inefficient.

Introducing WindowsAgentArena Benchmark

WindowsAgentArena is a comprehensive benchmark designed for evaluating AI agents in a Windows OS environment. It leverages cloud infrastructure to parallelize evaluations, allowing for rapid and realistic testing of agent behavior.
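The idea of parallelizing evaluations can be illustrated with a minimal sketch. This is not the benchmark's actual runner: `evaluate_in_parallel` and the `run_task` callback are hypothetical names, and a local thread pool stands in for the cloud workers mentioned above.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def evaluate_in_parallel(
    task_ids: Iterable[str],
    run_task: Callable[[str], bool],
    max_workers: int = 4,
) -> Dict[str, bool]:
    """Run every task through `run_task` concurrently and collect the outcomes.

    `run_task` is a hypothetical callback. In a real setup it would start an
    isolated Windows environment, let the agent act, and report success.
    """
    ids = list(task_ids)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so outcomes line up with ids.
        outcomes = list(pool.map(run_task, ids))
    return dict(zip(ids, outcomes))


if __name__ == "__main__":
    # Toy runner for illustration only: "succeeds" on task names ending in "ok".
    demo = evaluate_in_parallel(
        ["notepad-ok", "settings-fail"], lambda t: t.endswith("ok")
    )
    print(demo)
```

Because each task is independent of the others, total wall-clock time is bounded by the slowest task per worker rather than by the sum of all tasks.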

Diverse Tasks and Innovative Evaluation Metrics

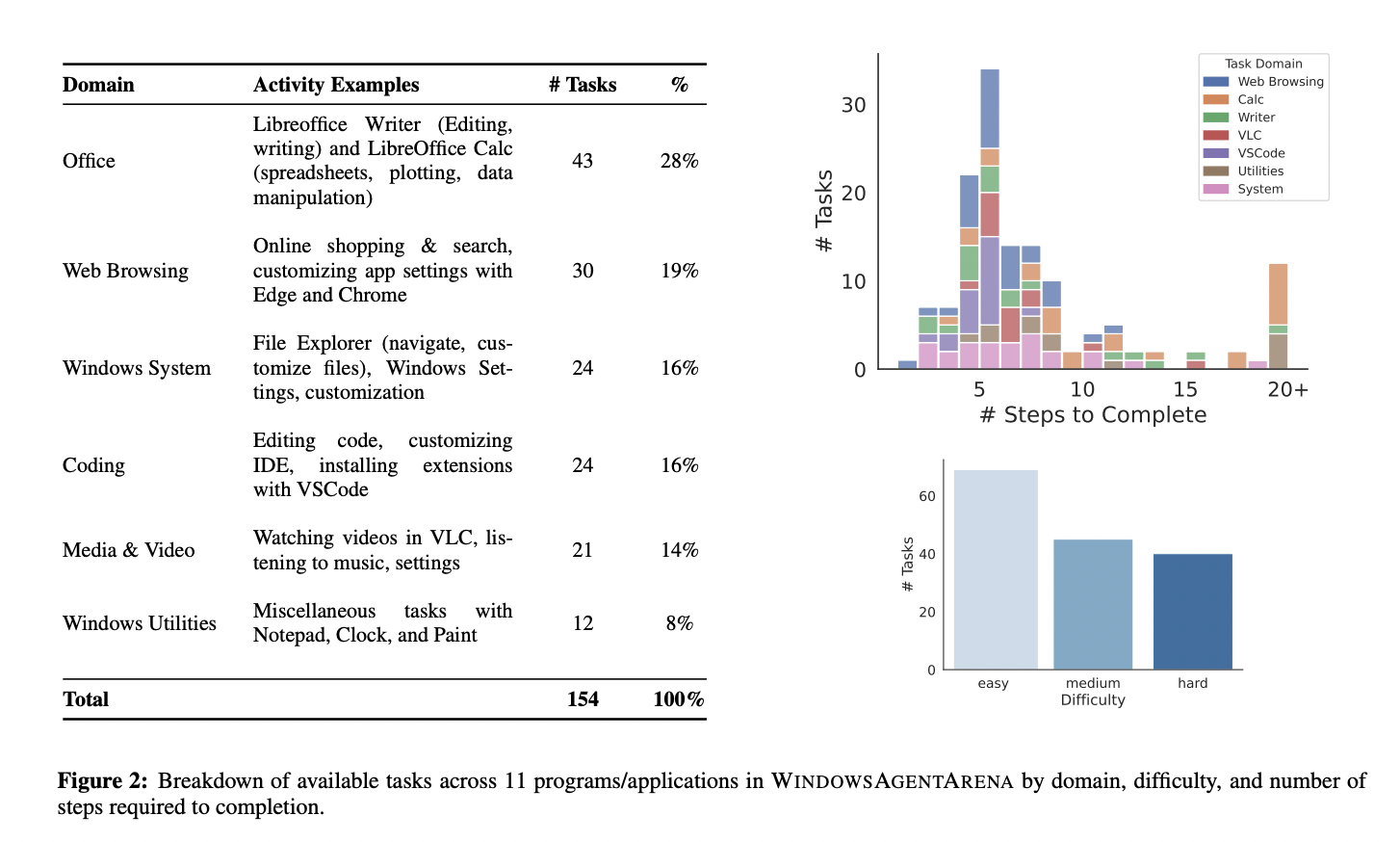

The benchmark suite includes 154 diverse tasks mirroring everyday Windows workflows, with an evaluation criterion that rewards agents for completing the task. It runs inside Docker containers, which isolates test runs and makes them easy to scale.
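A completion-based reward of this kind can be sketched as follows. This is an illustrative assumption, not the benchmark's real scoring code: the `Task` class, the `check` callback, and the dictionary used to represent the final system state are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    """Hypothetical task record: a name plus a check on the final state."""
    name: str
    check: Callable[[Dict[str, str]], bool]


def reward(task: Task, final_state: Dict[str, str]) -> float:
    """Return 1.0 if the final state satisfies the task's check, else 0.0."""
    return 1.0 if task.check(final_state) else 0.0


def success_rate(rewards: List[float]) -> float:
    """Average reward across tasks; 0.0 when no tasks were run."""
    return sum(rewards) / len(rewards) if rewards else 0.0


if __name__ == "__main__":
    tasks = [
        Task("enable dark mode", lambda s: s.get("theme") == "dark"),
        Task("rename file", lambda s: s.get("filename") == "report.txt"),
    ]
    # Made-up final state after an agent run, for illustration only.
    state = {"theme": "dark", "filename": "draft.txt"}
    print(success_rate([reward(t, state) for t in tasks]))
```

Scoring the final state rather than the exact sequence of actions means an agent is not penalized for reaching the goal by a different route.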

Performance of Navi AI Agent

The Navi AI agent achieved a success rate of 19.5% on the WindowsAgentArena benchmark, which leaves substantial room for improvement as AI technologies evolve. Navi also demonstrated strong performance on a second, web-based benchmark, Mind2Web.

Advanced Perception Techniques

Navi benefits from visual markers and screen parsing techniques, such as Set-of-Marks (SoM) and UI Automation (UIA) tree parsing. These let the agent refer to specific on-screen elements, which makes its interactions more precise and paves the way for more capable and efficient AI agents.
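The general Set-of-Marks idea can be sketched in a few lines: each detected UI element gets a numeric mark, the model answers with a mark ID, and that ID is resolved back to screen coordinates. This is a simplified illustration, not Navi's implementation. `UIElement`, `assign_marks`, `describe_marks`, and `click_point` are hypothetical names, and the bounding boxes are assumed to come from some upstream parser such as a UIA tree walk.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class UIElement:
    """Hypothetical parsed element: a label and a pixel bounding box."""
    name: str
    box: Tuple[int, int, int, int]  # (left, top, right, bottom)


def assign_marks(elements: List[UIElement]) -> Dict[int, UIElement]:
    """Give each element a numeric mark, starting at 1."""
    return {i: el for i, el in enumerate(elements, start=1)}


def describe_marks(marks: Dict[int, UIElement]) -> str:
    """Render the marks as text that could accompany an annotated screenshot."""
    return "\n".join(f"[{i}] {el.name}" for i, el in marks.items())


def click_point(marks: Dict[int, UIElement], mark_id: int) -> Tuple[int, int]:
    """Resolve a mark ID chosen by the model to the center of its box."""
    left, top, right, bottom = marks[mark_id].box
    return ((left + right) // 2, (top + bottom) // 2)


if __name__ == "__main__":
    marks = assign_marks([
        UIElement("Start button", (0, 0, 40, 40)),
        UIElement("Search box", (50, 0, 250, 40)),
    ])
    print(describe_marks(marks))
    print(click_point(marks, 2))
```

Letting the model pick a mark ID instead of raw pixel coordinates moves the hard part, precise localization, from the language model to the parser.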

Evolve Your Company with AI

WindowsAgentArena offers a scalable, reproducible, and realistic testing platform for AI agents in the Windows OS ecosystem, providing researchers and developers with the tools to push the boundaries of AI agent development.