Challenges in Assessing GPU Performance for Large Language Models (LLMs)

Reevaluating Performance Metrics for LLM Training and Inference Tasks

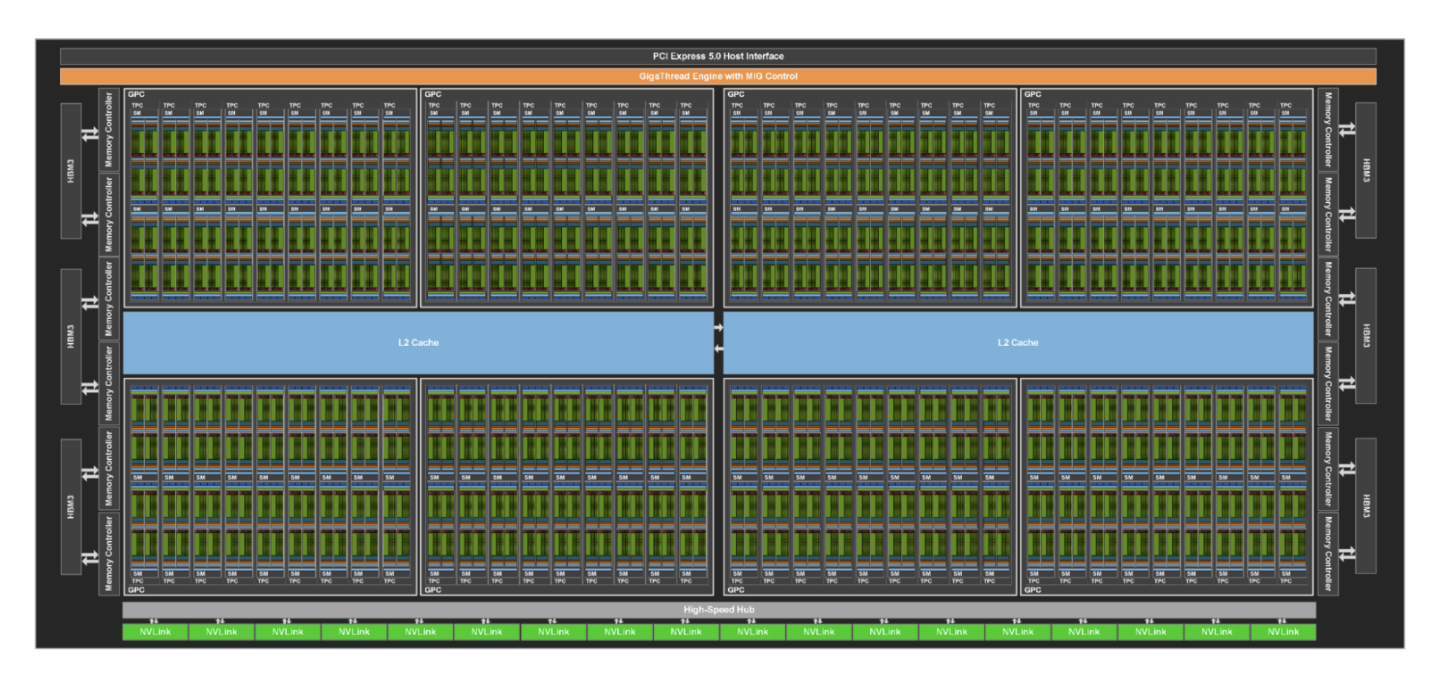

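Large Language Models (LLMs) have made efficient GPU utilization a central concern in machine learning. However, accurately assessing GPU performance remains difficult. The most commonly used metric, GPU Utilization (as reported by tools such as `nvidia-smi`), only measures the fraction of time during which at least one kernel is running on the GPU. It says nothing about how much of the GPU's compute capacity that kernel actually uses, so it is an unreliable measure of computational efficiency. This has prompted researchers to look for more accurate ways to measure and optimize GPU performance for LLM workloads.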
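A toy kernel trace makes the gap concrete. This minimal sketch assumes a single CUDA stream with non-overlapping kernels and 132 SMs (as on an H100 SXM); `Kernel`, `gpu_utilization`, and `sm_efficiency` are hypothetical helpers, not a profiler API. `gpu_utilization` only asks whether any kernel was running, in the spirit of the time-based metric, while `sm_efficiency` weights each kernel by the number of SMs it kept busy.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Kernel:
    """One kernel launch on a single stream (hypothetical trace record)."""
    start: float   # seconds since the start of the sampling window
    end: float     # seconds since the start of the sampling window
    sms_used: int  # number of SMs the kernel kept busy


def _check(kernels: List[Kernel], window: float) -> None:
    # The sketch assumes serialized, non-overlapping kernels inside [0, window].
    ordered = sorted(kernels, key=lambda k: k.start)
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.start < prev.end:
            raise ValueError("kernels overlap; this sketch assumes one serialized stream")
    if any(k.start < 0 or k.end > window or k.end < k.start for k in kernels):
        raise ValueError("kernel lies outside the sampling window")


def gpu_utilization(kernels: List[Kernel], window: float) -> float:
    """Fraction of the window in which *any* kernel was running (time-based)."""
    _check(kernels, window)
    return sum(k.end - k.start for k in kernels) / window


def sm_efficiency(kernels: List[Kernel], window: float, total_sms: int) -> float:
    """Average fraction of SMs that were busy over the window."""
    _check(kernels, window)
    sm_seconds = sum((k.end - k.start) * min(k.sms_used, total_sms) for k in kernels)
    return sm_seconds / (window * total_sms)


if __name__ == "__main__":
    TOTAL_SMS = 132  # assumption: H100 SXM
    # A tiny kernel that occupies one SM for the whole second...
    busy_but_tiny = [Kernel(0.0, 1.0, sms_used=1)]
    # ...versus a kernel that fills every SM for half the second.
    short_but_wide = [Kernel(0.0, 0.5, sms_used=TOTAL_SMS)]
    for name, trace in [("busy_but_tiny", busy_but_tiny), ("short_but_wide", short_but_wide)]:
        print(f"{name}: util={gpu_utilization(trace, 1.0):.0%}, "
              f"sm_eff={sm_efficiency(trace, 1.0, TOTAL_SMS):.1%}")
```

Run as a script, it prints `busy_but_tiny: util=100%, sm_eff=0.8%` and `short_but_wide: util=50%, sm_eff=50.0%`: the trace with the higher "utilization" does far less work.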

Introducing Model FLOPS Utilization (MFU) for a More Accurate Representation of GPU Performance

Researchers have turned to alternative metrics such as Model FLOPS Utilization (MFU): the ratio of the floating-point operations per second (FLOPS) a model actually achieves to the GPU's theoretical peak FLOPS. MFU is more involved to compute than GPU utilization, because it requires an estimate of how many FLOPs each training step performs, but it has revealed large discrepancies between reported GPU utilization and actual computational efficiency, highlighting the need for a deeper understanding of GPU performance metrics.
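As a rough sketch, MFU for training a dense decoder-only transformer can be estimated from measured token throughput, assuming the common rule of thumb of about 6N training FLOPs per token for a model with N parameters. The function names and the example numbers (1B parameters, 8 GPUs, a round 1 PFLOPS peak per GPU, 200k tokens/s) are hypothetical; in practice the peak should be the vendor figure for the precision actually used, such as dense BF16.

```python
def training_flops_per_token(n_params: float) -> float:
    """Approximate training FLOPs per token for a dense decoder-only transformer.

    Uses the ~6N rule of thumb (about 2N for the forward pass and 4N for the
    backward pass). It ignores attention-score FLOPs, so it slightly
    underestimates the work for long sequences.
    """
    return 6.0 * n_params


def model_flops_utilization(tokens_per_second: float, n_params: float,
                            num_gpus: int, peak_flops_per_gpu: float) -> float:
    """MFU = achieved model FLOPS / theoretical peak FLOPS of all GPUs."""
    achieved_flops = tokens_per_second * training_flops_per_token(n_params)
    return achieved_flops / (num_gpus * peak_flops_per_gpu)


if __name__ == "__main__":
    # Hypothetical run: 1B-parameter model, 8 GPUs at 1 PFLOPS peak each, 200k tokens/s.
    mfu = model_flops_utilization(tokens_per_second=2e5, n_params=1e9,
                                  num_gpus=8, peak_flops_per_gpu=1e15)
    print(f"MFU = {mfu:.1%}")  # MFU = 15.0%
```

Because MFU is computed from useful model FLOPs rather than from whether a kernel happens to be running, it cannot be inflated by kernels that keep the GPU busy while using little of it.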

Practical Solutions and Value

Optimizing LLM Training Efficiency

Trainy AI researchers began optimizing LLM training efficiency by applying the performance-tuning techniques recommended for PyTorch: tuning dataloader parameters, using mixed precision training, using fused optimizers, and running on specialized instances and networking built for training. These steps brought GPU utilization to 100%. Model FLOPS Utilization, however, was still only about 20%, showing that a fully "utilized" GPU can still perform far less useful computation than it is capable of.
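Dataloader tuning (for example raising `num_workers` and `prefetch_factor`, or enabling `pin_memory`, on PyTorch's `torch.utils.data.DataLoader`) is about keeping the GPU fed: the batch for step n+1 is prepared while step n runs. The standard-library sketch below illustrates only that overlap, using one background thread and a bounded queue. `prefetch` and `slow_loader` are hypothetical names, and this is not how DataLoader is implemented (it uses worker processes).

```python
import queue
import threading
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def prefetch(batches: Iterable[T], depth: int = 2) -> Iterator[T]:
    """Yield items from `batches` while a background thread loads up to `depth` ahead."""
    if depth < 1:
        raise ValueError("depth must be >= 1")
    buf: "queue.Queue[tuple]" = queue.Queue(maxsize=depth)  # bounded: caps memory use

    def producer() -> None:
        try:
            for item in batches:
                buf.put((False, item))   # blocks once `depth` batches are waiting
        except Exception as exc:          # forward loader errors to the consumer
            buf.put((True, exc))
            return
        buf.put((True, None))             # end-of-stream marker

    threading.Thread(target=producer, daemon=True).start()
    while True:
        done, payload = buf.get()
        if done:
            if payload is not None:
                raise payload
            return
        yield payload


def slow_loader(n: int) -> Iterator[int]:
    for i in range(n):
        time.sleep(0.02)  # stand-in for disk reads, decoding, augmentation
        yield i


if __name__ == "__main__":
    start = time.perf_counter()
    for batch in prefetch(slow_loader(10), depth=2):
        time.sleep(0.02)  # stand-in for a training step on the GPU
    # Loading overlaps with compute, so this should finish well under the
    # ~0.4 s a strictly serial load-then-train loop would need.
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

Note that this kind of tuning removes idle gaps between kernels, which is exactly what the time-based GPU utilization metric rewards; it does not by itself make each kernel use the GPU more efficiently.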

Profiling and Fusing Kernels for Improved Performance

To find the remaining bottlenecks, the researchers used the PyTorch Profiler to analyze the training loop, which revealed opportunities for kernel fusion. Fusing layers within the transformer block into fused kernels delivered a 4x speedup in training time, raised Model FLOPS Utilization from 20% to 38%, and reduced memory usage.
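Why fusion helps can be illustrated with a simplified cost model, assuming purely memory-bound element-wise operations and no on-chip caching between kernels: every unfused kernel reads its input from GPU memory and writes a full intermediate back, while a fused kernel reads once and writes once. The pure-Python functions below are hypothetical stand-ins for GPU kernels, not the fused kernels Trainy used (the article does not detail them). They also show where the memory savings come from: the fused version never materializes the intermediates `a` and `b`.

```python
from typing import List


def unfused(x: List[float]) -> List[float]:
    # Three separate "kernels": each pass reads a full tensor and writes a full intermediate.
    a = [v * 0.5 for v in x]          # scale
    b = [v + 1.0 for v in a]          # shift
    return [max(v, 0.0) for v in b]   # ReLU


def fused(x: List[float]) -> List[float]:
    # One "kernel": each element is read once, all three ops are applied, the result written once.
    return [max(v * 0.5 + 1.0, 0.0) for v in x]


def memory_traffic_bytes(n_elems: int, n_ops: int, bytes_per_elem: int, is_fused: bool) -> int:
    """Bytes moved to/from GPU memory under the simplified model (one read + one write per pass)."""
    passes = 1 if is_fused else n_ops
    return 2 * passes * n_elems * bytes_per_elem


if __name__ == "__main__":
    x = [-4.0, -1.0, 0.0, 2.0]
    assert fused(x) == unfused(x)  # same math, fewer passes over memory
    n = 4096 * 4096  # hypothetical activation size in BF16 (2 bytes per element)
    before = memory_traffic_bytes(n, n_ops=3, bytes_per_elem=2, is_fused=False)
    after = memory_traffic_bytes(n, n_ops=3, bytes_per_elem=2, is_fused=True)
    print(f"unfused: {before / 2**20:.0f} MiB, fused: {after / 2**20:.0f} MiB")  # 192 MiB vs 64 MiB
```

For memory-bound operations, runtime tracks bytes moved roughly, so cutting traffic threefold can approach a threefold speedup for that chain; the model is too simple to explain the article's end-to-end 4x figure on its own.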

Recommendations for Accurate Performance Measurement

The researchers recommend tracking SM efficiency alongside GPU utilization on GPU clusters. SM efficiency (also called SM activity) measures the fraction of the GPU's streaming multiprocessors (SMs) that are active, so a workload can report 100% GPU utilization while leaving most SMs idle. Looking beyond GPU utilization in this way helps pinpoint optimization opportunities in LLM training.
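A cluster-side check along these lines might look like the sketch below. It assumes per-GPU samples of GPU utilization and SM activity (both as fractions) have already been collected, for example from NVIDIA DCGM, which exposes fields such as `DCGM_FI_DEV_GPU_UTIL` (a percentage) and `DCGM_FI_PROF_SM_ACTIVE` (a ratio); check your exporter's field list. `Sample`, `flag_underused`, and the 90% / 50% thresholds are hypothetical choices, not recommendations from the article.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Sample:
    gpu_util: float   # 0..1: fraction of the interval in which any kernel ran
    sm_active: float  # 0..1: average fraction of SMs with work assigned


def flag_underused(samples_by_gpu: Dict[str, List[Sample]],
                   util_min: float = 0.9, sm_max: float = 0.5) -> List[str]:
    """Return GPUs that look busy by GPU utilization but leave most SMs idle.

    These are the candidates worth profiling (e.g. with the PyTorch Profiler)
    for kernel fusion or other optimizations.
    """
    flagged = []
    for gpu, samples in sorted(samples_by_gpu.items()):
        if not samples:
            continue
        util = mean(s.gpu_util for s in samples)
        sm = mean(s.sm_active for s in samples)
        if util >= util_min and sm < sm_max:
            flagged.append(gpu)
    return flagged


if __name__ == "__main__":
    cluster = {
        "node0/gpu0": [Sample(1.00, 0.20), Sample(0.98, 0.25)],  # busy, mostly idle SMs
        "node0/gpu1": [Sample(0.99, 0.80)],                      # busy and well used
        "node1/gpu0": [Sample(0.40, 0.10)],                      # simply under-loaded
    }
    print(flag_underused(cluster))  # ['node0/gpu0']
```

Requiring both conditions separates the interesting case (high utilization, low SM activity) from GPUs that are merely under-loaded, which a utilization dashboard already shows.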
