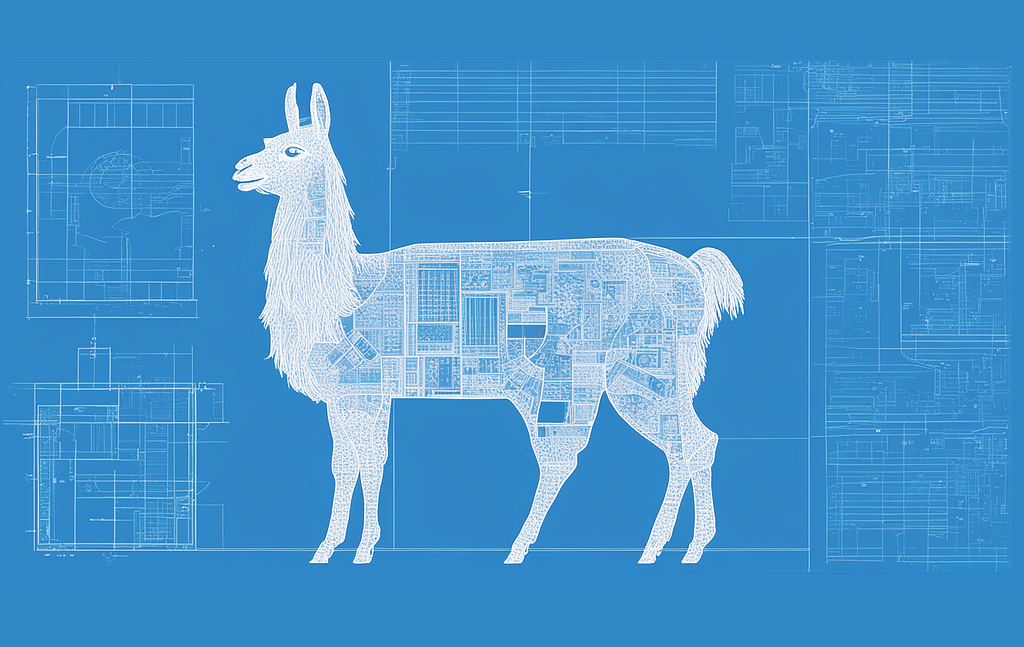

The article discusses prompt engineering techniques and introduces the concept of prompt architecture for interacting with Large Language Models (LLMs). It highlights the importance of specific prompts and explores different prompt architectures such as role prompting, chain of thought prompting, self-consistency prompting, step-back prompting, and chain of verification prompting. The article also suggests choosing the right prompt architecture based on the desired application and provides insights into the future of interacting with LLMs.

What is Prompt Architecture in LLMs?

Prompt architecture is an approach that extends the capabilities of Large Language Models (LLMs) by combining several prompt engineering methods into a larger structure, often with multiple prompts and model calls per task. It lets LLMs handle tasks that were out of reach for a single, conventionally engineered prompt.

Why is Prompt Architecture Important?

Prompt engineering teaches us that more specific prompts lead to better results. By including more details and context in the prompts, we can guide LLMs to provide more accurate and effective answers.

With in-context learning, an LLM can pick up a new task from a task description placed in its context window. This zero-shot approach removes the need to collect thousands of labeled examples to fine-tune a language model for each new task.
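From the caller's side, zero-shot in-context learning amounts to stating the task in plain language inside the prompt, with no labeled examples and no fine-tuning. The sketch below is a minimal illustration under that assumption; the function name `build_zero_shot_prompt` and the sentiment task are hypothetical, and the resulting string would be sent to whichever LLM client you use.

```python
def build_zero_shot_prompt(task_description: str, task_input: str) -> str:
    """Hypothetical helper: describe the task in text; no labeled examples are included."""
    return (
        f"{task_description}\n\n"
        f"Input: {task_input}\n"
        "Output:"  # the model is expected to continue from here
    )


prompt = build_zero_shot_prompt(
    "Classify the sentiment of the input as positive or negative.",
    "The battery lasts all day and charges quickly.",
)
print(prompt)
```

Adding a handful of worked input/output pairs before the final input would turn this into few-shot prompting, which is still in-context learning but no longer zero-shot.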

Role prompting assigns the AI system a role at the start of the prompt. This additional context helps the model interpret the request and leads to more effective responses.
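A minimal sketch of role prompting, assuming the common chat format in which a request is a list of messages tagged `system` or `user` (many chat-completion APIs use this shape, but field names vary by provider). The helper `build_role_prompt` and the accountant role are hypothetical examples; the role goes first so it frames the rest of the conversation.

```python
from typing import Dict, List


def build_role_prompt(role: str, user_request: str) -> List[Dict[str, str]]:
    # Hypothetical helper: the role is placed first, as a "system" message,
    # so the model reads it before the actual request.
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": user_request},
    ]


messages = build_role_prompt(
    "an experienced tax accountant who explains rules in plain language",
    "Can I deduct the cost of a home office?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```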

Chain of Thought prompting is another technique that helps LLMs with logical reasoning and multi-step problem solving. It prompts the model to show its work and reason through a problem step by step, which improves performance on tasks such as math word problems and logic puzzles.
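Chain of Thought prompting usually needs two pieces of plumbing: an instruction asking the model to reason step by step, and a way to pull the final answer out of the reasoning. The sketch below assumes the model is told to end with a line starting `Answer:`; that convention, the helper names, and the hand-written `sample_completion` (standing in for real model output) are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical instruction appended to every question.
COT_SUFFIX = "Let's think step by step, then give the final answer on a line starting with 'Answer:'."


def build_cot_prompt(question: str) -> str:
    return f"Q: {question}\n{COT_SUFFIX}"


def extract_answer(completion: str) -> Optional[str]:
    # Keep only the last "Answer:" line; the reasoning before it is discarded.
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


# Hand-written text standing in for a model's step-by-step output.
sample_completion = """There are 3 boxes with 4 apples each, so 3 * 4 = 12 apples.
Then 5 apples are eaten, so 12 - 5 = 7.
Answer: 7"""

print(build_cot_prompt("3 boxes hold 4 apples each; 5 are eaten. How many are left?"))
print(extract_answer(sample_completion))
```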

Prompt architecture goes beyond single-prompt engineering by combining multiple prompts and inference phases. It includes techniques such as self-consistency prompting, step-back prompting, and chain of verification prompting, which improve both the reasoning and the factual reliability of LLMs.
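Self-consistency prompting samples several chain-of-thought completions for the same question (with sampling temperature above zero) and keeps the final answer that appears most often. The sketch below shows that voting logic under stated assumptions: completions end with an `Answer:` line, `fake_sample` is a hypothetical stand-in for a real sampled model call, and five samples is an arbitrary choice.

```python
import itertools
import re
from collections import Counter
from typing import Callable, Optional


def extract_answer(completion: str) -> Optional[str]:
    matches = re.findall(r"^Answer:\s*(.+)$", completion, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


def self_consistency(prompt: str, sample: Callable[[str], str], n: int = 5) -> Optional[str]:
    """Sample n reasoning paths and return the most common final answer."""
    answers = [extract_answer(sample(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)  # ignore paths with no answer
    if not votes:
        return None
    return votes.most_common(1)[0][0]


# Hypothetical stand-in for a model sampled at temperature > 0:
# it cycles through canned completions, one of which reasons incorrectly.
_canned = itertools.cycle([
    "3 * 4 = 12, 12 - 5 = 7\nAnswer: 7",
    "3 + 4 = 7, 7 - 5 = 2\nAnswer: 2",
    "12 apples minus 5 eaten leaves 7\nAnswer: 7",
])


def fake_sample(prompt: str) -> str:
    return next(_canned)


print(self_consistency("3 boxes hold 4 apples each; 5 are eaten. How many are left?", fake_sample))
```

The occasional faulty reasoning path is outvoted by the paths that agree, which is the intuition behind the technique; the price is n model calls per question instead of one.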

Practical Applications of Prompt Architecture

Prompt architecture enables complex applications that a single prompt cannot support. For example, ReAct prompting lets an LLM interact with its environment by interleaving reasoning steps (thoughts) with actions such as tool calls, which makes it a suitable basis for autonomous agents.
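The control loop behind ReAct can be sketched as follows: the model writes a thought plus either an action or a final answer, the program runs the requested tool, and the result is appended to the transcript as an observation before the model is called again. The `Thought:` / `Action: tool[input]` / `Observation:` text format is similar in spirit to ReAct examples but is an assumption here, as are the scripted model `scripted_llm` and the toy `calculator` tool.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)
FINAL_RE = re.compile(r"^Final Answer:\s*(.+)$", re.MULTILINE)


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # model emits a Thought plus an Action or a Final Answer
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError("model produced neither an Action nor a Final Answer")
        name, arg = action.group(1), action.group(2)
        observation = tools[name](arg)  # act, then feed the result back to the model
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


def scripted_llm(transcript: str) -> str:
    # Hypothetical model: asks for a tool first, answers once it has an observation.
    if "Observation:" not in transcript:
        return "Thought: I need to compute the product.\nAction: calculator[23 * 17]"
    return "Thought: The tool returned the product.\nFinal Answer: 391"


def calculator(expression: str) -> str:
    # Toy tool: only handles "a * b", which avoids calling eval() on model output.
    left, right = expression.split("*")
    return str(int(left) * int(right))


print(react("What is 23 times 17?", scripted_llm, {"calculator": calculator}))
```

The `max_steps` cap is a simple guard against an agent that never reaches a final answer.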

Choosing the Right Prompt Architecture

For conversational chatbots, simpler prompt engineering techniques are often sufficient. However, if more advanced reasoning capabilities are required, methods like step-back prompting or self-consistency prompting can be used.
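Step-back prompting splits one question into two model calls: first the model is asked for the general principle or concept behind the question, then it answers the original question with that principle in its context. A minimal sketch, assuming a plain text-in/text-out model function; the prompt wording and the stub `recording_llm` (which logs each prompt and returns canned text) are hypothetical.

```python
from typing import Callable, List


def step_back_answer(question: str, llm: Callable[[str], str]) -> str:
    # Step 1 (abstraction): ask for the general principle behind the question.
    principle = llm(
        "What general principle or concept is needed to answer this question?\n"
        f"Question: {question}"
    )
    # Step 2 (reasoning): answer the original question, grounded in that principle.
    return llm(
        f"Principle: {principle}\n"
        f"Using the principle above, answer the question.\nQuestion: {question}"
    )


calls: List[str] = []


def recording_llm(prompt: str) -> str:
    # Hypothetical stub: records prompts and returns canned responses.
    calls.append(prompt)
    if prompt.startswith("What general principle"):
        return "Ideal gas law: PV = nRT"
    return "The pressure doubles."


answer = step_back_answer(
    "What happens to the pressure of an ideal gas if its temperature doubles at constant volume?",
    recording_llm,
)
print(answer)
print(len(calls))
```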

If reducing hallucinations is a priority, techniques such as chain of verification prompting or retrieval augmented generation (RAG) can help. RAG is particularly useful in real-world applications, because it grounds the model's answers in retrieved documents.
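Chain of verification works in four stages: draft an answer, plan verification questions about the draft's claims, answer those questions independently (without the draft in view, so its mistakes are not simply copied), and write a final answer revised against the verified facts. The sketch below wires those stages together; the prompt wording and the stub `toy_llm`, which deliberately drafts a wrong year, are hypothetical.

```python
from typing import Callable, List


def chain_of_verification(query: str, llm: Callable[[str], str]) -> str:
    # 1. Draft a baseline answer.
    draft = llm(f"Answer the question.\nQuestion: {query}")
    # 2. Plan verification questions that fact-check the draft, one per line.
    plan = llm("List questions that would verify the facts in this answer, one per line.\n"
               f"Answer: {draft}")
    questions: List[str] = [q.strip() for q in plan.splitlines() if q.strip()]
    # 3. Answer each question independently; the draft is not shown to the model.
    checks: List[str] = []
    for q in questions:
        check_answer = llm("Answer concisely.\nQuestion: " + q)
        checks.append(f"Q: {q}\nA: {check_answer}")
    # 4. Produce the final answer, revised in light of the verification results.
    evidence = "\n".join(checks)
    return llm("Revise the draft so it is consistent with the verified facts.\n"
               f"Question: {query}\nDraft: {draft}\nVerified facts:\n{evidence}")


def toy_llm(prompt: str) -> str:
    # Hypothetical stub: the draft contains an error (1899) that verification catches.
    if prompt.startswith("Answer the question."):
        return "The Eiffel Tower is in Paris and was completed in 1899."
    if prompt.startswith("List questions"):
        return "Where is the Eiffel Tower?\nWhen was the Eiffel Tower completed?"
    if prompt.startswith("Answer concisely."):
        return "Paris" if "Where" in prompt else "1889"
    return "The Eiffel Tower is in Paris and was completed in 1889."


result = chain_of_verification("Where is the Eiffel Tower and when was it completed?", toy_llm)
print(result)
```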

It’s important to weigh the cost and complexity of advanced prompt architectures, since they may require more tokens, more model calls, and more resources per response.
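A rough way to compare costs is to count model calls per query and multiply by the tokens each call consumes. The sketch below assumes every call has the same prompt and completion size, which understates multi-stage methods whose later prompts carry earlier outputs; the call counts follow the usual structure of each technique, while `n_samples`, `k_questions`, and the 300/200 token sizes are hypothetical settings.

```python
def estimate_tokens(num_calls: int, prompt_tokens: int, completion_tokens: int) -> int:
    """Rough total tokens, assuming every call has the same prompt and completion size."""
    return num_calls * (prompt_tokens + completion_tokens)


n_samples = 5    # hypothetical: self-consistency sampled reasoning paths
k_questions = 3  # hypothetical: chain of verification questions

calls_per_query = {
    "single prompt": 1,
    "step-back": 2,                             # abstraction call + reasoning call
    "self-consistency": n_samples,              # one call per sampled path
    "chain of verification": 3 + k_questions,   # draft + plan + k checks + final
}

for name, calls in calls_per_query.items():
    # Hypothetical sizes: 300 prompt tokens and 200 completion tokens per call.
    print(f"{name}: {calls} calls, ~{estimate_tokens(calls, 300, 200)} tokens")
```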

The Future of Interacting with LLMs

Prompt architecture opens up new possibilities for LLMs and allows us to understand their capabilities better. As models continue to evolve, prompts will become even more complex, enabling more advanced use cases.

Experience and intuition play a crucial role in determining which prompt architecture techniques work best in different situations.

Overall, prompt architecture is a powerful tool for leveraging the capabilities of LLMs and redefining the way we work with AI.

For more information on AI solutions and how they can benefit your company, contact us at hello@itinai.com or visit our website at itinai.com.
