Understanding the Importance of Databases

Databases store and retrieve structured data and underpin applications ranging from business intelligence to research. Querying them typically requires SQL, which can be complex and whose syntax varies between database systems. Large language models (LLMs) can generate queries automatically, but they often struggle to translate natural language into accurate SQL, in part because of these dialect differences.

Emerging Solutions for Better Database Queries

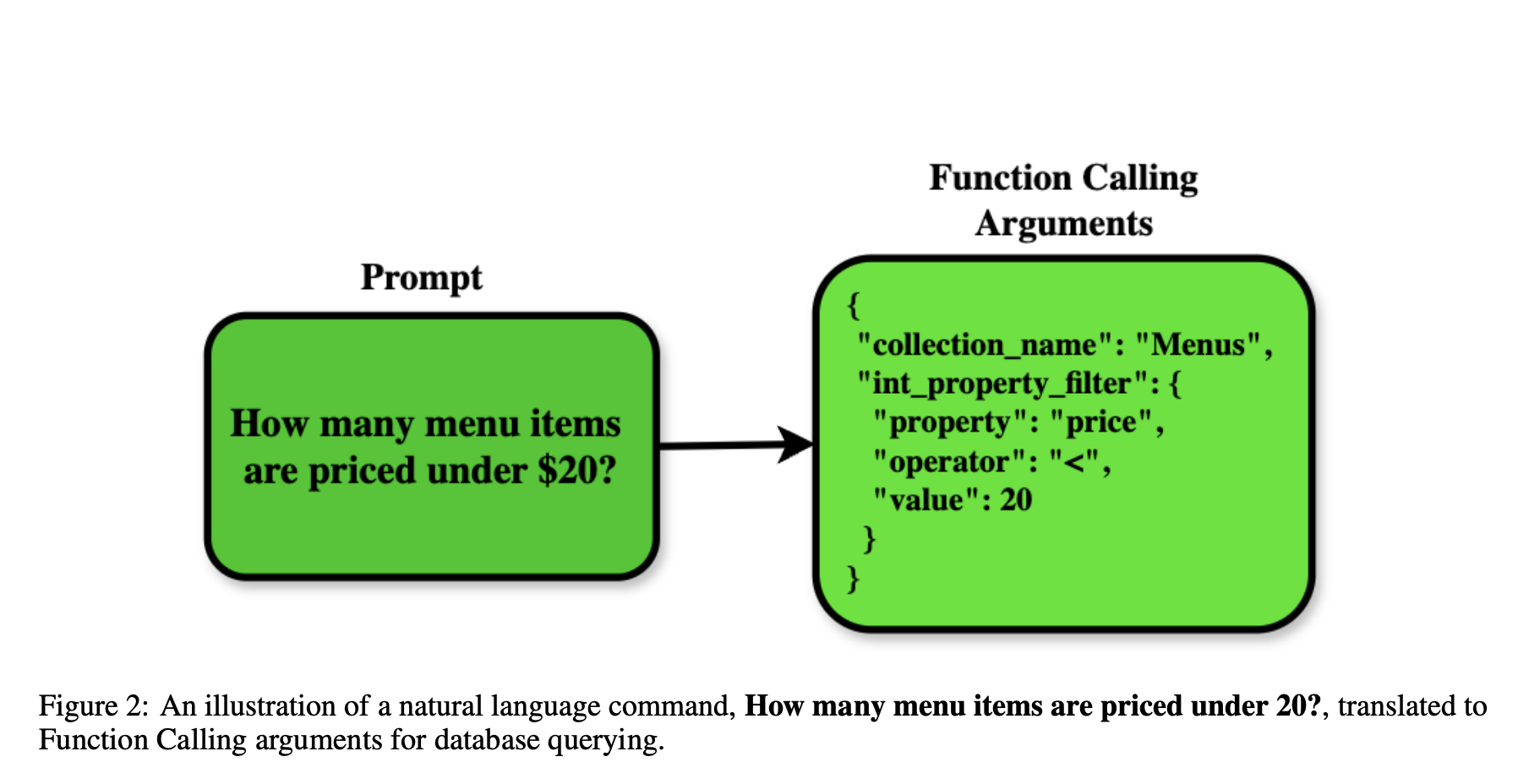

A function-calling approach is being developed as an alternative: instead of writing raw SQL, the LLM calls predefined API functions to interact with structured data, an interface intended to work consistently across different database systems. The goal is to make LLM-driven database queries more accurate and efficient.

Challenges with Current Text-to-SQL Solutions

Current text-to-SQL solutions face several challenges:

- Different database management systems (DBMS) use unique SQL dialects, making generalization difficult.

- Real-world queries often involve complex filtering and aggregations, which many models struggle to handle.

- Routing each query to the correct collection is essential, especially when a database contains several collections.

- Performance varies with query complexity, and standardized benchmarks for measuring it are still needed.
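To make the dialect problem concrete, the Python sketch below renders the same "return the first N rows" request for several systems. The helper name `top_n_query` and the dialect labels are hypothetical; the syntax differences themselves (`LIMIT`, `TOP`, `FETCH FIRST ... ROWS ONLY`) are standard behavior of those systems. A text-to-SQL model has to get details like these right for whichever system it targets.

```python
def top_n_query(dialect: str, table: str, n: int) -> str:
    """Render a 'return the first n rows' request in a given SQL dialect.

    Illustrative only: the table name is interpolated directly, so this
    must never be used with untrusted input.
    """
    if dialect in ("postgresql", "mysql", "sqlite"):
        return f"SELECT * FROM {table} LIMIT {n};"
    if dialect == "sqlserver":
        return f"SELECT TOP {n} * FROM {table};"
    if dialect == "oracle":
        # Row-limiting clause available from Oracle Database 12c onward.
        return f"SELECT * FROM {table} FETCH FIRST {n} ROWS ONLY;"
    raise ValueError(f"unsupported dialect: {dialect}")


for d in ("postgresql", "sqlserver", "oracle"):
    print(f"{d:>10}: {top_n_query(d, 'products', 5)}")
```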

Introducing the DBGorilla Benchmark

Researchers from Weaviate, Contextual AI, and Morningstar have introduced a structured function-calling approach that lets LLMs query databases without relying on SQL, together with the DBGorilla benchmark for evaluating it. The approach defines API functions for searching, filtering, and aggregating data; the authors report that it improves accuracy and reduces errors.
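The idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's implementation: the tool name `query_database`, its argument names, the toy `Products` collection, and the `run_call` executor are all hypothetical. The LLM would receive a tool definition like `QUERY_TOOL` and reply with a JSON object of arguments; here that reply is simulated with a hard-coded dictionary, and a naive keyword match stands in for real search.

```python
from typing import Any

# Hypothetical tool definition in the JSON-Schema style that function-calling
# LLM APIs accept. It is NOT the schema used in the study; the LLM would
# receive this and reply with a JSON object of arguments.
QUERY_TOOL = {
    "name": "query_database",
    "description": "Search, filter, and aggregate a single collection.",
    "parameters": {
        "type": "object",
        "properties": {
            "collection_name": {"type": "string"},
            "search_query": {"type": "string"},
            "filters": {"type": "array", "items": {"type": "object"}},
            "aggregation": {"type": "object"},
        },
        "required": ["collection_name"],
    },
}

# Toy in-memory collection standing in for a real database.
DB: dict[str, list[dict[str, Any]]] = {
    "Products": [
        {"name": "Oak Desk", "description": "solid oak desk", "price": 250, "in_stock": True},
        {"name": "Pine Shelf", "description": "pine wall shelf", "price": 80, "in_stock": False},
        {"name": "Oak Chair", "description": "oak dining chair", "price": 120, "in_stock": True},
    ],
}

OPS = {"=": lambda a, b: a == b, ">": lambda a, b: a > b, "<": lambda a, b: a < b}


def run_call(call: dict[str, Any]) -> Any:
    """Execute one function call produced by the model."""
    missing = [k for k in QUERY_TOOL["parameters"]["required"] if k not in call]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    rows = DB[call["collection_name"]]  # collection routing

    # Naive keyword match stands in for real keyword or vector search.
    if call.get("search_query"):
        terms = call["search_query"].lower().split()
        rows = [r for r in rows if all(t in r["description"].lower() for t in terms)]

    # Structured filters: exact conditions on named properties.
    for f in call.get("filters") or []:
        rows = [r for r in rows if OPS[f["operator"]](r[f["property"]], f["value"])]

    agg = call.get("aggregation")
    if agg is None:
        return rows
    values = [r[agg["property"]] for r in rows]
    if agg["metric"] == "count":
        return len(values)
    if agg["metric"] == "mean":
        return sum(values) / len(values) if values else None
    raise ValueError(f"unsupported metric: {agg['metric']}")


# Arguments an LLM might return for:
# "What is the average price of in-stock products described as oak?"
call = {
    "collection_name": "Products",
    "search_query": "oak",
    "filters": [{"property": "in_stock", "operator": "=", "value": True}],
    "aggregation": {"property": "price", "metric": "mean"},
}
print(run_call(call))  # 185.0
```

Because the model only fills in arguments, the same call could be translated to any backend by swapping out `run_call`; the model never has to emit dialect-specific SQL.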

Details of the DBGorilla Dataset

The DBGorilla dataset consists of 315 queries across five database schemas, each with three related collections. The queries cover a range of filter types and aggregation functions. Performance is evaluated on three metrics:

- Exact Match accuracy

- Abstract Syntax Tree (AST) alignment

- Collection routing accuracy
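A rough Python sketch of how such metrics could be computed over predicted and reference ("gold") function calls follows. Exact match and routing accuracy are implemented directly; `argument_overlap` is a deliberately simplified, hypothetical stand-in for AST-based partial credit and is not the study's formula. The example calls are invented for illustration.

```python
from typing import Any

Call = dict[str, Any]


def exact_match(pred: Call, gold: Call) -> bool:
    """Every argument, including nested filters, must be identical."""
    return pred == gold


def routed_correctly(pred: Call, gold: Call) -> bool:
    """Did the model target the same collection as the reference call?"""
    return pred.get("collection_name") == gold.get("collection_name")


def argument_overlap(pred: Call, gold: Call) -> float:
    """Simplified partial credit: share of argument names (from either call)
    whose values match. A stand-in for AST-based scoring, not the study's formula."""
    keys = set(pred) | set(gold)
    if not keys:
        return 1.0
    return sum(pred.get(k) == gold.get(k) for k in keys) / len(keys)


def evaluate(pairs: list[tuple[Call, Call]]) -> dict[str, float]:
    """Average each metric over (predicted, gold) pairs."""
    n = len(pairs)
    return {
        "exact_match": sum(exact_match(p, g) for p, g in pairs) / n,
        "routing": sum(routed_correctly(p, g) for p, g in pairs) / n,
        "arg_overlap": sum(argument_overlap(p, g) for p, g in pairs) / n,
    }


in_stock = {"property": "in_stock", "operator": "=", "value": True}
gold_a = {"collection_name": "Products", "filters": [in_stock]}
gold_b = {"collection_name": "Products", "search_query": "oak", "filters": [in_stock]}
# The second prediction folds the in-stock condition into the search text
# instead of emitting a structured filter.
pred_b = {"collection_name": "Products", "search_query": "oak in stock"}

pairs = [(dict(gold_a), gold_a), (pred_b, gold_b)]
print(evaluate(pairs))
```

The second pair fails exact match but is still routed correctly and earns partial credit, which shows why a benchmark can report high routing accuracy alongside a noticeably lower exact-match score.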

Performance Evaluation of LLMs

The study evaluated eight LLMs, including Claude 3.5 Sonnet and GPT-4o, on these three metrics. Key results:

- Claude 3.5 Sonnet had the highest exact match score at 74.3%.

- Boolean property filters were handled with 87.5% accuracy.

- Collection routing accuracy was consistently high, ranging from 96% to 98%.

Impact of Function Call Configurations

Additional experiments showed that varying the function-call configuration had minimal impact on performance; structured querying remained effective across setups.

Key Findings and Conclusion

The study concluded that function calling is a promising alternative to text-to-SQL for database querying:

- Top models achieved over 74% Exact Match accuracy.

- Routing accuracy exceeded 96%, so queries were almost always sent to the correct collection.

- LLMs still need to improve at distinguishing structured filters from search queries.

Stay Updated and Evolve with AI

For companies looking to leverage AI, consider these steps:

- Identify automation opportunities in customer interactions.

- Define measurable KPIs for AI initiatives.

- Select AI solutions that fit your needs.

- Implement gradually, starting with pilot projects.

For more insights and to connect, reach out to us at hello@itinai.com or follow us on our social media platforms.