VideoLLaMA 2: Advancing Multimodal Research in Video-Language Modeling

Introduction

Recent advances in AI have had the greatest impact on image tasks, particularly image recognition and photorealistic image generation. Video understanding and generation have not kept pace, and Video-LLMs in particular still have room to improve.

Practical Solutions and Value

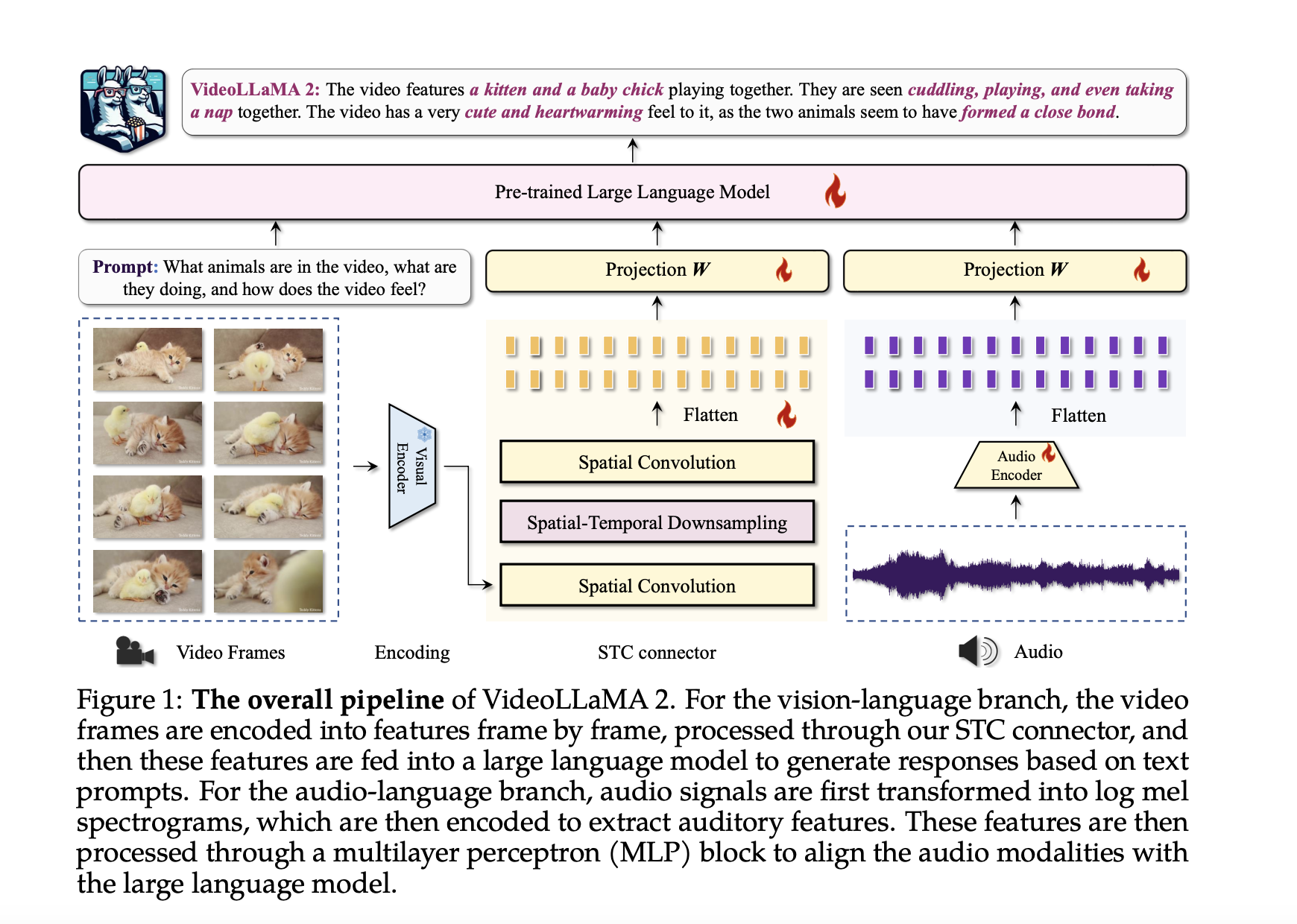

VideoLLaMA 2, developed by researchers at DAMO Academy, Alibaba Group, is a set of Video-LLMs designed to improve spatial-temporal modeling and audio understanding in video-related tasks. The models excel in video question answering, video captioning, and audio-based tasks, which shows their potential for complex video analysis and multimodal research.

Key Features

VideoLLaMA 2 has two distinguishing components: a custom Spatial-Temporal Convolution (STC) connector, designed to better capture video dynamics, and an integrated Audio Branch for enhanced multimodal understanding. It outperforms many open-source models and competes closely with proprietary ones, which positions it as a strong reference point for intelligent video analysis.
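
To give a rough sense of why a convolutional connector can help with video, the sketch below computes how a strided 3D convolution would shrink a grid of visual tokens laid out as frames × height × width. This is an illustration only, not the VideoLLaMA 2 implementation: the names `TokenGrid`, `conv_out`, and `downsample`, the 2×2×2 kernel and stride, and the 8-frame, 24×24-patch input are all assumptions chosen for the example. Only the per-axis output-size formula is the standard one for convolutions.

```python
from dataclasses import dataclass


def conv_out(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output length along one axis of a convolution (standard formula)."""
    if size + 2 * padding < kernel:
        raise ValueError("kernel is larger than the padded input")
    return (size + 2 * padding - kernel) // stride + 1


@dataclass
class TokenGrid:
    """Hypothetical layout of visual tokens: one token per (frame, row, column)."""
    frames: int
    height: int
    width: int

    @property
    def tokens(self) -> int:
        return self.frames * self.height * self.width


def downsample(grid: TokenGrid,
               kernel=(2, 2, 2),
               stride=(2, 2, 2),
               padding=(0, 0, 0)) -> TokenGrid:
    """Token grid after an assumed strided 3D convolution over time and space."""
    return TokenGrid(
        frames=conv_out(grid.frames, kernel[0], stride[0], padding[0]),
        height=conv_out(grid.height, kernel[1], stride[1], padding[1]),
        width=conv_out(grid.width, kernel[2], stride[2], padding[2]),
    )


# Illustrative input: 8 sampled frames, each split into 24 x 24 patches.
before = TokenGrid(frames=8, height=24, width=24)
after = downsample(before)

print(before.tokens)  # 4608
print(after.tokens)   # 576
```

Under these assumed settings the language model would receive one eighth as many visual tokens, and each remaining token would summarize a small neighborhood that spans adjacent frames as well as adjacent patches.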

Performance

Across multiple benchmarks, VideoLLaMA 2 consistently outperforms comparable open-source models and competes closely with proprietary ones. Its results are particularly strong in multi-choice video question answering and open-ended audio-video question answering, and it also performs well in video captioning and audio-based tasks.

Availability and Further Development

The models are publicly available for further development. For more information, see the paper, the model card on Hugging Face, and the GitHub repository.

