Understanding Neural Networks and Activation Functions

Neural networks, loosely inspired by the human brain, underpin tasks like image recognition and language processing. Activation functions supply the non-linearity that lets these networks learn complex patterns. However, many existing activation functions run into significant challenges:

Common Challenges:

- Vanishing gradients slow down learning in deep networks.

- “Dead neurons” occur when units output zero for every input, receive no gradient, and stop learning.

- Some functions are computationally expensive or perform inconsistently across tasks.
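The “dead neuron” challenge can be made concrete with a toy example. The sketch below assumes a single ReLU unit trained with squared error; the weight, bias, inputs, targets, and learning rate are all hypothetical values chosen for illustration. Because the pre-activation is negative for every input, the ReLU gradient is zero everywhere and a gradient step leaves the weight unchanged.

```python
def relu(z):
    """ReLU: pass positive values through, clamp the rest to zero."""
    return max(0.0, z)


def relu_grad(z):
    """Derivative of ReLU; the subgradient 0 is used at z == 0."""
    return 1.0 if z > 0 else 0.0


def weight_gradient(w, b, inputs, targets):
    """Mean gradient of 0.5 * (relu(w*x + b) - y)**2 with respect to w."""
    total = 0.0
    for x, y in zip(inputs, targets):
        z = w * x + b
        total += (relu(z) - y) * relu_grad(z) * x
    return total / len(inputs)


# Hypothetical data and parameters (not from the article).
inputs = [0.5, 1.0, 1.5, 2.0]
targets = [1.0, 2.0, 3.0, 4.0]
w, b = 1.0, -5.0          # the large negative bias makes w*x + b < 0 for every input
learning_rate = 0.1

grad = weight_gradient(w, b, inputs, targets)
w_after = w - learning_rate * grad

if __name__ == "__main__":
    print("gradient w.r.t. w:", grad)
    print("weight before / after one step:", w, w_after)
```

The prediction error is large for every sample, yet the unit cannot recover because no gradient reaches its weight.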

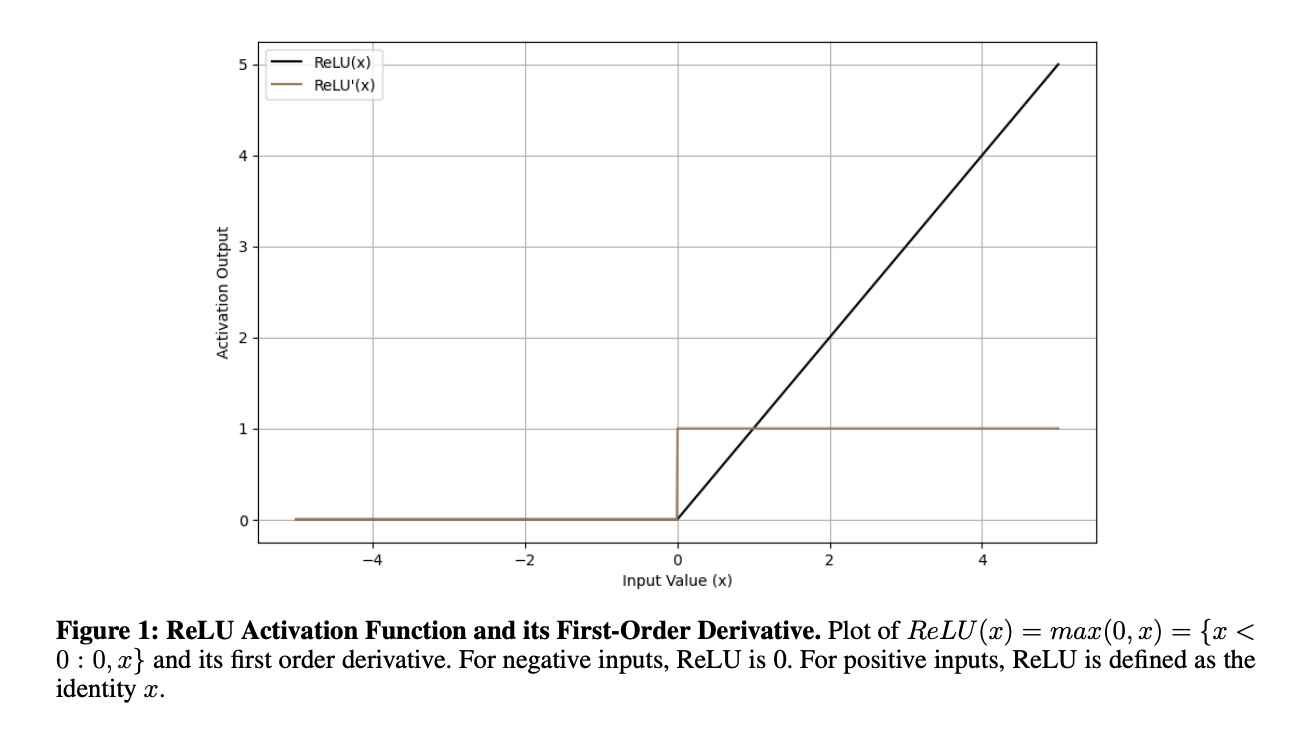

Modern alternatives have been developed but still face drawbacks. For instance, ReLU eases vanishing gradients for positive inputs but introduces the “dying ReLU” problem, in which units stuck at zero output stop updating. Variants like Leaky ReLU and PReLU address this with a non-zero slope for negative inputs but can lead to regularization challenges. Smoother functions like ELU, SiLU, and GELU provide richer non-linearities but are more complex to compute.
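For reference, the functions named above can be written as scalar Python functions. This is a minimal sketch using the standard textbook definitions; the default `slope` and `alpha` values are common choices rather than anything specified in the article, and PReLU is Leaky ReLU with the slope learned during training.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x):
    return max(0.0, x)


def leaky_relu(x, slope=0.01):
    # PReLU has the same form, but `slope` is a trainable parameter.
    return x if x > 0 else slope * x


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * math.expm1(x)


def silu(x):
    # Also known as Swish with beta = 1.
    return x * sigmoid(x)


def gelu(x):
    # Exact form based on the Gaussian CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


if __name__ == "__main__":
    for name, fn in [("relu", relu), ("leaky_relu", leaky_relu),
                     ("elu", elu), ("silu", silu), ("gelu", gelu)]:
        print(name, [round(fn(x), 4) for x in (-2.0, -0.5, 0.0, 0.5, 2.0)])
```

ReLU and Leaky ReLU need only a comparison and at most one multiplication, while ELU, SiLU, and GELU each call a transcendental function, which is the added cost the paragraph refers to.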

Introducing TeLU Activation Function

Researchers from the University of South Florida have proposed a new activation function called TeLU. This function combines the efficiency of ReLU with the stability of smoother functions. Here’s what makes TeLU valuable:

- It provides smooth transitions in outputs, ensuring gradual changes as inputs vary.

- It maintains near-zero-mean activations and robust gradient dynamics.

- TeLU enhances performance consistency across various tasks and architectures.
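The article does not state TeLU's formula. The sketch below assumes the definition TeLU(x) = x · tanh(e^x), which should be checked against the research paper. The derivative is obtained from that assumed formula with the product rule, and the function names are illustrative.

```python
import math

# Assumption: TeLU(x) = x * tanh(exp(x)). Verify against the paper.
_EXP_CLAMP = 50.0  # tanh(exp(50)) is already 1.0 in double precision


def telu(x):
    """Assumed TeLU definition, with the exponent clamped to avoid overflow."""
    return x * math.tanh(math.exp(min(x, _EXP_CLAMP)))


def telu_grad(x):
    """Derivative of x * tanh(e^x): tanh(e^x) + x * e^x * (1 - tanh(e^x)^2)."""
    e = math.exp(min(x, _EXP_CLAMP))
    t = math.tanh(e)
    return t + x * e * (1.0 - t * t)


if __name__ == "__main__":
    for x in (-4.0, -2.0, -1.0, 0.0, 1.0, 2.0, 4.0):
        print(f"x={x:+.1f}  telu={telu(x):+.5f}  grad={telu_grad(x):+.5f}")
```

Under this definition the function behaves almost like the identity for large positive inputs, as ReLU does, while remaining smooth at zero and keeping a non-zero gradient for moderately negative inputs.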

Benefits of TeLU:

- Quick convergence during training.

- Robust generalization to unseen data.

- Ability to approximate any continuous target function (universal approximation).

- Reduced risk of issues like exploding gradients.

Performance Evaluation

TeLU has been tested against other activation functions and shows promising results:

- It mitigates the vanishing gradient problem, which is essential for training deep networks.

- Tests on large datasets like ImageNet and Text8 reveal faster convergence and improved accuracy compared to ReLU.

- TeLU is computationally efficient and integrates well with ReLU-based configurations.

- It demonstrates stability across various neural network architectures.
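The vanishing gradient point can be illustrated with a toy calculation. The sketch below is not the paper's experimental setup: it assumes the definition TeLU(x) = x · tanh(e^x), stacks scalar activations with unit weights and no biases, and compares the end-to-end gradient against a sigmoid stack. The depth and starting input are arbitrary choices.

```python
import math


def sigmoid(x):
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never exceeds 0.25


def telu(x):
    # Assumed definition: x * tanh(exp(x)); exponent clamped to avoid overflow.
    return x * math.tanh(math.exp(min(x, 50.0)))


def telu_grad(x):
    e = math.exp(min(x, 50.0))
    t = math.tanh(e)
    return t + x * e * (1.0 - t * t)


def chain_gradient(act, act_grad, x, depth):
    """d(output)/d(input) for `depth` stacked scalar activations (chain rule)."""
    grad = 1.0
    for _ in range(depth):
        grad *= act_grad(x)   # local derivative at this layer's input
        x = act(x)            # forward to the next layer
    return grad


depth = 20
sigmoid_chain = chain_gradient(sigmoid, sigmoid_grad, 1.0, depth)
telu_chain = chain_gradient(telu, telu_grad, 1.0, depth)

if __name__ == "__main__":
    print("sigmoid chain gradient:", sigmoid_chain)
    print("TeLU chain gradient:   ", telu_chain)
```

Each sigmoid layer multiplies the gradient by at most 0.25, so the product collapses with depth. For non-negative inputs the assumed TeLU derivative stays at or above tanh(1) ≈ 0.76, so the gradient shrinks far more slowly in this toy setting.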

Conclusion

The TeLU activation function addresses major challenges faced by existing functions. Its successful application on benchmarks demonstrates faster convergence, enhanced accuracy, and stability in deep learning models. TeLU has the potential to serve as a baseline for future research in activation functions.
