UniBench: A Comprehensive Evaluation Framework for Vision-Language Models

Overview

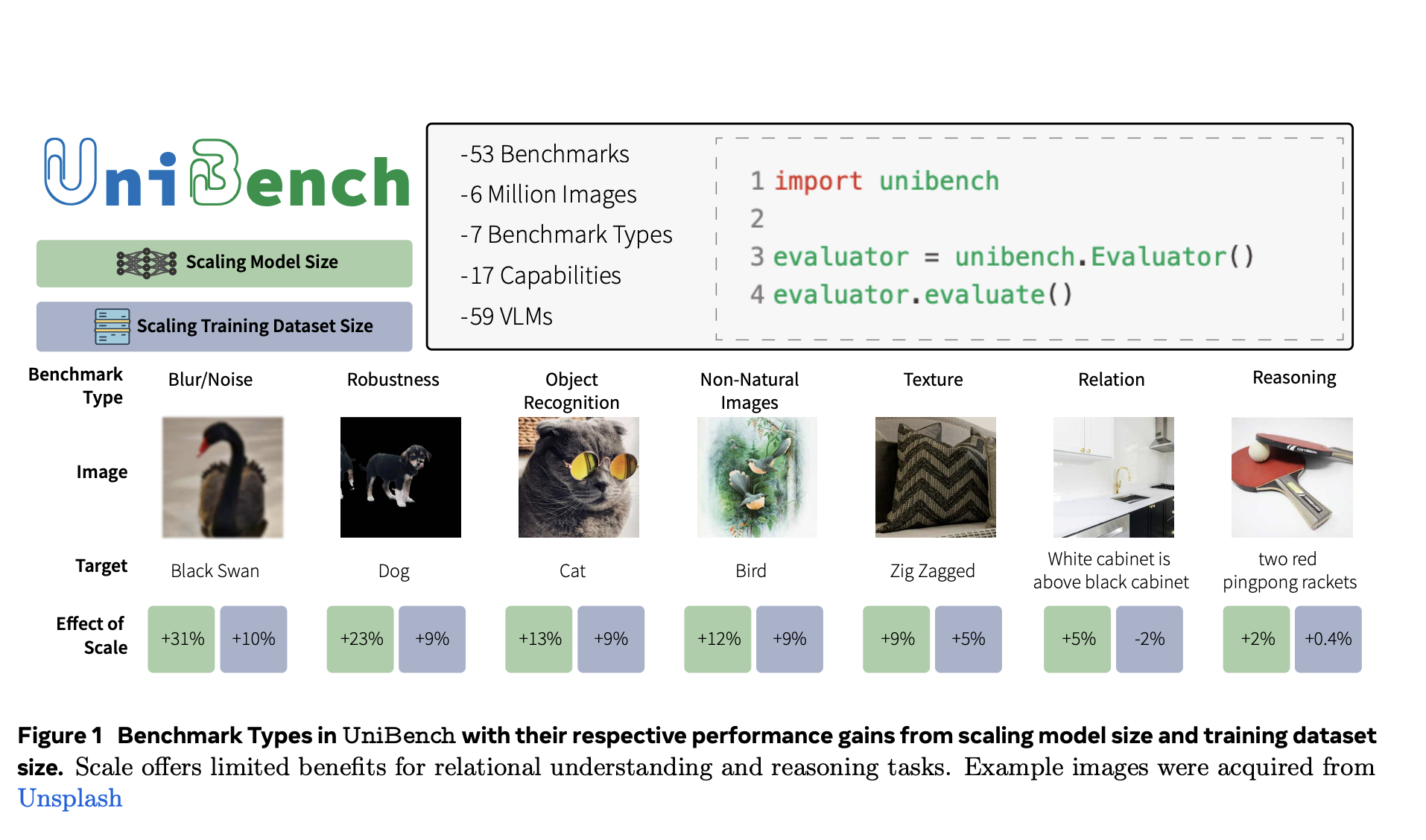

Vision-language models (VLMs) face challenges in evaluation due to the complex landscape of benchmarks. UniBench addresses these challenges by providing a unified platform that implements 53 diverse benchmarks in a user-friendly codebase, categorizing them into seven types and seventeen capabilities.

Key Insights

- Performance varies widely across tasks, with VLMs excelling in some areas but struggling with others.

- Scaling model size and training data improves performance in many areas, but offers limited benefits for visual relations and reasoning tasks.

- VLMs surprisingly struggle with simple numerical tasks like MNIST digit recognition.

- Data quality is emphasized over quantity, and tailored learning objectives can significantly impact performance.

Practical Solutions

UniBench provides a distilled set of representative benchmarks that can be run quickly on standard hardware. This efficient approach aims to streamline VLM evaluation, enabling more meaningful comparisons and insights into effective strategies for advancing VLM research.

UniBench: A Python Library to Evaluate Vision-Language Models VLMs Robustness Across Diverse Benchmarks

If you want to evolve your company with AI, stay competitive, and use UniBench to redefine your way of work. Identify Automation Opportunities, Define KPIs, Select an AI Solution, and Implement Gradually. For AI KPI management advice, connect with us at hello@itinai.com. Follow us on Twitter and join our Telegram Channel and LinkedIn Group for continuous insights into leveraging AI.