LoRA (Low-Rank Adaptation) is a parameter-efficient method for fine-tuning large pre-trained models. Instead of updating a full weight matrix, it represents the weight change as the product of two low-rank matrices, which cuts the number of trainable parameters and, with it, memory use and training cost. The technique applies to models across different domains.


Understanding LoRA — Low Rank Adaptation For Finetuning Large Models

Math behind this parameter efficient finetuning method

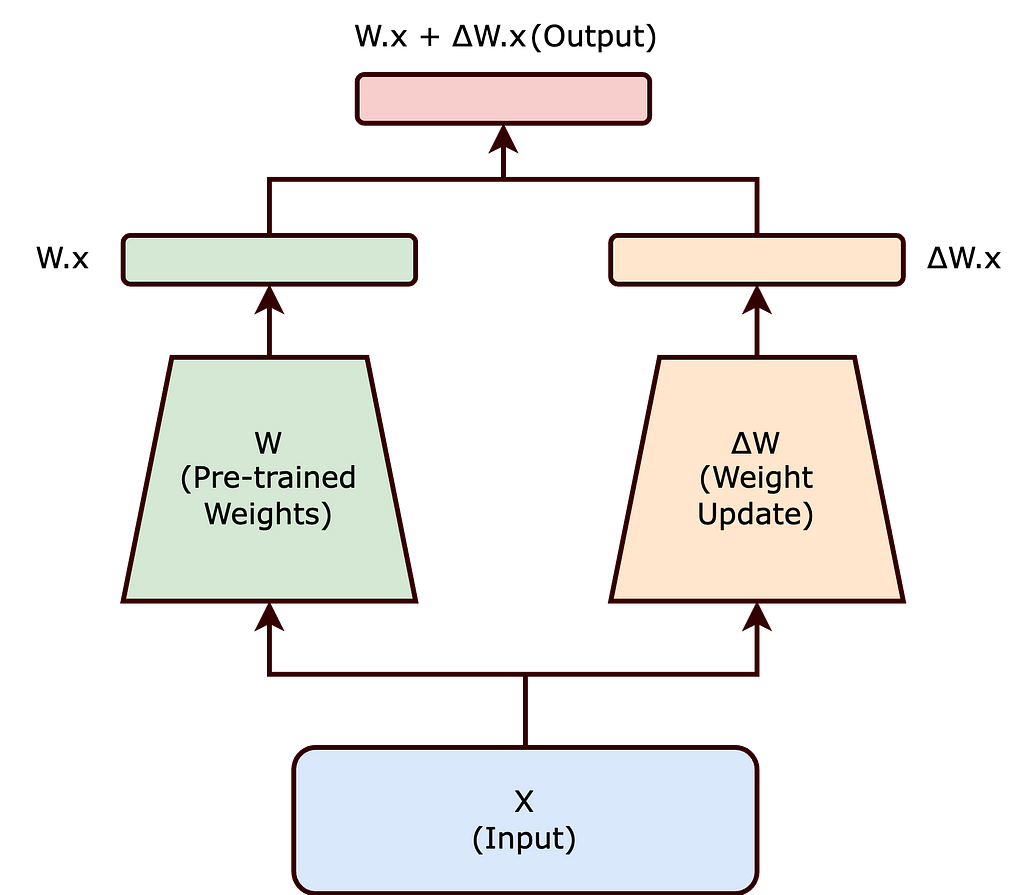

Fine-tuning a large pre-trained model is computationally expensive because it adjusts every weight, often millions or billions of parameters. LoRA addresses this by decomposing the weight update matrix during fine-tuning, so far fewer values need to be trained when adapting the model to a specific task.

LoRA represents the update matrix (ΔW) as the product of two smaller matrices, (A) and (B), whose shared inner dimension, the rank r, is much smaller than either dimension of the original weight matrix. Only (A) and (B) are trained, while the pre-trained weights stay frozen. This sharply reduces the number of trainable parameters, which brings a smaller memory footprint, faster training, feasibility on smaller hardware, and better scalability to larger models.
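
To make the decomposition concrete, here is a minimal NumPy sketch. The layer size (512 × 512), the rank (8), and the convention that (A) is d × r and (B) is r × k are assumptions chosen for illustration, not values from any particular model. Both factors are filled with random numbers so the check at the end is meaningful; in practice one factor is typically initialized to zero so that (ΔW) starts at zero.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 512, 512, 8

W = rng.standard_normal((d, k))          # frozen pre-trained weight, never updated
A = rng.standard_normal((d, r)) * 0.01   # trainable factor, d x r
B = rng.standard_normal((r, k)) * 0.01   # trainable factor, r x k

delta_W = A @ B                          # d x k update with rank at most r

full_params = W.size                     # d * k values in a full update
lora_params = A.size + B.size            # r * (d + k) values in the factored update

# The adapted layer can be applied without ever forming delta_W.
x = rng.standard_normal(k)
h_explicit = (W + delta_W) @ x
h_factored = W @ x + A @ (B @ x)

print(full_params, lora_params, np.allclose(h_explicit, h_factored))
```

With these assumed sizes, the factored update trains 8,192 values instead of 262,144, about 3% of the full matrix, and the two ways of applying the layer agree.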

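The rank of this decomposition controls the trade-off between efficiency and expressiveness: a smaller rank means fewer trainable parameters, but also a more restricted update. LoRA rests on the idea of intrinsic rank, the observation that the weight changes needed to adapt a pre-trained model tend to lie in a low-dimensional subspace, so a low-rank (ΔW) can capture most of the adaptation with significantly fewer parameters.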
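
The role of intrinsic rank can be illustrated with a small experiment. The sketch below builds a synthetic update whose true rank is 4 (an assumption made purely for the demonstration) and measures how well a rank-r matrix can reproduce it, using a truncated SVD as the best possible rank-r approximation. LoRA itself does not compute an SVD; it learns its factors by gradient descent. The point here is only to show why a small rank can be enough when the underlying update is low rank.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: a 64 x 64 update whose intrinsic rank is 4.
d, k, true_rank = 64, 64, 4
delta_W = rng.standard_normal((d, true_rank)) @ rng.standard_normal((true_rank, k))

def best_rank_r(M, r):
    """Best rank-r approximation of M in Frobenius norm (truncated SVD)."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U[:, :r] * s[:r]) @ Vt[:r, :]

def relative_error(M, r):
    """Frobenius-norm error of the best rank-r approximation, relative to M."""
    return np.linalg.norm(M - best_rank_r(M, r)) / np.linalg.norm(M)

errors = {r: relative_error(delta_W, r) for r in (1, 2, 4, 8)}
for r, err in errors.items():
    print(f"rank {r}: relative error {err:.2e}")
```

Ranks below 4 leave a visible error, while rank 4 and above reproduce the update up to floating-point precision. Going beyond the intrinsic rank adds parameters without adding accuracy.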

Practical Applications of LoRA

LoRA’s approach to decomposing (ΔW) into a product of lower rank matrices offers practical solutions for adapting large pre-trained models:

- Reduced Memory Footprint: LoRA lowers memory needs because gradients and optimizer state are kept only for the small factor matrices, not for the full weight matrix.

- Faster Training and Adaptation: With far fewer parameters to update, each training step involves less gradient and optimizer work, which can speed up fine-tuning of large models for new tasks.

- Feasibility for Smaller Hardware: LoRA’s lower trainable parameter count makes it possible to fine-tune large models on less powerful hardware.

- Scaling to Larger Models: The number of trainable parameters grows with r(d + k) rather than with the full d × k size of each weight matrix, so the trainable-parameter cost of fine-tuning rises more slowly than model size, which keeps adapting ever larger models practical.
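
A back-of-the-envelope estimate shows where the memory savings in the list above come from. The sketch below is a rough model, not a measurement: it assumes 32-bit values, one gradient per trainable parameter, and two extra optimizer values per parameter (as with Adam's first and second moment estimates). The helper name `trainable_state_bytes` and the 4096 × 4096 layer with rank 8 are hypothetical.

```python
def trainable_state_bytes(n_trainable, bytes_per_value=4, optimizer_slots=2):
    """Rough bytes for trainable weights, their gradients, and optimizer state.

    Assumes one gradient value per trainable parameter plus `optimizer_slots`
    extra values per parameter (2 matches Adam's two moment estimates).
    Activations and the frozen weights are deliberately not counted.
    """
    values_per_param = 1 + 1 + optimizer_slots  # weight + gradient + optimizer slots
    return n_trainable * values_per_param * bytes_per_value

# Hypothetical square layer and rank, chosen only for illustration.
d, k, r = 4096, 4096, 8

full = trainable_state_bytes(d * k)        # every entry of W is trainable
lora = trainable_state_bytes(r * (d + k))  # only the two factors are trainable

print(f"full fine-tuning: {full / 2**20:.0f} MiB per layer")
print(f"LoRA (r={r}): {lora / 2**20:.0f} MiB per layer")
```

Under these assumptions the trainable state for one layer shrinks from 256 MiB to 1 MiB. The frozen weight matrix still has to be held in memory (64 MiB at 32 bits here), so the total saving is smaller than the ratio alone suggests, but the gradient and optimizer overhead largely disappears.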

Conclusion

By decomposing (ΔW) into a product of lower-rank matrices, LoRA adapts large pre-trained models to new tasks while keeping computation and memory costs manageable. Because the updates needed for adaptation tend to have low intrinsic rank, much of the model’s capacity to learn a new task is preserved with significantly fewer trainable parameters.
