Understanding GUI Agents and Their Importance

Graphical User Interface (GUI) agents automate interaction with software by operating it the way a person would, through clicks, keystrokes, and touch gestures. They make complex tasks easier by autonomously navigating and manipulating GUI elements. To do so, they need to perceive their environment, and agents that work from visual input interpret the interface as it appears on screen. Recent advances in artificial intelligence aim to make these agents more efficient and more human-like.

Challenges with Current GUI Agents

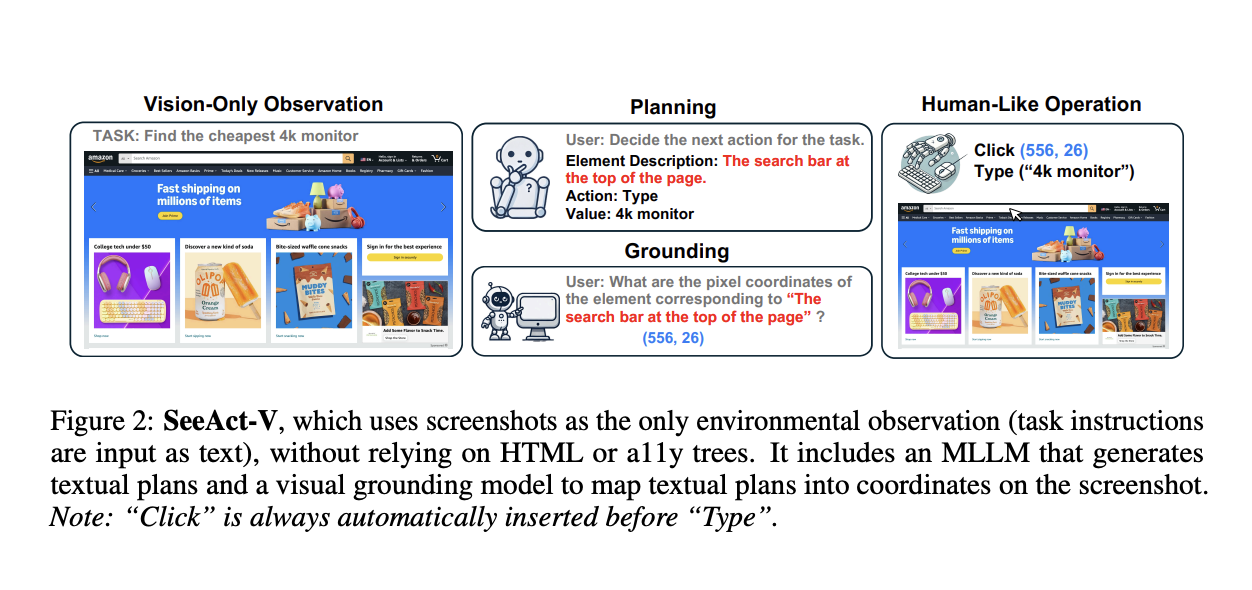

The main issue with existing GUI agents is their reliance on text-based representations of the interface, such as HTML or accessibility trees. These representations can be noisy and verbose, and they may be incomplete or inaccurate. As a result, agents often struggle with speed and efficiency when navigating different platforms, such as mobile apps and desktop software.

Introducing UGround: A New Solution

Researchers from Ohio State University and Orby AI have developed a model called UGround. Instead of relying on text-based representations such as HTML or accessibility trees, it operates directly on the visual rendering of the GUI. By perceiving the interface purely visually, UGround lets an agent locate and act on elements much as a human would, without needing the underlying text data.
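To make the idea concrete, here is a minimal sketch of how an agent might call a visual grounding model. It assumes the agent describes the target element in natural language and the model returns pixel coordinates; that interface is an assumption for illustration, not UGround's actual API. The names `Screenshot`, `Grounder`, `stub_grounder`, and `click_action` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple, Union

Point = Tuple[int, int]


@dataclass(frozen=True)
class Screenshot:
    """A rendered GUI frame. Only the pixels are available: no HTML, no accessibility tree."""
    width: int
    height: int
    pixels: bytes = b""  # raw image data; unused by the stub below


# Hypothetical signature: (screenshot, element description) -> (x, y) in pixels.
Grounder = Callable[[Screenshot, str], Point]


def stub_grounder(screenshot: Screenshot, description: str) -> Point:
    """Stand-in for a real grounding model: always points at the screen centre."""
    return (screenshot.width // 2, screenshot.height // 2)


def click_action(
    grounder: Grounder, screenshot: Screenshot, description: str
) -> Dict[str, Union[str, int]]:
    """Turn an element description into a pixel-level click action."""
    x, y = grounder(screenshot, description)
    if not (0 <= x < screenshot.width and 0 <= y < screenshot.height):
        raise ValueError(f"grounded point {(x, y)} lies outside the screenshot")
    return {"action": "click", "x": x, "y": y}


if __name__ == "__main__":
    shot = Screenshot(width=1280, height=720)
    print(click_action(stub_grounder, shot, "the blue Submit button"))
```

Because the agent only ever sees a screenshot and emits coordinates, the same loop applies unchanged to web, desktop, and mobile interfaces. A real grounding model would replace `stub_grounder`.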

How UGround Works

UGround was trained on a large dataset of 10 million GUI elements drawn from over 1.3 million screenshots. This collection covers a wide range of layouts and element types, so the model learns from diverse visual representations. As a result, UGround can handle different platforms, including web, desktop, and mobile.
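For a sense of scale, the figures above work out to roughly seven to eight annotated elements per screenshot. The sketch below does that arithmetic and shows one plausible shape for a single training record. The `GroundingExample` fields are assumptions for illustration, not the dataset's actual schema.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GroundingExample:
    """Hypothetical record: one annotated GUI element on one screenshot."""
    screenshot_path: str
    platform: str                     # e.g. "web", "desktop", or "mobile"
    expression: str                   # text describing the element
    bbox: Tuple[int, int, int, int]   # (left, top, right, bottom) in pixels


def mean_elements_per_screenshot(num_elements: int, num_screenshots: int) -> float:
    """Average number of annotated elements per screenshot."""
    if num_screenshots <= 0:
        raise ValueError("num_screenshots must be positive")
    return num_elements / num_screenshots


if __name__ == "__main__":
    example = GroundingExample(
        screenshot_path="screens/checkout.png",  # made-up path
        platform="web",
        expression="the blue Submit button",
        bbox=(600, 340, 680, 380),
    )
    print(example)
    # Figures quoted in the article: 10 million elements, 1.3 million screenshots.
    print(round(mean_elements_per_screenshot(10_000_000, 1_300_000), 1))  # 7.7
```

Since the article says "over 1.3 million" screenshots, the true average is slightly below this figure.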

Performance Highlights

UGround outperforms existing models in benchmark tests, achieving up to 20% higher accuracy on visual grounding tasks. For example, it scored 82.8% accuracy in mobile environments and 63.6% in desktop settings. These results suggest that a visual-only approach can perform better than models that rely on both visual and text inputs.
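The article does not define how grounding accuracy is computed. A common convention, assumed here, is to count a prediction as correct when the predicted click point falls inside the target element's bounding box. The sketch below implements that convention. The function names and sample data are illustrative and not taken from the benchmarks.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (left, top, right, bottom)


def point_in_box(point: Point, box: Box) -> bool:
    """True if the point lies inside the box (edges count as inside)."""
    x, y = point
    left, top, right, bottom = box
    return left <= x <= right and top <= y <= bottom


def grounding_accuracy(predictions: Sequence[Point], targets: Sequence[Box]) -> float:
    """Fraction of predicted points that land inside their target boxes."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not predictions:
        raise ValueError("need at least one prediction")
    hits = sum(point_in_box(p, b) for p, b in zip(predictions, targets))
    return hits / len(predictions)


if __name__ == "__main__":
    # Made-up data: two of the three predicted points hit their targets.
    preds = [(5, 5), (50, 50), (12, 12)]
    boxes = [(0, 0, 10, 10), (0, 0, 10, 10), (10, 10, 20, 20)]
    print(f"{grounding_accuracy(preds, boxes):.1%}")  # 66.7%
```

Under this convention, a score such as 82.8% means that about 83 of every 100 predicted points landed on the intended element.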

Superior Results Across Platforms

GUI agents using UGround also improved in end-to-end evaluations. For instance, UGround achieved a 29% performance increase over previous models in the ScreenSpot agent setting. It also performed well on benchmarks such as AndroidControl and OmniACT, which points to its robustness across diverse GUI tasks.

Conclusion: The Future of GUI Interaction

UGround addresses the limitations of current GUI agents by perceiving interfaces visually, as a human does. Its ability to operate without text-based representations of the interface marks a significant step forward in human-computer interaction. The model improves the efficiency and accuracy of GUI agents and opens the way for further advances in automated GUI navigation.
