Understanding Vision-and-Language Models (VLMs)

Vision-and-language models (VLMs) handle a range of computer vision tasks through a text interface. These tasks include:

- Recognizing images

- Reading text from images (OCR)

- Detecting objects

VLMs approach these tasks by answering visual questions with text responses. How well they process and combine images and text, however, is still being explored.

Current Limitations

Most VLM methods define a task through either text or image inputs, missing the potential of integrating both. In-context learning (ICL), a capability of large language models (LLMs), lets a model adapt to a new task from a few examples given in the prompt. VLMs combine visual and text data in one of two ways:

- Late fusion: pre-trained vision and language components are connected

- Early fusion: a single model is trained end-to-end on both modalities
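
To make the difference concrete, here is a minimal sketch of how the input sequence could be assembled in each case. It is an illustration under stated assumptions, not any particular model's code: the function names, the identity-like projector, and the tiny embedding table are all hypothetical, and the sketch assumes late fusion uses a projector in front of the language model while early fusion uses one shared token vocabulary.

```python
from typing import List

Vector = List[float]


def project(features: List[Vector], weights: List[Vector]) -> List[Vector]:
    """Linear map from vision-feature space into the language model's embedding space."""
    return [[sum(w * x for w, x in zip(row, feat)) for row in weights] for feat in features]


def late_fusion_inputs(patch_features: List[Vector], projector: List[Vector],
                       text_embeddings: List[Vector]) -> List[Vector]:
    """Late fusion (assumed form): outputs of a pre-trained vision encoder are
    projected, then placed in front of the text embeddings."""
    return project(patch_features, projector) + text_embeddings


def early_fusion_inputs(image_token_ids: List[int], text_token_ids: List[int],
                        embedding_table: List[Vector]) -> List[Vector]:
    """Early fusion (assumed form): image and text tokens share one vocabulary
    and one embedding table inside a single end-to-end model."""
    return [embedding_table[t] for t in image_token_ids + text_token_ids]


# Hypothetical toy values: 2 image patches with 3-dim features, 2-dim embeddings.
patch_features = [[0.5, 0.25, 9.0], [1.0, 2.0, 3.0]]
projector = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # keeps the first two feature dims
text_embeddings = [[0.1, 0.2], [0.3, 0.4]]
embedding_table = [[0.1, 0.2], [0.3, 0.4], [0.0, 0.0], [0.5, 0.6], [0.7, 0.8]]

late = late_fusion_inputs(patch_features, projector, text_embeddings)
early = early_fusion_inputs([3, 4], [0, 1], embedding_table)
```

In both cases the language model ends up with one sequence of vectors; what differs is whether the image part comes from a separately trained encoder or from the model's own embedding table.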

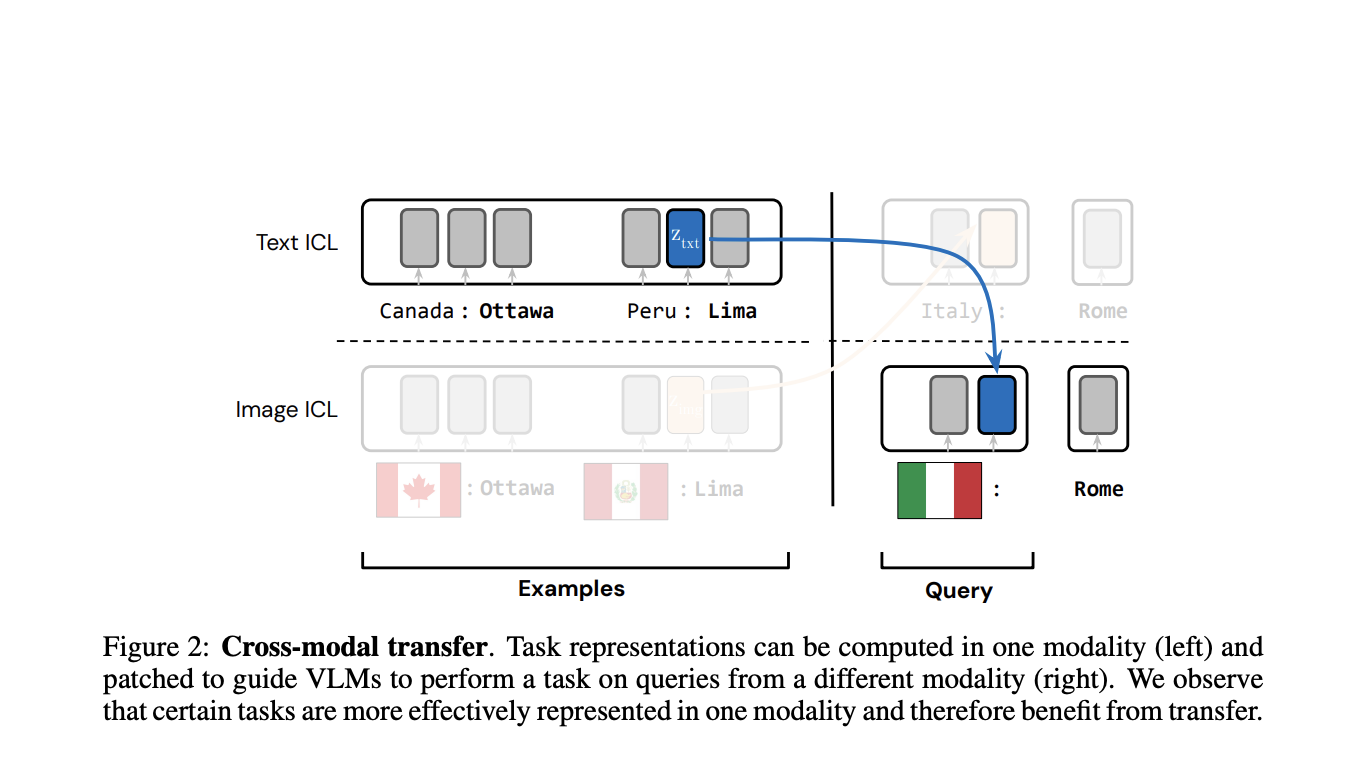

Research shows that task representations can transfer between modalities, enhancing performance when combining image and text inputs.

Research Insights from UC Berkeley

Researchers from the University of California, Berkeley, studied how task vectors, the internal activations that encode which task a model is performing, are formed and transferred in VLMs. They found that VLMs map a task into a shared representation space regardless of whether it is specified by text examples, image examples, or instructions.

Experimentation and Findings

Six tasks were created to test the behavior of VLMs with task vectors. The study revealed a three-phase process in VLMs:

- Encoding input

- Forming a task representation

- Generating outputs

Key findings include:

- Cross-modal patching (xPatch) improved accuracy by 14–33% over text ICL and 8–13% over image ICL.

- Text-based task vectors were more efficient than image-based ones.

- Combining instruction-based and exemplar-based task vectors enhanced task representation by 18%.

- Task transfer from text to image achieved up to 52% accuracy compared to baselines.
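
The patching idea behind these findings can be sketched with a toy model. This is not the authors' implementation: the random "layers", the causal averaging, and every function name are hypothetical stand-ins. The sketch also assumes that a task vector is the last-position hidden state at one intermediate layer, averaged over several ICL prompts, and that patching overwrites that same position at the same layer while the model processes a query from the other modality.

```python
import math
import random
from typing import List, Optional, Tuple

Vector = List[float]
Matrix = List[Vector]


def make_toy_layers(dim: int, n_layers: int, seed: int = 0) -> List[Matrix]:
    """Random weight matrices standing in for the layers of a VLM (toy only)."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(dim)]
            for _ in range(n_layers)]


def apply_layer(weights: Matrix, tokens: List[Vector]) -> List[Vector]:
    """Causal toy layer: each position sees the mean of itself and earlier positions."""
    out = []
    for pos in range(len(tokens)):
        context = [sum(col) / (pos + 1) for col in zip(*tokens[: pos + 1])]
        out.append([math.tanh(sum(w * c for w, c in zip(row, context))) for row in weights])
    return out


def forward(layers: List[Matrix], tokens: List[Vector],
            patch_layer: Optional[int] = None, patch_vector: Optional[Vector] = None,
            capture_layer: Optional[int] = None) -> Tuple[Vector, Optional[Vector]]:
    """Return (last-position output, last-position state seen at capture_layer)."""
    captured = None
    for i, weights in enumerate(layers):
        tokens = apply_layer(weights, tokens)
        if i == patch_layer and patch_vector is not None:
            # Overwrite only the last position; earlier positions are untouched.
            tokens = tokens[:-1] + [list(patch_vector)]
        if i == capture_layer:
            captured = list(tokens[-1])
    return tokens[-1], captured


def task_vector(layers: List[Matrix], prompts: List[List[Vector]], layer: int) -> Vector:
    """Average the last-position state at `layer` over several ICL prompts."""
    states = [forward(layers, p, capture_layer=layer)[1] for p in prompts]
    return [sum(col) / len(states) for col in zip(*states)]


DIM, LAYER = 4, 1
layers = make_toy_layers(DIM, n_layers=3)
rng = random.Random(1)


def random_tokens(n: int) -> List[Vector]:
    return [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(n)]


text_icl_prompts = [random_tokens(6) for _ in range(5)]  # stand-ins for text ICL prompts
image_query = random_tokens(3)                           # stand-in for an image query

tv = task_vector(layers, text_icl_prompts, LAYER)
plain, _ = forward(layers, image_query)
patched, _ = forward(layers, image_query, patch_layer=LAYER, patch_vector=tv)
```

Here `patched` is the output for the image query after a task vector derived from text prompts has been injected, while `plain` is the output without it. With a real VLM the same pattern would be applied to actual hidden states, and the choice of layer would need to be tuned.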

Conclusion and Future Directions

VLMs can effectively encode and transfer task representations across different modalities, paving the way for more versatile AI models. The research indicates that transferring tasks from text to images is more effective, likely due to the focus on text during VLM training.
