Reinforcement Learning (RL) Overview

Reinforcement Learning (RL) is widely used in science and technology to improve processes and systems. Its key weakness is sample inefficiency: an RL agent often needs thousands of attempts to learn a task that a human can master quickly.

Introducing Meta-RL

Meta-RL addresses sample inefficiency by letting an agent draw on past experience. The agent remembers previous episodes and uses them to adapt to new situations, which makes learning faster and more efficient. Compared with standard RL, Meta-RL can explore more effectively and develop more complex strategies, such as learning new skills or conducting experiments.

Challenges with Meta-RL

Despite its benefits, Meta-RL has limitations. Traditional methods focus on maximizing cumulative reward, which forces the agent to balance exploration and exploitation as it goes. In practice they often get stuck in local optima, especially when the agent must sacrifice short-term reward for long-term gain.
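To see why giving up short-term reward can pay off, consider a small worked example. The sketch below uses a hypothetical two-armed bandit whose numbers (`FIXED_REWARD`, `UNKNOWN_OUTCOMES`, `PRIOR`) are illustrative assumptions, not values from the research. One arm has a known payoff, while the other pays either nothing or a high reward on every pull, and the agent does not know which.

```python
# Hypothetical two-armed bandit. All numbers are illustrative assumptions.
FIXED_REWARD = 0.6              # known payoff of the safe arm
UNKNOWN_OUTCOMES = (0.0, 1.0)   # the unknown arm pays one of these on every pull
PRIOR = (0.5, 0.5)              # prior probability of each outcome


def always_exploit(horizon: int) -> float:
    """Expected return of a policy that never tries the unknown arm."""
    return FIXED_REWARD * horizon


def explore_once_then_exploit(horizon: int) -> float:
    """Expected return when the first pull is spent testing the unknown arm."""
    # First pull: expected payoff of the unknown arm under the prior.
    first_pull = sum(p * r for p, r in zip(PRIOR, UNKNOWN_OUTCOMES))
    # Later pulls: play whichever arm is now known to be better.
    later_pull = sum(
        p * max(r, FIXED_REWARD) for p, r in zip(PRIOR, UNKNOWN_OUTCOMES)
    )
    return first_pull + later_pull * (horizon - 1)


if __name__ == "__main__":
    for horizon in (1, 2, 10):
        print(horizon, always_exploit(horizon), explore_once_then_exploit(horizon))
```

With these assumed numbers, testing the unknown arm is the worse choice for a single pull (0.5 versus 0.6 in expectation), yet over ten pulls the exploring policy expects roughly 7.7 against 6.0. A learner that only follows immediate reward never takes the first step and stays at the safe arm.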

New Approach: First-Explore, Then Exploit

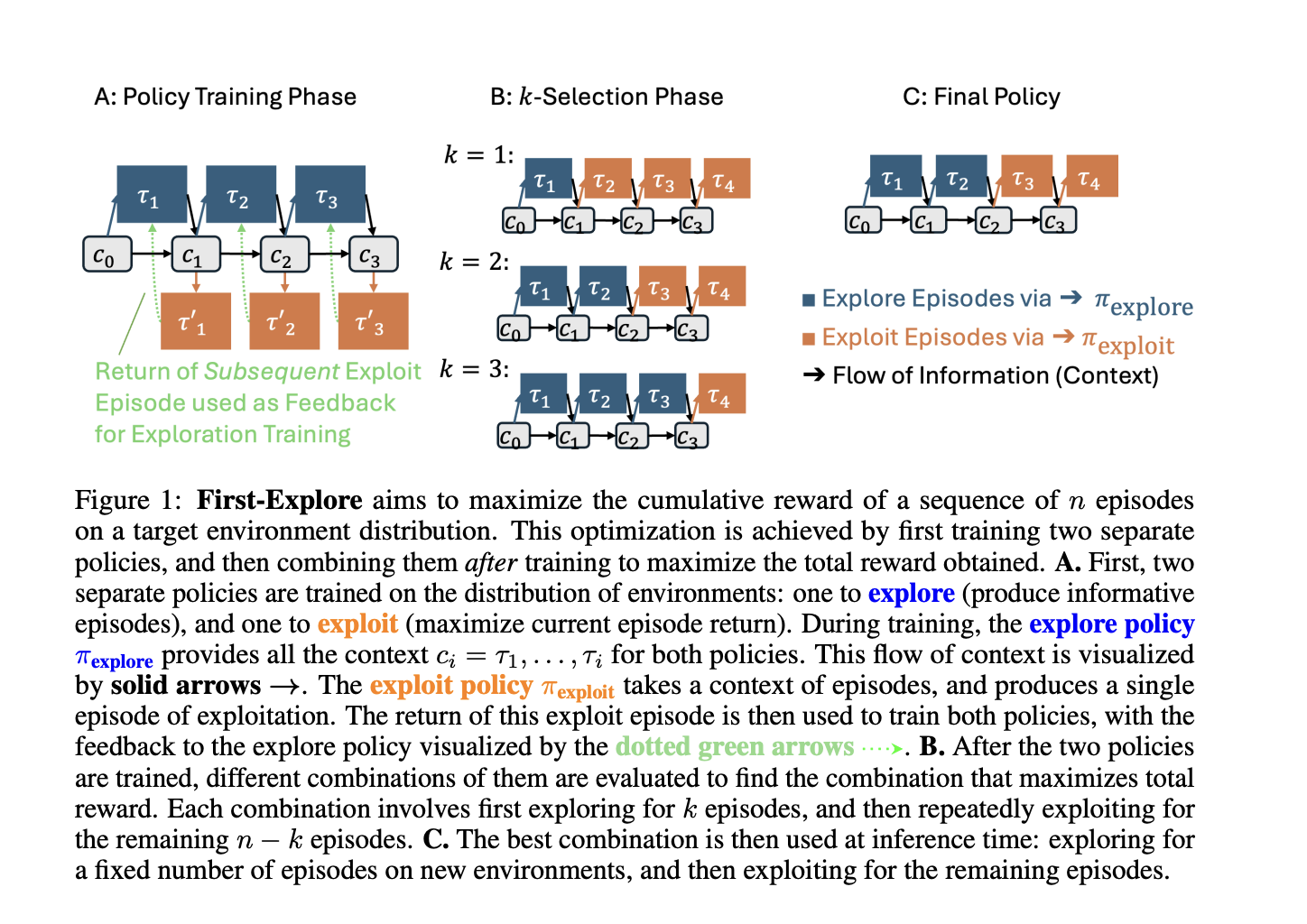

Researchers at the University of British Columbia introduced a new method called First-Explore, Then Exploit (First-Explore for short). This approach separates exploration from exploitation by using two distinct policies:

- The Explore Policy gathers information to inform the Exploit Policy.

- The Exploit Policy then maximizes rewards based on the information from the Explore Policy.

This separation allows for better exploration without the immediate pressure of maximizing rewards.
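The division of labor can be sketched on a simple multi-armed bandit. This is a minimal illustration, not the researchers' implementation: the hand-written rules in `explore_policy` and `exploit_policy` stand in for learned policies, and every name and parameter here (`Context`, `run`, `noise`) is a hypothetical choice made for the example.

```python
import random
from typing import List, Tuple

# Shared memory: (arm pulled, reward observed) pairs gathered so far.
Context = List[Tuple[int, float]]


def explore_policy(context: Context, n_arms: int) -> int:
    """Choose an arm to gather information, ignoring immediate reward."""
    pulls = [0] * n_arms
    for arm, _ in context:
        pulls[arm] += 1
    # Stand-in for a learned explore policy: try the least-sampled arm.
    return pulls.index(min(pulls))


def exploit_policy(context: Context, n_arms: int) -> int:
    """Choose the arm with the highest mean reward observed in the context."""
    totals = [0.0] * n_arms
    pulls = [0] * n_arms
    for arm, reward in context:
        totals[arm] += reward
        pulls[arm] += 1
    means = [
        totals[a] / pulls[a] if pulls[a] else float("-inf")
        for a in range(n_arms)
    ]
    return means.index(max(means))


def run(arm_means: List[float], explore_steps: int, exploit_steps: int,
        noise: float = 0.1, seed: int = 0) -> float:
    """Explore first, then exploit; return the reward earned while exploiting."""
    rng = random.Random(seed)
    n_arms = len(arm_means)
    context: Context = []
    for _ in range(explore_steps):
        arm = explore_policy(context, n_arms)
        context.append((arm, rng.gauss(arm_means[arm], noise)))
    total = 0.0
    for _ in range(exploit_steps):
        arm = exploit_policy(context, n_arms)
        total += rng.gauss(arm_means[arm], noise)
    return total


if __name__ == "__main__":
    print(run([0.1, 0.9, 0.4], explore_steps=3, exploit_steps=5))
```

The explore policy is free to pull arms that look unpromising, because its only job is to fill the context. The exploit policy never has to gamble, because it only acts on what the context already shows.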

Implementation and Results

First-Explore uses a GPT-2-style causal transformer architecture. The researchers tested it in three challenging environments:

- Fixed Arm Bandit: A problem that requires forgoing immediate rewards.

- Dark Treasure Rooms: A grid world where the agent searches for hidden rewards.

- Ray Maze: A complex maze with multiple reward positions.

First-Explore outperformed traditional Meta-RL in all three environments, earning:

- Twice the reward in the Fixed Arm Bandit.

- Ten times the reward in the Dark Treasure Rooms.

- Six times the reward in the Ray Maze.

Conclusion

First-Explore tackles the immediate-reward problem in Meta-RL by creating two independent policies, one to explore and one to exploit, that work together for better overall performance. It still faces open challenges, however, such as handling future exploration and negative rewards.
