Practical Solutions for Enhancing Information Extraction with AI

Improving Information Extraction with Large Language Models (LLMs)

Large Language Models (LLMs) have made significant progress on Information Extraction (IE) tasks in Natural Language Processing (NLP). With instruction tuning, LLMs can be trained to annotate text according to predefined annotation guidelines, which improves their ability to generalize to new datasets.
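One way to give a model its annotation guidelines is to place them directly in the prompt. The sketch below is loosely modeled on the code-style prompts used by GoLLIE, where each label is a Python class whose docstring holds the guideline. The `Person` and `Location` labels, their guideline text, the comment headers, and the `render_guideline` and `build_prompt` helpers are hypothetical illustrations, not the exact format of any released model.

```python
from dataclasses import dataclass, fields


# Hypothetical label definitions. Each docstring is the annotation
# guideline the model is asked to follow for that label.
@dataclass
class Person:
    """Names of people, including fictional characters; exclude titles such as 'Dr.'."""

    span: str


@dataclass
class Location:
    """Names of geographic places such as countries, cities, and rivers."""

    span: str


def render_guideline(cls: type) -> str:
    """Render a label class back into source-like text, docstring included."""
    # f.type is the annotation object (e.g. str); fall back to it if it has no __name__.
    field_lines = "\n".join(
        f"    {f.name}: {getattr(f.type, '__name__', f.type)}" for f in fields(cls)
    )
    return f'@dataclass\nclass {cls.__name__}:\n    """{cls.__doc__}"""\n{field_lines}'


def build_prompt(labels: list[type], text: str) -> str:
    """Assemble guidelines, input text, and an open result list for the model to complete."""
    guidelines = "\n\n".join(render_guideline(cls) for cls in labels)
    return (
        "# Annotation guidelines\n"
        f"{guidelines}\n\n"
        "# Text to annotate\n"
        f"text = {text!r}\n\n"
        "# Annotations\n"
        "result = ["
    )


if __name__ == "__main__":
    print(build_prompt([Person, Location], "Amina visited Nairobi."))
```

The model would be expected to complete the open `result = [` list with label instances. Because the guidelines travel with every prompt, such a model can in principle be pointed at a new label set or dataset by writing new definitions rather than retraining, which is the generalization benefit described above.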

Challenges with Low-Resource Languages

LLMs struggle with low-resource languages because these languages lack both the unlabeled text needed for pre-training and the labeled data needed for fine-tuning, which limits model performance on them.

Introducing the TransFusion Framework

The TransFusion framework, developed by researchers at the Georgia Institute of Technology, tackles this problem by integrating external Machine Translation (MT) systems: text in a low-resource language is translated into English, and the model uses this translated data to improve IE in the original language.

Key Steps in the TransFusion Framework

The TransFusion framework involves three primary steps: translating low-resource text into English during inference, fusing the annotations made on the translated data with the original text, and constructing a TransFusion reasoning chain that integrates annotation and fusion into a single decoding pass.
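The three steps can be sketched as a toy pipeline. Everything here is a hypothetical stand-in: a phrase lookup plays the external MT system, two small dictionaries play the English-side and source-side annotators, the Swahili sentence is only illustrative, and the "English labels win" fusion rule is a simplification (in TransFusion the model itself learns to fuse). Phrase-by-phrase translation is assumed so that positions align one-to-one between the source and its translation.

```python
# Hypothetical phrase lexicon standing in for an external MT system
# (Swahili -> English). Unknown phrases, such as names, pass through unchanged.
TOY_MT = {
    "alitembelea": "visited",
    "Umoja wa Mataifa": "United Nations",
    "jana": "yesterday",
}

# Hypothetical annotators: the English-side one recognizes more entities
# than the weaker source-side (low-resource) one.
ENGLISH_NER = {"Amina": "PER", "United Nations": "ORG"}
SOURCE_NER = {"Amina": "PER"}

# Maps a phrase position to its entity label.
Labels = dict[int, str]


def translate(phrases: list[str]) -> list[str]:
    """Step 1: translate at inference time, phrase by phrase, so position i
    in the output is aligned with position i in the source."""
    return [TOY_MT.get(p, p) for p in phrases]


def annotate(phrases: list[str], lexicon: dict[str, str]) -> Labels:
    """Label every phrase found in the annotator's lexicon."""
    return {i: lexicon[p] for i, p in enumerate(phrases) if p in lexicon}


def fuse(english_labels: Labels, source_labels: Labels) -> Labels:
    """Step 2: merge both views; English-side labels take precedence and
    source-only labels are kept (a simplified fusion rule)."""
    return {**source_labels, **english_labels}


def transfusion_chain(phrases: list[str]) -> str:
    """Step 3: emit English annotations, then fused annotations on the
    original text, as one output sequence (hypothetical format)."""
    english = translate(phrases)
    english_labels = annotate(english, ENGLISH_NER)
    fused = fuse(english_labels, annotate(phrases, SOURCE_NER))
    english_part = ", ".join(f"{english[i]} [{lab}]" for i, lab in sorted(english_labels.items()))
    final_part = ", ".join(f"{phrases[i]} [{lab}]" for i, lab in sorted(fused.items()))
    return f"English: {english_part}\nFinal: {final_part}"


if __name__ == "__main__":
    print(transfusion_chain(["Amina", "alitembelea", "Umoja wa Mataifa", "jana"]))
    # English: Amina [PER], United Nations [ORG]
    # Final: Amina [PER], Umoja wa Mataifa [ORG]
```

The output lists the English-side annotations first and the fused annotations on the original text second, mirroring how a reasoning chain lets a model emit both in one decoding pass. In this toy run, fusion recovers the organization `Umoja wa Mataifa` (United Nations), which the weaker source-side annotator missed.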

Introducing GoLLIE-TF

GoLLIE-TF is an instruction-tuned LLM for IE designed to narrow the performance gap between high- and low-resource languages, making LLMs more effective at handling low-resource languages.

Experimental Results and Performance Improvements

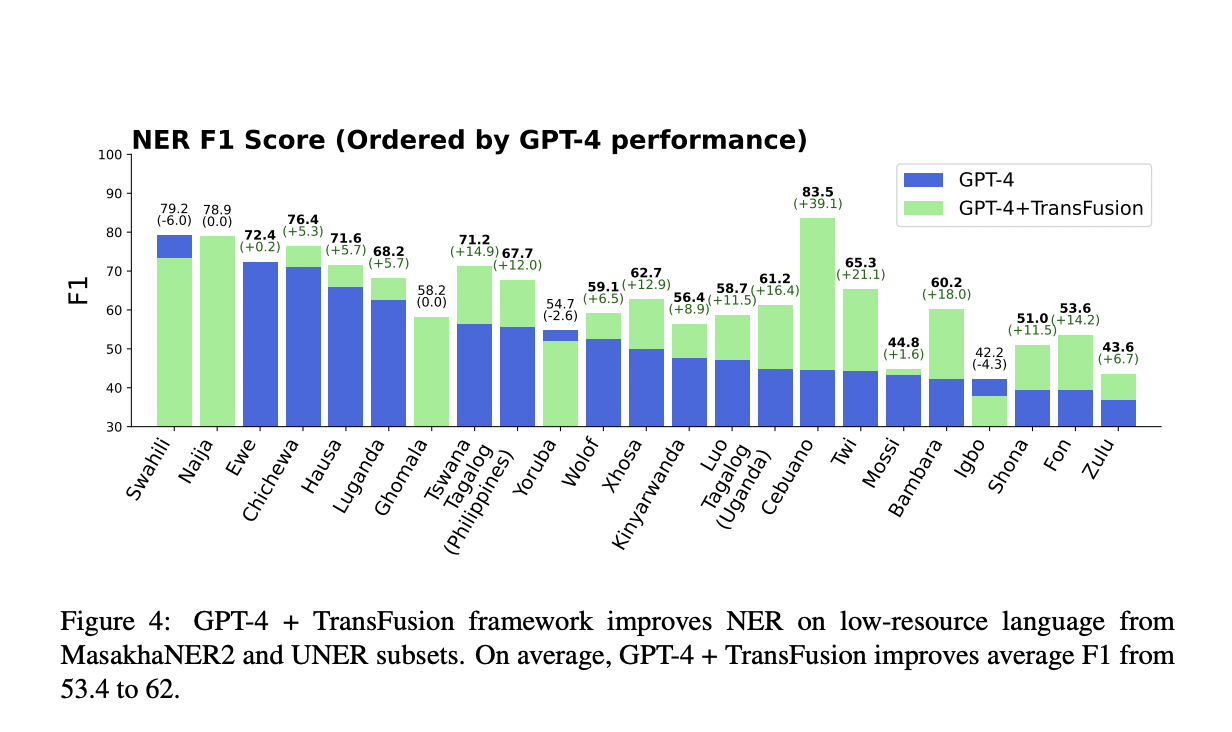

Experiments on multilingual IE datasets show that GoLLIE-TF performs well, achieving stronger zero-shot cross-lingual transfer than its base model. Applying TransFusion to proprietary models such as GPT-4 also considerably improved named entity recognition (NER) performance in low-resource languages.

Conclusion and Impact

Together, TransFusion and GoLLIE-TF offer an effective way to improve IE in low-resource languages and narrow the performance gap with high-resource languages, with notable gains across various models and datasets.