Enhancing Transformer Models with Advanced Positional Understanding

Introduction to Transformers and Positional Encoding

Transformers have become essential tools in artificial intelligence, particularly for processing sequential and structured data. A key challenge is token order: self-attention by itself treats its input as an unordered set, so Transformers have no inherent mechanism for encoding sequence order. Rotary Position Embedding (RoPE) has emerged as a popular solution, especially in language and vision tasks. It encodes each absolute position as a rotation of the query and key vectors, so that attention scores depend only on the relative offset between positions.
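
To make the idea concrete, here is a minimal pure-Python sketch of one-dimensional RoPE. The function names, the example vectors, and the frequency base of 10000 are illustrative assumptions, not details taken from the research discussed in this article.

```python
import math

def rope_rotate(vec, position, base=10000.0):
    """Rotate each consecutive (even, odd) feature pair by position * frequency."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        freq = base ** (-i / dim)  # assumed schedule: lower frequency for later pairs
        angle = position * freq
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[i], vec[i + 1]
        out.extend([c * x - s * y, s * x + c * y])
    return out

def attention_score(q, k, q_pos, k_pos):
    """Dot product of a rotated query and a rotated key."""
    q_rot = rope_rotate(q, q_pos)
    k_rot = rope_rotate(k, k_pos)
    return sum(a * b for a, b in zip(q_rot, k_rot))

# Hypothetical query and key vectors with an even number of features.
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.4]

# Both pairs of positions are 4 apart, so the two scores should agree.
score_a = attention_score(q, k, 3, 7)
score_b = attention_score(q, k, 103, 107)
print(abs(score_a - score_b) < 1e-9)
```

Each position is encoded in absolute terms, yet the score only sees the difference between the two positions, which is the behavior the rest of this article builds on.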

Challenges in Scaling RoPE

As the complexity of models increases, so does the need for a more expressive and flexible RoPE. The challenge lies in scaling RoPE from simple one-dimensional sequences to multidimensional spatial data while preserving two critical features:

- Relativity: The ability to distinguish positions relative to one another.

- Reversibility: The guarantee of unique recovery of original positions.
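
One way to state the two properties formally, assuming that R_x denotes the rotation matrix applied at position x (the notation is chosen here for illustration):

```latex
\text{Relativity:}\quad R_{x_1}^{\top} R_{x_2} = R_{x_2 - x_1}
\qquad
\text{Reversibility:}\quad R_{x_1} = R_{x_2} \;\Longrightarrow\; x_1 = x_2
```

The first identity is what makes the attention score between a query at x_1 and a key at x_2 depend only on the offset x_2 - x_1. The second says that no two distinct positions share the same rotation.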

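Current approaches often treat each spatial axis independently, assigning separate feature groups to each axis. This keeps the encoding simple but prevents it from capturing interactions between axes, which limits positional understanding in complex spatial settings.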
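
As a rough illustration of the axis-independent design, the sketch below applies a two-dimensional RoPE to a four-feature vector. The helper names, the vector, and the single shared frequency `theta` are assumptions made for this example only.

```python
import math

def rotate_pair(x, y, angle):
    """Rotate the 2D point (x, y) by the given angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [c * x - s * y, s * x + c * y]

def axial_rope_2d(vec, row, col, theta=0.5):
    """First feature pair encodes the row only; second pair encodes the column only."""
    first = rotate_pair(vec[0], vec[1], row * theta)
    second = rotate_pair(vec[2], vec[3], col * theta)
    return first + second

v = [1.0, 0.0, 0.0, 1.0]
same_row_a = axial_rope_2d(v, row=2, col=0)
same_row_b = axial_rope_2d(v, row=2, col=9)

# The row features are identical regardless of the column, and vice versa:
# no feature ever sees a combination of both axes.
print(same_row_a[:2] == same_row_b[:2])
```

Because the rotation is block-diagonal with one block per axis, no feature can respond to a diagonal direction or any other mixture of the two coordinates.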

Innovative Solutions from the University of Manchester

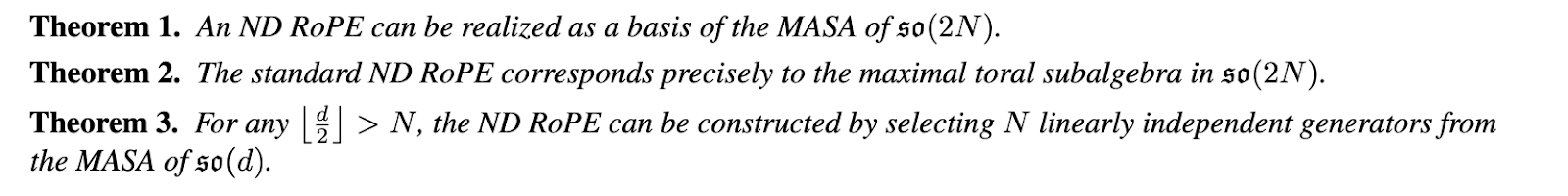

Researchers at the University of Manchester have introduced a method that extends RoPE to N dimensions using Lie group and Lie algebra theory. Their approach guarantees relativity and reversibility by requiring the generators of a valid RoPE construction to lie within a maximal abelian subalgebra (MASA) of the special orthogonal Lie algebra, that is, within a largest possible set of mutually commuting skew-symmetric matrices.

Key Features of the New Framework

The methodology involves:

- Defining RoPE transformations as matrix exponentials of skew-symmetric generators.

- Generalizing the approach to N dimensions by selecting N linearly independent, mutually commuting generators, one per positional axis.

- Incorporating a learnable orthogonal matrix to enable dimensional interactions while maintaining mathematical properties.
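
The three ingredients above can be sketched in a few dozen lines of pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses N = 2 positional axes and 4 features, picks the simplest block generators `B1` and `B2`, substitutes a fixed rotation `Q` for the learnable orthogonal matrix, and applies `Q` by conjugation. All names are hypothetical.

```python
import math

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def identity(n):
    return [[float(i == j) for j in range(n)] for i in range(n)]

def expm(a, terms=40):
    """Matrix exponential via a truncated Taylor series (adequate for small norms)."""
    n = len(a)
    result = identity(n)
    term = identity(n)
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, a)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

def max_abs_diff(a, b):
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))

# Two commuting, linearly independent skew-symmetric generators (assumed choice).
B1 = [[0, -1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
B2 = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, -1], [0, 0, 1, 0]]

# Stand-in for the learnable orthogonal matrix: a fixed rotation mixing features 1 and 2.
mix = 0.7
Q = [[1, 0, 0, 0],
     [0, math.cos(mix), -math.sin(mix), 0],
     [0, math.sin(mix), math.cos(mix), 0],
     [0, 0, 0, 1]]

def rope_nd(pos):
    """R(pos) = Q exp(pos[0] * B1 + pos[1] * B2) Q^T."""
    gen = [[pos[0] * B1[i][j] + pos[1] * B2[i][j] for j in range(4)] for i in range(4)]
    return matmul(matmul(Q, expm(gen)), transpose(Q))

p1, p2 = (0.3, -1.2), (1.1, 0.4)
offset = (p2[0] - p1[0], p2[1] - p1[1])

# Relativity: R(p1)^T R(p2) should equal R(p2 - p1).
relativity_error = max_abs_diff(matmul(transpose(rope_nd(p1)), rope_nd(p2)), rope_nd(offset))

# The result is still a rotation-like (orthogonal) matrix.
r = rope_nd(p2)
orthogonality_error = max_abs_diff(matmul(transpose(r), r), identity(4))

# With Q applied, moving along the first axis alone now touches features outside its block.
cross_axis_entry = rope_nd((1.0, 0.0))[0][2]
print(relativity_error < 1e-9, orthogonality_error < 1e-9, abs(cross_axis_entry) > 0.1)
```

Conjugating by an orthogonal matrix keeps the generators skew-symmetric and commuting, which is why relativity survives in this sketch while the features of different axes become mixed. In a trained model `Q` would be a parameter constrained to stay orthogonal rather than a constant.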

This innovative framework not only retains the essential properties of relativity and reversibility but also allows for flexible adaptations to higher dimensions.

Potential Applications and Benefits

The implications of this research are significant for various applications, including:

- Improved performance in complex spatial and multimodal environments.

- Enhanced expressiveness of Transformer architectures.

- The ability to learn inter-dimensional relationships without sacrificing foundational properties.

Empirical results for downstream tasks are not yet reported, so these benefits remain expected rather than demonstrated. What the theoretical analysis does establish is that the proposed construction satisfies relativity and reversibility.

Conclusion

This research from the University of Manchester presents a mathematically rigorous solution to the limitations of current RoPE approaches. By grounding the method in Lie algebra theory, the authors provide a pathway for learning inter-dimensional relationships, closing a significant gap in positional encoding. The framework covers traditional 1D and 2D inputs and extends to more complex N-dimensional data, paving the way for more sophisticated Transformer architectures.

Next Steps for Businesses

To leverage the advancements in AI and Transformer models, businesses should consider the following steps:

- Identify processes that can be automated with AI.

- Determine key performance indicators (KPIs) to measure the impact of AI investments.

- Select customizable tools that align with business objectives.

- Start with small projects, gather data on their effectiveness, and gradually expand AI usage.
