DeepCoder-14B-Preview: A Breakthrough in Code Reasoning

Introduction

Growing software complexity and the push for developer productivity have created strong demand for intelligent code generation and automated programming tools. Despite advances in natural language processing, progress on code models has been held back by a shortage of the high-quality, verifiable datasets that effective training requires.

Overview of DeepCoder-14B-Preview

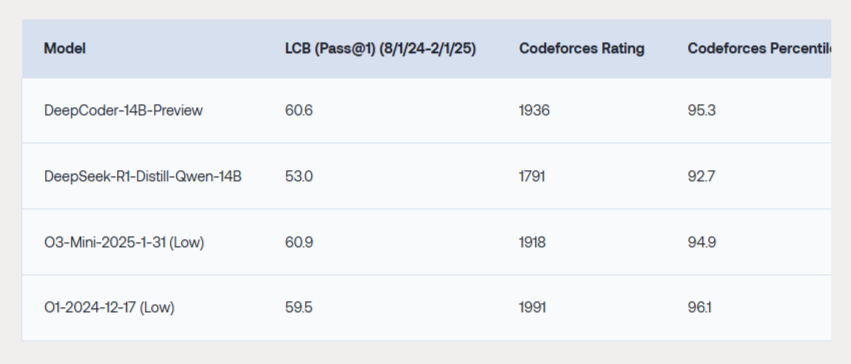

Together AI, in partnership with the Agentica team, recently released DeepCoder-14B-Preview, a fully open-source code reasoning model that rivals models such as o3-mini with just 14 billion parameters. It reaches 60.6% Pass@1 accuracy on the LiveCodeBench (LCB) benchmark, nearly closing the gap with more resource-intensive models.

Key Performance Metrics

- DeepCoder-14B-Preview achieves 60.6% Pass@1 accuracy on LCB, comparable to o3-mini’s 60.9%.

- The model improves on its base model, DeepSeek-R1-Distill-Qwen-14B, by about 8 percentage points (60.6% versus 53.0% on LCB).

- It reached a Codeforces rating of 1936, placing it in the 95.3rd percentile, which indicates strong competitive-programming ability.
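The metrics above can be made concrete with a short sketch. It assumes the simplest reading of Pass@1 (one sampled solution per problem, counted as a pass only if it passes every test) and shows the difference between a percentage-point gain and a relative gain. The function names and the toy result list are illustrative and are not taken from the LiveCodeBench tooling.

```python
def pass_at_1(results):
    """Fraction of problems whose single sampled solution passed all tests.

    Assumption: one sample per problem; `results` holds one bool per problem.
    """
    if not results:
        raise ValueError("results must be non-empty")
    return sum(1 for passed in results if passed) / len(results)


def point_gain(new_pct, base_pct):
    """Absolute gain in percentage points."""
    return round(new_pct - base_pct, 1)


def relative_gain(new_pct, base_pct):
    """Gain relative to the base score, in percent."""
    return round(100 * (new_pct - base_pct) / base_pct, 1)


# Toy example: 3 of 5 problems solved on the first attempt.
print(pass_at_1([True, True, False, True, False]))  # 0.6

# Scores reported in the article: 60.6% (DeepCoder) versus 53.0% (base model).
print(point_gain(60.6, 53.0))     # 7.6 percentage points
print(relative_gain(60.6, 53.0))  # 14.3 percent relative improvement
```

The reported improvement of about 8 is therefore a percentage-point difference, not a relative one.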

Training Methodology

DeepCoder was trained for 2.5 weeks on 32 H100 GPUs using a carefully curated dataset of 24,000 coding problems. The dataset combines a variety of verified coding problems and was built for quality and diversity, so that the training signal stays reliable.
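For a rough sense of scale, the training budget can be converted into GPU-hours. This is only back-of-the-envelope arithmetic on the two figures above, and it assumes all 32 GPUs ran continuously for the full 2.5 weeks, so it should be read as an upper bound.

```python
def gpu_hours(weeks, gpus):
    """Upper-bound GPU-hours, assuming every GPU is busy for the whole period."""
    wall_clock_hours = weeks * 7 * 24
    return wall_clock_hours * gpus


print(2.5 * 7 * 24)        # 420.0 wall-clock hours
print(gpu_hours(2.5, 32))  # 13440.0 GPU-hours
```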

Importance of Dataset Quality

The quality of the dataset plays a crucial role in the model’s effectiveness. DeepCoder utilized a selection process that emphasized:

- Programmatic verification of test cases.

- A minimum of five unit tests per problem.

- Deduplication, so that repeated problems do not skew training.
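The three criteria above can be sketched as a small filtering pass. This is a minimal illustration, not the team's actual pipeline: the `Problem` record, the `solve(x)` convention for reference solutions, and the hash-based duplicate check are all assumptions made for the example. "Programmatic verification" is interpreted here as "the reference solution passes every test".

```python
import hashlib
from dataclasses import dataclass

MIN_TESTS = 5  # the article's minimum of five unit tests per problem


@dataclass
class Problem:  # hypothetical record layout
    statement: str
    reference_solution: str  # Python source that defines solve(x)
    tests: list              # list of (input, expected_output) pairs


def reference_passes(problem):
    """Check that the reference solution passes every test.

    exec() is used only to keep the sketch short; untrusted code should be
    run in an isolated sandbox instead.
    """
    namespace = {}
    try:
        exec(problem.reference_solution, namespace)
        solve = namespace["solve"]
        return all(solve(inp) == expected for inp, expected in problem.tests)
    except Exception:
        return False


def fingerprint(statement):
    """Hash of the whitespace- and case-normalized statement (exact-duplicate check)."""
    normalized = " ".join(statement.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def curate(problems):
    """Keep problems with enough tests, a passing reference, and a new fingerprint."""
    seen, kept = set(), []
    for p in problems:
        if len(p.tests) < MIN_TESTS or not reference_passes(p):
            continue
        key = fingerprint(p.statement)
        if key in seen:
            continue
        seen.add(key)
        kept.append(p)
    return kept


double = "def solve(x):\n    return 2 * x\n"
good_tests = [(i, 2 * i) for i in range(5)]
problems = [
    Problem("Double the input.", double, good_tests),
    Problem("double   the INPUT.", double, good_tests),                    # duplicate
    Problem("Triple the input.", double, [(i, 3 * i) for i in range(1, 6)]),  # reference fails
    Problem("Too few tests.", double, good_tests[:3]),                     # only 3 tests
]
print(len(curate(problems)))  # 1
```

A production pipeline would likely need fuzzier duplicate detection than an exact hash, since the same problem often appears with different wording.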

Innovative Training Environment

The training of DeepCoder incorporated a dual-sandbox environment, which allowed for large-scale parallel evaluations of over 1,000 coding problems at each reinforcement learning step. This ensured that every model-generated solution was rigorously tested, minimizing errors and promoting genuine reasoning over memorization.
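The idea of scoring many generated solutions in parallel can be sketched with the Python standard library. This is an illustration under stated assumptions, not DeepCoder's sandbox: a plain subprocess with a timeout stands in for a real sandbox (it provides no security isolation), the all-or-nothing reward is an assumed design, and `run_candidate`, `reward`, and `score_batch` are hypothetical names.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_candidate(source, stdin_text, timeout_s=10.0):
    """Run candidate Python source in a separate interpreter process.

    Returns the stripped stdout, or None on a crash or timeout.
    Note: a subprocess is only a stand-in for a real sandbox.
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", source],
            input=stdin_text,
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return None
    if proc.returncode != 0:
        return None
    return proc.stdout.strip()


def reward(source, tests):
    """Assumed binary reward: 1.0 only if every test passes, otherwise 0.0."""
    for stdin_text, expected in tests:
        if run_candidate(source, stdin_text) != expected.strip():
            return 0.0
    return 1.0


def score_batch(candidates, tests, workers=8):
    """Score candidates concurrently; threads suffice because the work runs in subprocesses."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda src: reward(src, tests), candidates))


tests = [("2 3\n", "5"), ("10 -4\n", "6")]
correct = "a, b = map(int, input().split()); print(a + b)"
wrong = "a, b = map(int, input().split()); print(a * b)"
print(score_batch([correct, wrong], tests))  # [1.0, 0.0]
```

An all-or-nothing reward gives no credit for partially correct solutions, which is one way to discourage a model from gaming easy test cases.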

System Optimization

To speed up training further, the system behind DeepCoder was optimized with “verl-pipeline.” This upgrade effectively doubled training speed and provides a modular framework that can be reused for future model development in open-source ecosystems.
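One way to see how a pipelined trainer can approach a 2x speedup is a toy timing model. It assumes each reinforcement learning step has a sampling phase and a training phase, and that sampling for the next step can overlap with training on the current one. The model and its numbers are hypothetical and do not describe the actual scheduler.

```python
def sequential_time(steps, sample_s, train_s):
    """Each step samples, then trains, with no overlap."""
    return steps * (sample_s + train_s)


def pipelined_time(steps, sample_s, train_s):
    """Sampling for step i+1 overlaps with training on step i (toy model)."""
    if steps == 0:
        return 0.0
    # First sample and last train cannot overlap; the rest is bounded by the slower phase.
    return sample_s + (steps - 1) * max(sample_s, train_s) + train_s


# Hypothetical durations: both phases take 10 seconds, 100 steps in total.
seq = sequential_time(100, 10.0, 10.0)
pipe = pipelined_time(100, 10.0, 10.0)
print(seq, pipe)             # 2000.0 1010.0
print(round(seq / pipe, 2))  # 1.98
```

In this model the speedup approaches 2x only when the two phases take similar time; if one phase dominates, overlapping hides only the shorter one.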

Key Takeaways

- DeepCoder-14B-Preview performs competitively with more resource-intensive models using only 14 billion parameters.

- Carefully curated datasets were essential for effective training, avoiding noise and reward hacking.

- The model was trained efficiently, emphasizing reproducibility.

- Accurate verification processes were integral to the training phase.

- Optimized systems facilitated rapid development cycles and future scalability.

Conclusion

DeepCoder-14B-Preview represents a significant advancement in code reasoning technologies, achieving high performance with a lean parameter profile. Its open-source nature fosters community collaboration and innovation, making it a valuable tool for businesses aiming to integrate AI into their coding processes. Embracing such technologies can transform workflow efficiency and enhance productivity across various domains.
