Understanding Large Language Models (LLMs)

Large Language Models (LLMs) excel at tasks such as machine translation and question answering. However, we still lack a clear understanding of how they work and how they generate relevant text. A major challenge is that LLMs operate under hard limits, such as a fixed vocabulary and a finite context window, which restrict their potential. Addressing these limits is crucial for making LLMs more efficient and expanding their real-world use.

Current Research Gaps

Earlier studies have documented the success of LLMs, particularly transformer-based models. However, their analyses have mostly relied on simplified versions of these models or ignored the temporal dependencies between tokens in a sequence. As a result, there are gaps in our understanding of how LLMs generalize beyond their training data. There is also a lack of theoretical frameworks that explain how well LLMs generalize when they are trained on temporally dependent sequences.

New Framework for LLMs

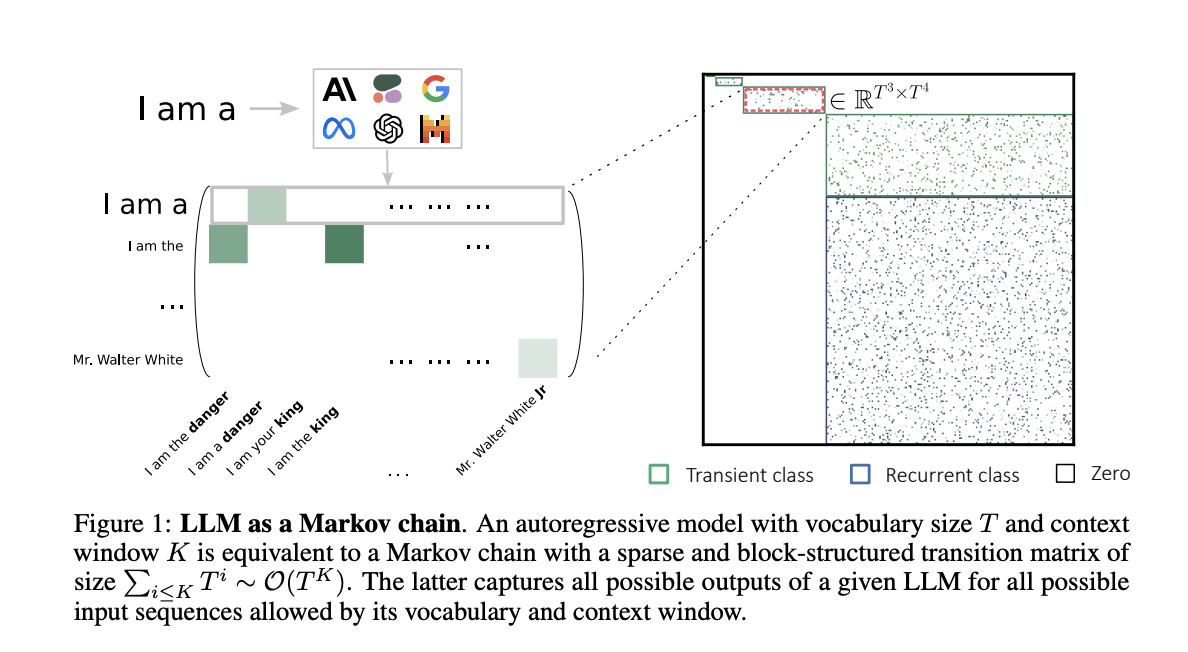

A research team has proposed a new framework that views LLMs as Markov chains, in which each token sequence (up to the length of the context window) is a state. The probability of moving from one state to another is given by the model's prediction of the next token. This view makes LLM behavior easier to analyze, helping to explain the models' prediction capabilities and how they handle sequences.
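To make the Markov chain view concrete, here is a minimal Python sketch that builds the transition matrix of a toy "LLM". It is not the authors' code. It assumes a tiny vocabulary, treats only sequences of exactly `context_window` tokens as states (a simplification: shorter sequences are left out), and replaces the neural network with a hypothetical `toy_next_token_probs` function. Sampling token `t` from a state moves the chain to the state formed by appending `t` and dropping the oldest token.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

State = Tuple[int, ...]
Matrix = List[List[float]]


def toy_next_token_probs(state: State, vocab_size: int) -> List[float]:
    """Hypothetical stand-in for an LLM: repeats the last token with probability 0.7."""
    last = state[-1]
    other = 0.3 / (vocab_size - 1)
    return [0.7 if token == last else other for token in range(vocab_size)]


def build_transition_matrix(
    vocab_size: int,
    context_window: int,
    next_token_probs: Callable[[State], List[float]],
) -> Tuple[List[State], Matrix]:
    """Build the Markov-chain transition matrix of a toy next-token model.

    Simplifying assumption: states are all sequences of exactly
    `context_window` tokens, giving vocab_size ** context_window states.
    """
    states: List[State] = list(product(range(vocab_size), repeat=context_window))
    index: Dict[State, int] = {s: i for i, s in enumerate(states)}
    matrix: Matrix = [[0.0] * len(states) for _ in states]
    for s in states:
        for token, p in enumerate(next_token_probs(s)):
            # Append the sampled token and drop the oldest one (sliding window).
            next_state = s[1:] + (token,)
            matrix[index[s]][index[next_state]] += p
    return states, matrix


if __name__ == "__main__":
    states, P = build_transition_matrix(
        vocab_size=2,
        context_window=2,
        next_token_probs=lambda s: toy_next_token_probs(s, vocab_size=2),
    )
    for s, row in zip(states, P):
        print(s, [round(p, 2) for p in row])
```

Each row sums to 1, and a state can only move to states obtained by appending one token, which is why most entries are zero. The number of states grows as `vocab_size ** context_window`, so for a real LLM the matrix is far too large to build explicitly; the sketch is only meant to build intuition.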

Key Insights from the Framework

The researchers built a transition matrix that represents an LLM and captures the probabilities of its possible output sequences. Analyzing this matrix reveals the model's long-term prediction behavior, such as how it settles into a stable (stationary) distribution over states, and shows how the temperature setting affects how efficiently the LLM explores its state space. The authors report that experiments validated the theory, showing improvements in speed and effectiveness.
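The role of temperature and the chain's long-term behavior can be illustrated with a small sketch. It assumes a context window of one token, so each state is a single token, and uses hypothetical logits (`LOGITS`) in place of a real model. Temperature divides the logits before the softmax, and power iteration (repeatedly applying the transition matrix to a distribution) approximates the stationary distribution, i.e., where the chain ends up in the long run.

```python
import math
from typing import List

Matrix = List[List[float]]

# Hypothetical next-token logits: row i holds the logits after seeing token i.
LOGITS: Matrix = [
    [2.0, 1.0, 0.0],
    [1.5, 1.0, 0.0],
    [1.0, 1.0, 1.0],
]


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def transition_matrix(logits: Matrix, temperature: float) -> Matrix:
    """Row i is the next-token distribution from state i."""
    return [softmax(row, temperature) for row in logits]


def step(dist: List[float], P: Matrix) -> List[float]:
    """One step of the chain: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def stationary_distribution(P: Matrix, tol: float = 1e-12, max_iter: int = 10_000) -> List[float]:
    """Power iteration from the uniform distribution until it stops changing."""
    dist = [1.0 / len(P)] * len(P)
    for _ in range(max_iter):
        new = step(dist, P)
        if max(abs(a - b) for a, b in zip(new, dist)) < tol:
            return new
        dist = new
    return dist


if __name__ == "__main__":
    for temperature in (0.5, 1.0, 2.0):
        pi = stationary_distribution(transition_matrix(LOGITS, temperature))
        print(temperature, [round(p, 3) for p in pi])
```

With these particular logits, a low temperature concentrates the stationary distribution on token 0, while a higher temperature pushes it toward uniform.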

Benefits of Using This Approach

Modeling LLMs as Markov chains results in:

- Faster convergence to a stable prediction state.

- Better exploration of the state space, which can enhance performance in real-world applications.

- Improved understanding of sequence generation, leading to more coherent outputs.
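The first benefit, faster convergence to a stable prediction state, can be made measurable. The sketch below again assumes a one-token context window and hypothetical logits; it counts how many steps the chain needs, starting from a single token, before its distribution is within a total variation distance `eps` of the stationary distribution. This is an illustration of the idea under those assumptions, not a reproduction of the paper's experiments.

```python
import math
from typing import List

Matrix = List[List[float]]

# Hypothetical next-token logits for a 3-token vocabulary (context window of 1).
LOGITS: Matrix = [
    [2.0, 1.0, 0.0],
    [1.5, 1.0, 0.0],
    [1.0, 1.0, 1.0],
]


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax with max subtraction for stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def step(dist: List[float], P: Matrix) -> List[float]:
    """One step of the chain: new[j] = sum_i dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def total_variation(p: List[float], q: List[float]) -> float:
    """Total variation distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def steps_to_converge(
    temperature: float, start: int = 0, eps: float = 1e-6,
    burn_in: int = 1_000, max_steps: int = 10_000,
) -> int:
    """Steps until the chain started in state `start` is within eps (TV) of stationarity."""
    P = [softmax(row, temperature) for row in LOGITS]
    # Approximate the stationary distribution by running the chain for many steps.
    pi = [1.0 / len(P)] * len(P)
    for _ in range(burn_in):
        pi = step(pi, P)
    dist = [1.0 if i == start else 0.0 for i in range(len(P))]
    for t in range(max_steps):
        if total_variation(dist, pi) < eps:
            return t
        dist = step(dist, P)
    return max_steps


if __name__ == "__main__":
    for temperature in (0.5, 1.0, 2.0):
        print(temperature, steps_to_converge(temperature))
```

For these logits the higher temperature reaches the stable state in fewer steps, because flatter rows make the chain "forget" its starting token faster. Other models and logits can behave differently, so treat this as a way to measure convergence rather than a general rule.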

Future Research Directions

This framework not only can help improve LLM efficiency but also lays the groundwork for further studies on how LLMs process and produce text in different contexts. These insights could improve performance across a wide range of natural language processing tasks.

Connect with Us

For more insights, check out the Paper. Follow us on Twitter and join our Telegram Channel and LinkedIn Group. Subscribe to our newsletter for more updates, and contact us at hello@itinai.com for personalized AI solutions.

Discover AI Solutions for Your Business

Unlock the potential of AI in your work environment:

- Identify automation opportunities in customer interactions.

- Define measurable KPIs to track your AI impact.

- Select ideal AI solutions tailored to your business needs.

- Implement gradually, starting with pilot projects.

Stay ahead in the competitive landscape with AI solutions tailored to your needs.