Understanding Embodied Artificial Intelligence

Embodied AI builds agents that act autonomously in physical or simulated environments to complete tasks. These agents learn from large datasets and use advanced models to make decisions and optimize their actions. Unlike simpler AI applications, embodied AI must handle complex sensory data and continuous interaction with its surroundings.

Key Benefits of Embodied AI

- Autonomous Task Execution: Agents can perform tasks without human intervention.

- Data-Driven Decision Making: They leverage extensive datasets to improve their performance.

- Adaptability: Agents can adjust their actions based on changing environments.

The Challenge of Scaling

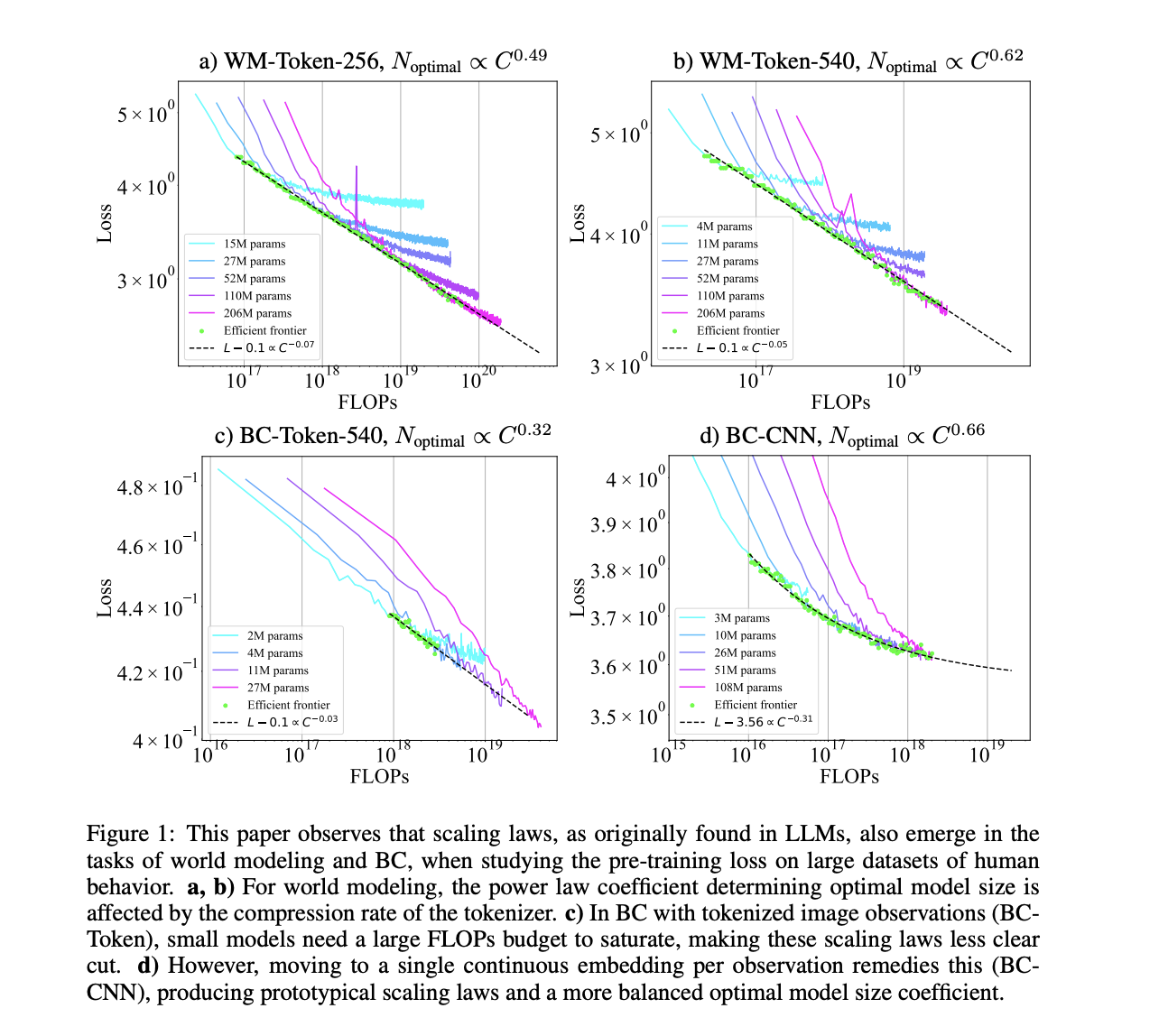

Scaling embodied AI means balancing model size against the amount of training data. Getting this balance right lets agents perform well without exceeding computational limits. Language models have established scaling guidelines, but the relationship between model size, data, and computational cost in embodied AI is less well understood.

Importance of Scaling

- Efficiency: Proper scaling leads to better performance without unnecessary resource use.

- Resource Allocation: Understanding scaling helps allocate computational resources effectively.

- Performance Optimization: Larger models can improve performance, but they need sufficient data to be effective.
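To make the trade-off concrete, here is a minimal Python sketch of how a fixed compute budget caps model size and data together. It assumes the widely used transformer approximation that training costs about 6 FLOPs per parameter per token (C ≈ 6ND); the budget and model sizes are hypothetical values chosen only for illustration, and real embodied-AI models may deviate from this approximation.

```python
# Minimal sketch: how a fixed compute budget constrains model size and data.
# Assumes the common transformer approximation C ≈ 6 * N * D, where
# C = training FLOPs, N = model parameters, D = training tokens.

def tokens_for_budget(compute_flops: float, n_params: float) -> float:
    """Training tokens affordable for a model with n_params under a FLOP budget."""
    return compute_flops / (6.0 * n_params)


budget = 1e20  # hypothetical training budget in FLOPs

for n_params in (1e8, 1e9, 1e10):
    d = tokens_for_budget(budget, n_params)
    print(f"N = {n_params:.0e} params -> D = {d:.2e} tokens")
```

Each tenfold increase in parameters cuts the affordable training tokens by the same factor, which is why model size and data have to be balanced rather than maximized independently.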

Recent Research Insights

Researchers at Microsoft have developed new scaling laws specifically for embodied AI. They focused on two main tasks: behavior cloning (where agents mimic actions) and world modeling (where agents predict changes in the environment). Their findings include:

- Model Efficiency: By testing different model configurations, they identified how to enhance performance and reduce computational demands.

- Optimal Scaling: For world modeling, increasing both model and dataset sizes leads to better results.

- Task-Specific Strategies: Behavior cloning with tokenized observations benefits from smaller models trained on larger datasets, while behavior cloning with CNN-based architectures favors larger models.
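Scaling-law studies typically express such task-specific preferences as exponents: the compute-optimal model size grows like C^a and the dataset like C^(1 - a), so their product grows with compute C. The sketch below uses purely illustrative exponents (not the values reported by the Microsoft researchers) to show how the exponent decides where extra compute goes.

```python
# Minimal sketch of compute-optimal scaling, assuming power laws
# N_opt ∝ C**a and D_opt ∝ C**(1 - a), so that N_opt * D_opt ∝ C.
# The exponents below are illustrative placeholders, NOT values from the study.

def growth_factors(compute_multiplier: float, a: float) -> tuple[float, float]:
    """Return (model-size multiplier, data multiplier) for a given compute increase."""
    model_growth = compute_multiplier ** a
    data_growth = compute_multiplier ** (1.0 - a)
    return model_growth, data_growth


hypothetical_exponents = {
    "balanced (model and data grow together)": 0.5,
    "data-heavy (smaller models, more data)": 0.3,
    "model-heavy (larger models)": 0.7,
}

for label, a in hypothetical_exponents.items():
    m, d = growth_factors(10.0, a)
    print(f"{label}: a={a}, model x{m:.2f}, data x{d:.2f}")
```

With a = 0.5, a tenfold compute increase grows both model and data by about 3.16x; a smaller exponent routes most of the extra compute into data, the "smaller model, more data" pattern the findings describe.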

Key Findings

- Balanced Scaling: For world modeling, both model and dataset should grow together.

- Behavior Cloning Preferences: For behavior cloning with tokenized observations, smaller models paired with larger datasets are optimal.

- Compression Matters: The tokenizer's compression rate affects the optimal balance; higher compression of tokenized observations can shift it toward larger models.

- Validation of Scaling Laws: Training a model larger than those used to fit the laws confirmed that the laws reliably predict its performance.
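The validation step can be pictured as fitting a power law to small-scale runs and checking its prediction against a larger run. This minimal sketch fits loss(C) = k · C^(-alpha) by least-squares regression in log-log space. The "runs" are synthetic, generated from a hypothetical law, and the plain power-law form omits the irreducible-loss term that many published scaling laws include; it illustrates the technique rather than reproducing the study's fitting procedure.

```python
import math

# Minimal sketch: fit loss(C) = k * C**(-alpha) to small-scale runs by linear
# regression in log-log space, then extrapolate to a larger compute budget.
# All "runs" here are synthetic, generated from a hypothetical law.


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(k) - alpha * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(loss_val) for loss_val in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (k, alpha)


def predict(k, alpha, compute):
    """Loss predicted by the power law at a given compute budget."""
    return k * compute ** (-alpha)


# Synthetic small-scale runs from a hypothetical law (k = 50, alpha = 0.05).
true_k, true_alpha = 50.0, 0.05
small_budgets = [1e17, 3e17, 1e18, 3e18, 1e19]
small_losses = [predict(true_k, true_alpha, c) for c in small_budgets]

k_hat, alpha_hat = fit_power_law(small_budgets, small_losses)

large_budget = 1e21  # larger than any run used in the fit
predicted = predict(k_hat, alpha_hat, large_budget)
actual = predict(true_k, true_alpha, large_budget)  # stand-in for a real large run

print(f"fitted alpha = {alpha_hat:.4f}")
print(f"predicted loss = {predicted:.4f}, 'actual' loss = {actual:.4f}")
```

In a real study, `actual` would come from training the larger model; close agreement between the two numbers is what gives confidence in the fitted law.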

Conclusion

This research enhances the field of embodied AI by applying insights from language model scaling. It allows for better prediction and management of resources, leading to more efficient and capable agents in various environments.

Next Steps

If you want to leverage AI for your business, consider the following:

- Identify Automation Opportunities: Find areas in customer interactions that can benefit from AI.

- Define KPIs: Ensure that your AI initiatives have measurable impacts.

- Select AI Solutions: Choose tools that fit your needs and allow for customization.

- Implement Gradually: Start with a pilot project, collect data, and expand wisely.

For further insights and support, connect with us at hello@itinai.com or follow us on @itinaicom.
