Language Model Scaling and Performance

Language models (LMs) are a central area of artificial intelligence research, focused on understanding and generating human language. Researchers aim to improve these models on a broad range of language tasks, including translation and creative writing. Understanding how these models scale with computational resources is essential for predicting their future capabilities and for allocating resources efficiently.

Challenges in Language Model Research

The primary challenge is understanding how model performance scales with the computational power and data used during training. Traditional approaches establish these relationships by training many models across a range of scales, which is computationally expensive and time-consuming and puts such studies out of reach for many researchers and engineers.

Frameworks and Models for Language Model Performance

Existing work provides both theory and tooling: compute scaling laws, which relate model performance to training compute; the Open LLM Leaderboard; the LM Eval Harness; and benchmarks such as MMLU, ARC-C, and HellaSwag. Together, these tools help evaluate and optimize language model performance across different computational scales and tasks.
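
Compute scaling laws are commonly written as a power law, for example loss ≈ a · C^(−b) for training compute C, which becomes a straight line on a log-log plot. The following is a minimal sketch under that simplified assumption (it omits the irreducible-loss term that many published fits include); the function names and the synthetic data are hypothetical and are not taken from any of the tools listed above.

```python
import math
from typing import List, Tuple


def fit_power_law(compute: List[float], loss: List[float]) -> Tuple[float, float]:
    """Fit loss ≈ a * compute**(-b) by least squares on (log compute, log loss).

    Simplified form (assumption): real scaling-law fits often add an
    irreducible-loss term, which this sketch leaves out.
    """
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # slope of the log-log line equals -b
    intercept = mean_y - slope * mean_x   # intercept equals log(a)
    return math.exp(intercept), -slope


def predict_loss(a: float, b: float, compute: float) -> float:
    """Extrapolate the fitted power law to a new compute budget."""
    return a * compute ** (-b)


# Hypothetical synthetic training runs generated from a = 10, b = 0.05
compute = [1e18, 1e19, 1e20, 1e21]
loss = [10 * c ** -0.05 for c in compute]
a, b = fit_power_law(compute, loss)
print(round(a, 6), round(b, 6))  # 10.0 0.05
print(predict_loss(a, b, 1e23))  # extrapolated loss at a larger, untrained scale
```

Fitting in log space turns the power law into ordinary least squares. Extrapolating the fitted line to larger C is what makes such laws useful for prediction, and it is also why they normally require training several models at different scales first.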

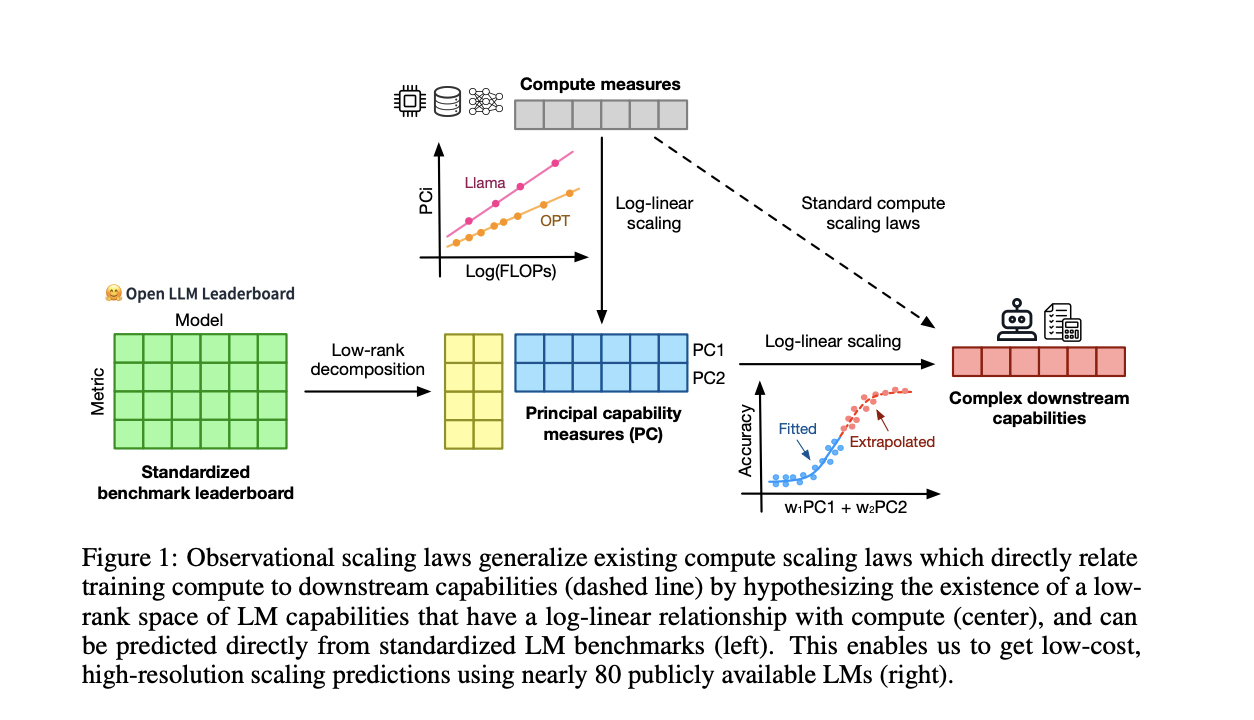

Observational Scaling Laws

Researchers have introduced observational scaling laws as a cheaper way to predict language model performance. Instead of training new models across many scales, the method builds on publicly available results from around 80 existing models, which removes the need for extensive additional training. The reported results showed high predictive accuracy both for the performance of more capable models and for the effects of post-training interventions.
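
The core idea can be illustrated with a toy pipeline: compress each existing model's benchmark scores into a single capability score, then fit the target metric as a function of that score. The sketch below assumes the capability score is the first principal component of the benchmark score matrix and that the target is linear in it; the class and function names, the plain linear fit, and the synthetic data are all hypothetical simplifications, not the published method's exact formulation.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # rows = models, columns = benchmarks


def column_means(scores: Matrix) -> List[float]:
    """Mean score of each benchmark (column) across models (rows)."""
    n = len(scores)
    return [sum(row[j] for row in scores) / n for j in range(len(scores[0]))]


def first_principal_component(centered: Matrix, iters: int = 100) -> List[float]:
    """Leading eigenvector of X^T X for a column-centered X, via power iteration."""
    n_bench = len(centered[0])
    v = [1.0] * n_bench  # start vector; assumes benchmarks are positively correlated
    for _ in range(iters):
        xv = [sum(r[j] * v[j] for j in range(n_bench)) for r in centered]  # X v
        w = [sum(centered[i][j] * xv[i] for i in range(len(centered)))     # X^T (X v)
             for j in range(n_bench)]
        norm = math.sqrt(sum(c * c for c in w))
        if norm == 0.0:
            raise ValueError("benchmark scores have no variance")
        v = [c / norm for c in w]
    return v


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit y ≈ slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


class ObservationalPredictor:
    """Hypothetical sketch: benchmark scores -> 1-D capability score -> target metric."""

    def __init__(self, scores: Matrix, target: List[float]):
        self.means = column_means(scores)
        centered = [[s - m for s, m in zip(row, self.means)] for row in scores]
        self.pc1 = first_principal_component(centered)
        caps = [self._project(row) for row in scores]
        self.slope, self.intercept = fit_line(caps, target)

    def _project(self, row: List[float]) -> float:
        # Capability score: centered benchmark vector projected onto PC1
        return sum((s - m) * p for s, m, p in zip(row, self.means, self.pc1))

    def predict(self, row: List[float]) -> float:
        """Predict the target metric for a model from its benchmark scores alone."""
        return self.slope * self._project(row) + self.intercept


# Hypothetical data: each existing model has a hidden capability z that drives
# three benchmark scores and the target metric we want to forecast.
latent = [0.1, 0.3, 0.5, 0.7]
scores = [[z, 2 * z, 0.5 * z + 1] for z in latent]
target = [3 * z + 0.1 for z in latent]
model = ObservationalPredictor(scores, target)
print(model.predict([0.9, 1.8, 1.45]))  # ≈ 2.8 for a stronger, unseen model
```

Because the fit uses only scores from models that already exist, the expensive step of training a new model family is replaced by collecting public evaluation results, which is where the efficiency gain comes from.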
