Understanding Recurrent Neural Networks (RNNs)

RNNs were among the earliest neural architectures used for natural language processing and laid the groundwork for later models. Because they compress everything they have read into a fixed-size memory state, their per-token cost does not grow with sequence length, which in principle makes them well suited to long sequences. In practice, however, RNNs have struggled with long contexts, and their performance often degrades.

Challenges of RNNs

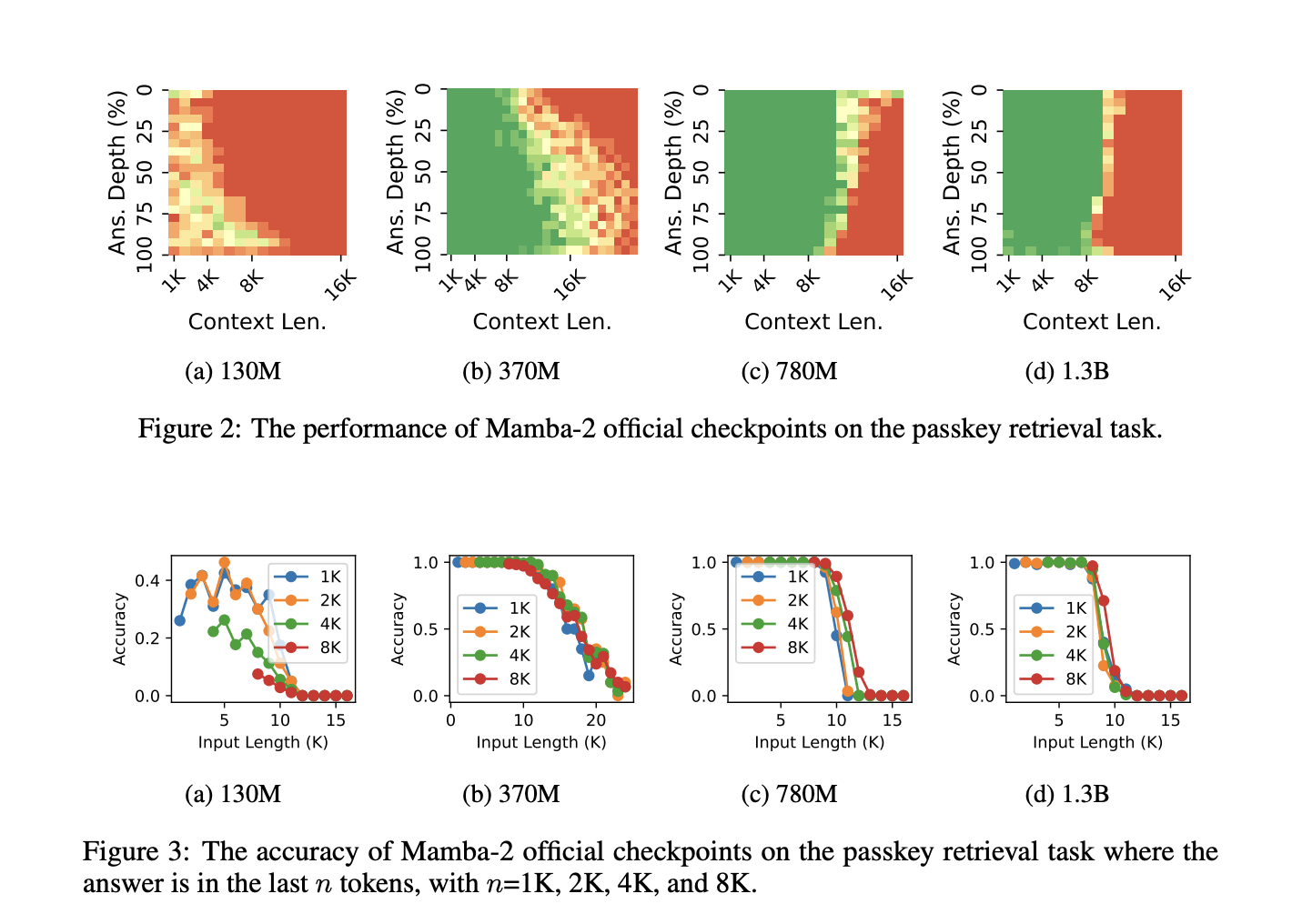

As context length increases, RNNs’ effectiveness drops sharply. For instance, recent state-of-the-art RNN models such as Mamba-1 perform poorly on sequences longer than those seen during training, and training lengths are often under 10,000 tokens. Even with more computational resources, RNNs fail to generalize well to longer sequences.

The Rise of Transformers

Transformers and other attention-based models emerged to address these limitations, and they have shown that they can process long sequences of thousands or even millions of tokens. That performance made them the preferred choice for language modeling.

Recent Research on RNNs

Researchers from Tsinghua University conducted a study to explore the issues with RNNs. They identified a critical problem called “State Collapse,” which hindered the performance of RNNs in long-context tasks.

Key Findings

- Because their memory state has a fixed size, RNNs can only remember a finite number of tokens, so they forget earlier content once the context grows beyond the lengths they were trained on.

- This behavior was likened to students cramming for exams, where lack of consistent study results in poor performance.

- The research revealed that certain outlier values in RNN memory states were responsible for this collapse, causing other memory channels to diminish.
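
The finite-memory finding can be illustrated with a toy recurrence. The sketch below is an illustration only, not the update rule used by Mamba or by the study: it assumes a scalar state `h_t = decay * h_{t-1} + x_t` with a constant decay, and the function names and the 0.01 threshold are hypothetical. It shows how the weight of each past token in the final state shrinks geometrically, so only a bounded number of recent tokens still matter.

```python
import math
from typing import List


def token_weights(decay: float, seq_len: int) -> List[float]:
    """Weight each input token carries in the final state.

    For h_t = decay * h_{t-1} + x_t, the token at position k contributes
    decay ** (seq_len - 1 - k) to the last state.
    """
    return [decay ** (seq_len - 1 - k) for k in range(seq_len)]


def effective_memory(decay: float, threshold: float = 0.01) -> int:
    """Count the most recent tokens whose weight is at least `threshold`.

    A token that is n steps old has weight decay ** n, and
    decay ** n >= threshold  <=>  n <= log(threshold) / log(decay).
    Ages 0..n_max qualify, hence the + 1.
    Assumes 0 < decay < 1 and 0 < threshold < 1.
    """
    n_max = math.floor(math.log(threshold) / math.log(decay))
    return n_max + 1
```

With `decay = 0.9` and the default threshold, `effective_memory` returns 44: anything older than the last 44 tokens contributes less than 1% of its original value, no matter how long the input is.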

Proposed Solutions

The authors suggested several methods to enhance RNN performance:

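- Forget More and Remember Less: Makes the model retain less of its past memory and write less new information into it, so the state does not become overloaded.

- State Normalization: Normalizes memory states so that their magnitude stays bounded.

- Sliding Window by State Difference: Reformulates memory management as a sliding window, so the state reflects only the most recent tokens.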

- Continual Training: Trains RNNs on longer context lengths beyond their initial limits.
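
Two of the methods above can be sketched on a toy model. This is a hedged illustration under stated assumptions, not the authors' implementation: it assumes a scalar recurrence `h_t = decay * h_{t-1} + x_t` with a constant decay (real models such as Mamba-2 use larger states and input-dependent decay), and the names `run_recurrence`, `windowed_state`, `normalize_state`, and `max_norm` are hypothetical. The first helper shows how a sliding window can be obtained as a difference of two states, and the second shows one simple way to keep a state vector's norm bounded.

```python
import math
from typing import List, Sequence


def run_recurrence(xs: Sequence[float], decay: float) -> List[float]:
    """Return every state of h_t = decay * h_{t-1} + x_t, starting from h = 0."""
    states, h = [], 0.0
    for x in xs:
        h = decay * h + x
        states.append(h)
    return states


def windowed_state(states: Sequence[float], decay: float, t: int, window: int) -> float:
    """State at step t that covers only the last `window` tokens.

    states[t] sums decay ** (t - k) * x_k over all k <= t. Subtracting
    decay ** window * states[t - window] cancels every term with
    k <= t - window, leaving exactly the last `window` tokens.
    """
    if t < window:
        # Fewer than `window` tokens precede step t, so nothing to subtract.
        return states[t]
    return states[t] - (decay ** window) * states[t - window]


def normalize_state(state: Sequence[float], max_norm: float) -> List[float]:
    """Rescale a state vector so its Euclidean norm is at most `max_norm`."""
    norm = math.sqrt(sum(v * v for v in state))
    if norm <= max_norm:
        return list(state)
    scale = max_norm / norm
    return [v * scale for v in state]
```

For example, with inputs `[1.0, 2.0, 3.0, 4.0]` and `decay = 0.5`, the full state at the last step is 6.125, while `windowed_state(states, 0.5, 3, 2)` gives 5.5, which equals `0.5 * 3.0 + 4.0`, the state a fresh model would have after reading only the last two tokens.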

Results and Insights

The researchers tested these methods on Mamba-2 and reported significant improvements, including the ability to handle up to 1 million tokens. The 370M-parameter Mamba-2 model achieved near-perfect accuracy on key retrieval tasks, outperforming equivalent transformer models.

Conclusion

This study indicates that RNNs still hold potential for long-context modeling. Much as a student needs guidance to excel, RNNs need the right training and adjustments to overcome their limitations.
