Enhancing Large Language Models’ Spatial Reasoning Abilities

Large language models (LLMs) have made significant progress across a wide range of tasks, showing reasoning skills that are considered crucial for progress toward Artificial General Intelligence (AGI) and for applications in robotics and navigation.

Understanding Spatial Reasoning

Spatial reasoning covers both quantitative aspects, such as distances and angles, and qualitative aspects, such as relative positions (e.g., “near” or “inside”). Humans handle both well, but LLMs often struggle, particularly when they have to recognize complex relationships among several objects. This gap calls for better methods to strengthen spatial reasoning in LLMs.
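The quantitative/qualitative distinction can be made concrete with a small sketch: given 2D coordinates for two objects, the quantitative description is a distance and an angle, while the qualitative description maps the same numbers to words such as "above" or "near". The coordinate frame, the direction words, and the `near_threshold` cut-off are illustrative assumptions, not something taken from the research.

```python
import math


def describe(a, b, near_threshold=2.0):
    """Describe where point b lies relative to point a.

    Points are (x, y) pairs with y growing upward. The near/far threshold
    is an arbitrary illustrative choice.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]

    # Quantitative aspects: exact distance and angle.
    distance = math.hypot(dx, dy)
    angle = math.degrees(math.atan2(dy, dx))  # 0 = east, counter-clockwise positive

    # Qualitative aspects: coarse direction words and a near/far judgement.
    parts = []
    if dy > 0:
        parts.append("above")
    elif dy < 0:
        parts.append("below")
    if dx > 0:
        parts.append("right of")
    elif dx < 0:
        parts.append("left of")
    direction = " and ".join(parts) or "overlapping"
    proximity = "near" if distance <= near_threshold else "far from"

    return {"distance": distance, "angle_deg": angle,
            "direction": direction, "proximity": proximity}


print(describe((0, 0), (3, 4)))
```

The qualitative labels throw information away on purpose: many different coordinates map to "above and right of", which is exactly the kind of coarse relation that text-based spatial questions tend to use.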

Limitations of Traditional Approaches

Conventional approaches prompt LLMs directly with simple instructions, but they struggle on complex tasks, especially on datasets such as StepGame and SparQA that require multi-step reasoning. Techniques such as Chain-of-Thought (CoT) prompting and Visualization-of-Thought have been proposed, yet challenges remain because these methods have been tested only to a limited extent and effective strategies are still underused.
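Multi-step reasoning here means that no single sentence answers the question: the answer only emerges after chaining several stated relations. The sketch below illustrates that multi-hop structure under a simplifying assumption: each stated relation is one unit step on a grid, and the answer is the direction label of the resulting offset. The labels are loosely modeled on StepGame-style directions, and `locate` and `relation` are hypothetical helpers, not part of the benchmark or the study.

```python
from collections import deque

# Assumption: every stated relation is exactly one unit step on a grid.
OFFSETS = {
    "left": (-1, 0), "right": (1, 0), "above": (0, 1), "below": (0, -1),
    "upper-left": (-1, 1), "upper-right": (1, 1),
    "lower-left": (-1, -1), "lower-right": (1, -1), "overlap": (0, 0),
}
LABELS = {offset: name for name, offset in OFFSETS.items()}


def sign(v):
    return (v > 0) - (v < 0)


def locate(facts, origin):
    """Place every object reachable from `origin` on the grid.

    A fact (x, rel, y) reads "x is <rel> of y", i.e. pos[x] = pos[y] + OFFSETS[rel].
    """
    edges = {}
    for x, rel, y in facts:
        dx, dy = OFFSETS[rel]
        edges.setdefault(y, []).append((x, dx, dy))    # step from y to x
        edges.setdefault(x, []).append((y, -dx, -dy))  # inverse step from x to y
    pos = {origin: (0, 0)}
    queue = deque([origin])
    while queue:  # breadth-first search chains the relations hop by hop
        node = queue.popleft()
        px, py = pos[node]
        for nxt, dx, dy in edges.get(node, []):
            if nxt not in pos:
                pos[nxt] = (px + dx, py + dy)
                queue.append(nxt)
    return pos


def relation(facts, a, b):
    """Answer "What is the relation of a to b?" by chaining the facts."""
    ax, ay = locate(facts, b)[a]  # b sits at the origin
    return LABELS[(sign(ax), sign(ay))]


story = [("A", "left", "B"), ("B", "above", "C"), ("C", "left", "D")]
print(relation(story, "A", "D"))
```

Relating A to D takes three hops (A to B, B to C, C to D), which is the kind of chained inference that simple prompting tends to get wrong as the number of hops grows.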

A New Framework for Improvement

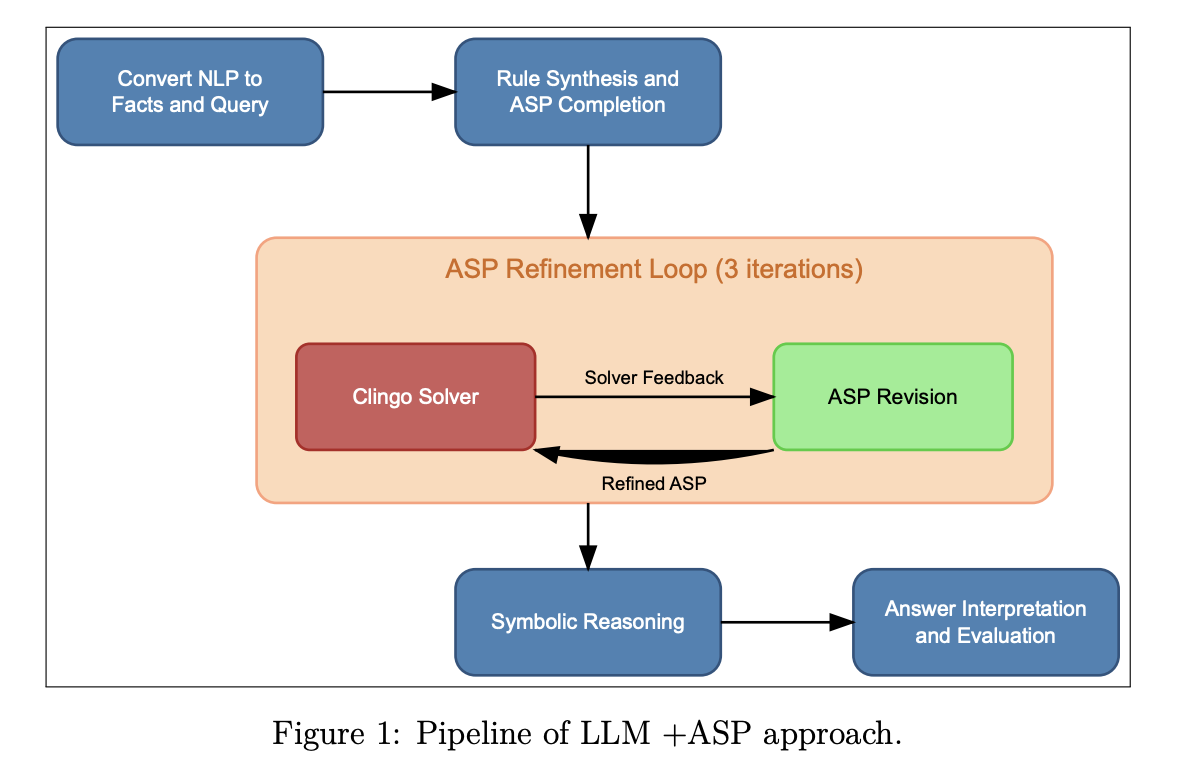

Researchers from the University of Stuttgart have introduced a systematic neural-symbolic framework that improves LLMs’ spatial reasoning by combining strategic prompting with symbolic reasoning. The approach adds feedback loops and verification based on Answer Set Programming (ASP), and it improves performance on complex tasks across several LLM architectures.
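A feedback loop with symbolic verification can be sketched roughly as follows: the model proposes facts, a symbolic checker looks for contradictions, and any complaints are sent back to the model for another attempt. In the framework described here the verification step uses ASP; in this sketch a small hand-written consistency check stands in for it. `fake_llm` is a hypothetical stub for a real model call, and the prompt wording, the four-relation vocabulary, and the `max_rounds` limit are all assumptions.

```python
from typing import Callable

Fact = tuple[str, str, str]  # (subject, relation, object): "subject is <relation> of object"

# Assumed vocabulary: only these four relations are understood by the checker.
OPPOSITE = {"left": "right", "right": "left", "above": "below", "below": "above"}


def verify(facts: list[Fact]) -> list[str]:
    """Symbolic check (stand-in for ASP): report contradictory directions."""
    normalized = set()
    for x, rel, y in facts:
        normalized.add((x, rel, y))
        normalized.add((y, OPPOSITE[rel], x))  # "x left of y" also means "y right of x"
    errors = []
    for x, rel, y in sorted(normalized):
        # Report each contradiction once per ordered pair ("left" < "right", "above" < "below").
        if rel < OPPOSITE[rel] and (x, OPPOSITE[rel], y) in normalized:
            errors.append(f"{x} cannot be both {rel} and {OPPOSITE[rel]} of {y}")
    return errors


def solve_with_feedback(story: str, llm: Callable[[str], list[Fact]],
                        max_rounds: int = 3) -> list[Fact]:
    prompt = f"Extract spatial facts from: {story}"
    for _ in range(max_rounds):
        facts = llm(prompt)
        errors = verify(facts)
        if not errors:
            return facts
        # Feedback loop: return the checker's complaints to the model.
        prompt += "\nYour previous facts were inconsistent: " + "; ".join(errors)
    raise ValueError("no consistent set of facts after feedback")


def fake_llm(prompt: str) -> list[Fact]:
    """Hypothetical model: errs on the first try, corrects itself after feedback."""
    if "inconsistent" in prompt:
        return [("A", "left", "B"), ("C", "right", "B")]
    return [("A", "left", "B"), ("B", "left", "A")]


print(solve_with_feedback("A is left of B; C is right of B.", fake_llm))
```

Keeping the checker symbolic means its verdicts are deterministic and explainable, so the feedback the model receives names the exact conflict rather than just "wrong answer".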

Research and Methodology

The study used two datasets: StepGame, which contains synthetic spatial questions, and SparQA, which contains complex text-based questions. The researchers evaluated three methods:

- ASP for logical reasoning

- Combined LLM+ASP pipeline with DSPy optimization

- Facts + Logical Rules, a method that embeds logical rules directly into the prompt
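The idea of embedding rules into the prompt can be illustrated by contrasting a direct prompt with one that lists facts and rules explicitly. This is a minimal sketch: the rule wording, the prompt layout, and the helper names `build_direct_prompt` and `build_facts_rules_prompt` are hypothetical, and the study's actual prompts are not reproduced here.

```python
# Hypothetical rule set for illustration; not the study's actual wording.
RULES = [
    "If X is left of Y, then Y is right of X.",
    "If X is above Y, then Y is below X.",
    "If X is left of Y and Y is left of Z, then X is left of Z.",
]


def build_direct_prompt(story: str, question: str) -> str:
    """Baseline: the model sees only the story and the question."""
    return f"{story}\n\nQuestion: {question}\nAnswer:"


def build_facts_rules_prompt(facts: list[tuple[str, str, str]], question: str,
                             rules: list[str] = RULES) -> str:
    """Facts + Rules style: list the facts, then the rules the model should apply."""
    fact_lines = "\n".join(f"- {x} is {rel} {y}." for x, rel, y in facts)
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        f"Facts:\n{fact_lines}\n\n"
        f"Rules:\n{rule_lines}\n\n"
        f"Question: {question}\n"
        "Apply the rules to the facts step by step, then state the final answer."
    )


facts = [("A", "left of", "B"), ("B", "left of", "C")]
print(build_facts_rules_prompt(facts, "What is the relation of A to C?"))
```

The design choice is that no external solver is involved: the rules only guide the model's own reasoning, which makes this variant cheaper to run than a full LLM + ASP pipeline.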

The implementation relied on tools such as Clingo, DSPy, and LangChain, and models including DeepSeek and GPT-4o mini were evaluated using accuracy as the metric.
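Clingo is an ASP system: it takes facts and rules and computes answer sets. For a program without negation, the unique answer set is the least model, which naive forward chaining reaches as a fixpoint. The sketch below uses plain Python in place of Clingo to show what that inference produces for a few spatial rules; the rules themselves (written in ASP syntax in the comments) are illustrative assumptions, not the study's rule base.

```python
# Illustrative rules in ASP syntax (an assumption, not the study's rule base):
#   right(Y,X) :- left(X,Y).
#   left(Y,X)  :- right(X,Y).
#   left(X,Z)  :- left(X,Y), left(Y,Z).
# Without negation, the unique answer set equals the least model, computed
# here by applying the rules repeatedly until nothing new is derived.

Atom = tuple[str, str, str]  # (predicate, first argument, second argument)


def least_model(facts: set[Atom]) -> set[Atom]:
    model = set(facts)
    while True:
        derived = set()
        for pred, x, y in model:
            if pred == "left":
                derived.add(("right", y, x))  # right(Y,X) :- left(X,Y).
            elif pred == "right":
                derived.add(("left", y, x))   # left(Y,X) :- right(X,Y).
        for p1, x, y in model:
            for p2, y2, z in model:
                if p1 == "left" and p2 == "left" and y == y2:
                    derived.add(("left", x, z))  # transitivity of left/2
        if derived <= model:  # nothing new: fixpoint reached
            return model
        model |= derived


model = least_model({("left", "a", "b"), ("left", "b", "c")})
print(sorted(model))
```

A real ASP solver also handles negation, choice rules, and constraints, which this naive loop does not; the sketch only conveys why symbolic inference gives complete, checkable answers for the relations it covers.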

Key Findings

The “LLM + ASP” pipeline significantly improved accuracy on the SparQA dataset, particularly for certain question types. The “Facts + Logical Rules” approach outperformed direct prompting by more than 5% in accuracy. Overall, the framework achieved:

- Over 80% accuracy on StepGame

- Around 60% accuracy on SparQA

- 40-50% improvement on StepGame and 3-13% on SparQA compared to baseline prompting

Future Directions

The proposed framework shows promise, but there is still room to improve its performance on complex datasets such as SparQA. The research lays a foundation for future work on spatial reasoning in LLMs.

