Understanding Probabilistic Diffusion Models

Probabilistic diffusion models are a leading approach to generating complex data such as images and videos. They turn random noise into structured, realistic samples in two phases: a forward phase that gradually adds noise to the data, and a reverse phase that removes the noise step by step to recover a coherent sample. The reverse phase typically needs many denoising steps, which makes sampling slow.
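To make the forward phase concrete, here is a minimal NumPy sketch of the closed-form noising step used in DDPM-style models. The linear noise schedule, the step count, and the function names `make_alpha_bar` and `forward_noise` are illustrative assumptions, not details taken from the OCM paper.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal retention for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def forward_noise(x0, t, alpha_bar, rng):
    """Sample x_t given clean data x0: scale the signal down and add Gaussian noise."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps

# Usage: noise a toy 8x8 "image" at an early and a late step.
rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = np.ones((8, 8))
x_early, _ = forward_noise(x0, 10, alpha_bar, rng)
x_late, _ = forward_noise(x0, 999, alpha_bar, rng)
print("signal kept at t=10: ", np.sqrt(alpha_bar[10]))
print("signal kept at t=999:", np.sqrt(alpha_bar[999]))
```

At the last step almost no signal remains, which is why the reverse phase has to rebuild the sample from what is essentially pure noise.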

Challenges in Current Models

A key issue with existing diffusion models is that producing high-quality samples is slow. Two factors drive this: the large number of steps in the reverse process, and the way the covariance of each denoising step is set, which is often fixed in advance or only roughly approximated. Better covariance predictions allow larger denoising steps, so sampling can be sped up without sacrificing quality. Researchers are therefore working on covariance approximations that are both cheaper and more accurate.

Innovative Solution: Optimal Covariance Matching (OCM)

A research team from Imperial College London, University College London, and the University of Cambridge has developed a new method called Optimal Covariance Matching (OCM). This technique improves covariance estimation by directly deriving it from the model’s score function, eliminating the need for complex data-driven approximations. OCM reduces prediction errors and enhances sampling quality, making the process more efficient.
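As a sketch of what deriving the covariance from the score function can look like, assume the common variance-preserving forward process $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$. This notation is an assumption made for illustration and is not taken from the article. A standard second-order extension of Tweedie's formula then relates the posterior mean and covariance of the clean data to the score $\nabla_{x_t}\log p_t(x_t)$ and its Jacobian:

$$
\mathbb{E}[x_0 \mid x_t] = \frac{x_t + (1-\bar\alpha_t)\,\nabla_{x_t}\log p_t(x_t)}{\sqrt{\bar\alpha_t}},
\qquad
\mathrm{Cov}[x_0 \mid x_t] = \frac{1-\bar\alpha_t}{\bar\alpha_t}\Big(I + (1-\bar\alpha_t)\,\nabla^2_{x_t}\log p_t(x_t)\Big).
$$

The Hessian term is the Jacobian of the score, so a learned score network in principle already determines the covariance. The exact parameterization used in the paper may differ from this sketch.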

Benefits of OCM Methodology

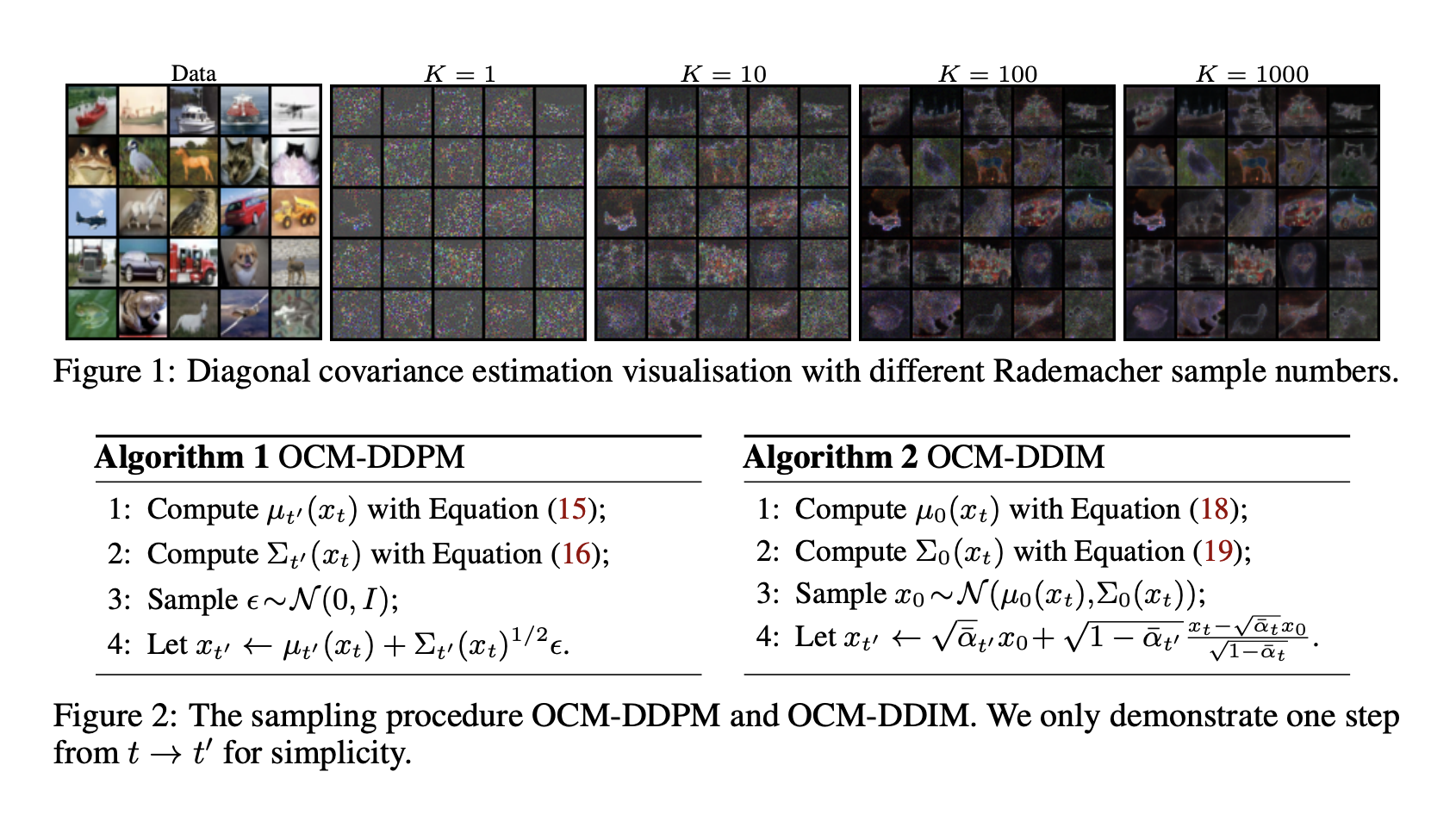

The OCM approach simplifies covariance estimation by training a neural network to predict the diagonal of the Hessian, which yields accurate covariance approximations with less computation. Earlier approaches often require heavy calculations, especially for high-dimensional data, where a full Hessian is expensive to compute and store. Keeping only the diagonal reduces these demands, leading to faster sampling and lower storage needs. The method therefore improves prediction accuracy while also shortening overall sampling time.
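One common way to obtain the diagonal of a Hessian without ever forming the full matrix is a Hutchinson-style estimator, which only needs Hessian-vector products with random sign vectors. The sketch below illustrates that general idea on a small explicit matrix. Whether OCM's training target matches this estimator exactly is an assumption, and `hutchinson_diag` is a hypothetical helper name.

```python
import numpy as np

def hutchinson_diag(matvec, dim, num_probes, rng):
    """Estimate diag(H) from products H @ v using random +/-1 probe vectors.

    For Rademacher v, E[v * (H @ v)] equals diag(H), because the
    off-diagonal terms H_ij * v_i * v_j average to zero.
    """
    estimate = np.zeros(dim)
    for _ in range(num_probes):
        v = rng.choice([-1.0, 1.0], size=dim)
        estimate += v * matvec(v)
    return estimate / num_probes

# Usage: a small symmetric matrix stands in for the Hessian of log p_t.
rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))
H = (A + A.T) / 2.0
approx = hutchinson_diag(lambda v: H @ v, dim=5, num_probes=20000, rng=rng)
print("max abs error:", np.max(np.abs(approx - np.diag(H))))
```

In a real model the `matvec` callback would be an automatic-differentiation Jacobian-vector product of the score network, and a second network could be regressed onto these noisy per-probe estimates so that sampling needs only one forward pass.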

Performance Improvements

Tests show that OCM significantly enhances the quality and efficiency of generated samples. For example, on the CIFAR10 dataset, OCM achieved a Fréchet Inception Distance (FID, where lower is better) of 38.88 with just five denoising steps, outperforming the traditional Denoising Diffusion Probabilistic Model (DDPM), which scored 58.28. With ten steps, OCM improved further to 21.60, while DDPM reached 34.76. This indicates that OCM improves sample quality at a fixed, small step budget, which in turn lowers the compute needed to reach a given quality level.
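To put the reported numbers in perspective, the short snippet below computes the relative FID reduction of OCM over DDPM at each step count. It uses only the four scores quoted above; the helper name `relative_reduction` is illustrative.

```python
def relative_reduction(baseline, improved):
    """Fraction by which `improved` lowers the `baseline` score."""
    return (baseline - improved) / baseline

# FID scores on CIFAR10 as quoted in the text (lower is better).
fid = {
    5: {"DDPM": 58.28, "OCM": 38.88},
    10: {"DDPM": 34.76, "OCM": 21.60},
}

reductions = {
    steps: relative_reduction(scores["DDPM"], scores["OCM"])
    for steps, scores in fid.items()
}

for steps, r in reductions.items():
    print(f"{steps} steps: FID reduced by {r:.1%}")
```

In both settings the reduction is roughly a third or more, and OCM at five steps (38.88) comes close to DDPM at ten steps (34.76).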

Conclusion

This research presents an innovative way to optimize covariance estimation, leading to high-quality data generation with fewer steps and greater efficiency. OCM merges computational efficiency with high output quality, making it a valuable advancement for applications requiring rapid and high-quality data generation.
