Long-form RobustQA Dataset and RAG-QA Arena

Practical Solutions and Value

Retrieval-augmented generation (RAG) improves question answering (QA) in natural language processing (NLP) by retrieving the passages from a document corpus that are most relevant to a question and passing them to a large language model (LLM), which then generates a response grounded in that retrieved context.
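
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the system used in the paper: it assumes a simple bag-of-words cosine similarity in place of a real retriever, and `retrieve` and `build_prompt` (including its prompt template) are hypothetical helpers. The LLM call itself is omitted; the sketch only builds the prompt an LLM would receive.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it on non-alphanumeric characters.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    # Hypothetical retriever: score every passage against the query
    # and keep the k highest-scoring ones.
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Hypothetical prompt template: numbered passages followed by the question.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return f"Answer using only the passages below.\n{context}\n\nQuestion: {query}\nAnswer:"


corpus = [
    "Python lists are mutable sequences.",
    "The Eiffel Tower is in Paris.",
    "Tuples in Python are immutable sequences.",
]
top = retrieve("Where is the Eiffel Tower?", corpus, k=1)
prompt = build_prompt("Where is the Eiffel Tower?", top)
```

Here `top` holds only the Eiffel Tower passage, and irrelevant passages never reach the prompt. A production RAG system would typically replace the term-overlap scoring with a dense or sparse retriever over a large index.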

Challenges in QA

Existing QA datasets have limited scope and often focus on short, extractive answers, which makes it hard to evaluate how well LLMs generalize across domains. This calls for more comprehensive evaluation frameworks.

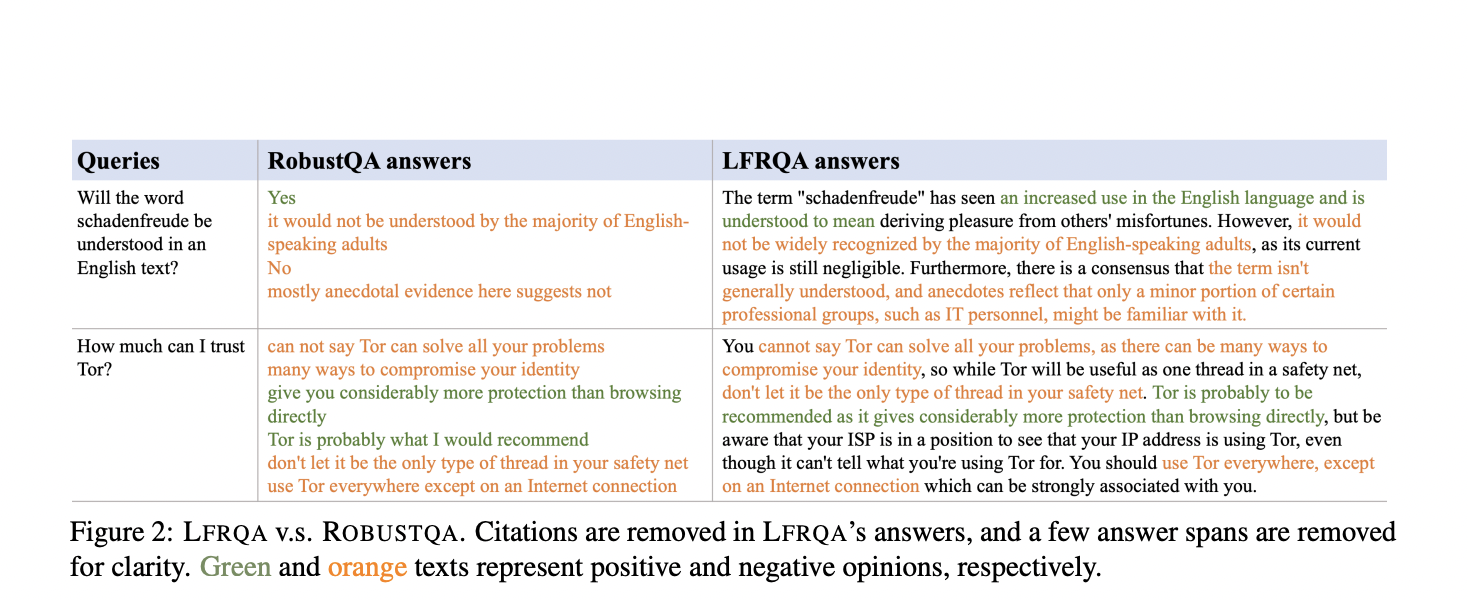

Introduction of Long-form RobustQA (LFRQA)

LFRQA, introduced by researchers from AWS AI Labs, Google, Samaya.ai, Orby.ai, and the University of California, Santa Barbara, covers 26,000 queries across seven domains to evaluate the cross-domain generalization capabilities of LLM-based RAG-QA systems.

Key Features of LFRQA

LFRQA provides long-form answers that are grounded in a document corpus and written to read as a single coherent response rather than a set of extracted spans. Its queries cover multiple domains and draw on annotations from several sources, which makes it a valuable tool for benchmarking QA systems.

RAG-QA Arena Framework

The RAG-QA Arena framework evaluates QA systems by comparing their answers against LFRQA's answers, which makes it a more accurate and more challenging benchmark. Extensive experiments showed a high correlation between model-based and human evaluations, supporting the validity of the framework.
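
To make "agreement between model-based and human evaluations" concrete, the sketch below compares a model judge's pairwise verdicts with human verdicts on the same comparisons. The article does not say which correlation or agreement statistic the authors used; percent agreement and Cohen's kappa are shown here only as common choices, and the label names `system`, `lfrqa`, and `tie` are assumptions.

```python
from collections import Counter


def percent_agreement(judge: list[str], human: list[str]) -> float:
    # Fraction of comparisons where the model judge and the human chose the same label.
    if len(judge) != len(human) or not judge:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(j == h for j, h in zip(judge, human)) / len(judge)


def cohens_kappa(judge: list[str], human: list[str]) -> float:
    # Agreement corrected for chance, using each rater's own label distribution.
    n = len(judge)
    p_o = percent_agreement(judge, human)  # also validates the inputs
    jc, hc = Counter(judge), Counter(human)
    p_e = sum(jc[label] * hc[label] for label in jc.keys() | hc.keys()) / (n * n)
    # If chance agreement is total, both raters used one identical label everywhere.
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


# Hypothetical labels: which answer won each pairwise comparison.
judge = ["system", "lfrqa", "lfrqa", "tie", "lfrqa"]
human = ["system", "lfrqa", "system", "tie", "lfrqa"]
```

On these toy labels the raters agree on 4 of 5 comparisons (0.8), and kappa is about 0.69 once chance agreement is discounted. Kappa is the more conservative of the two, since a judge that always picks the majority label can score high raw agreement while carrying little information.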

Quality Assurance of LFRQA

LFRQA's quality is maintained through rigorous annotation procedures and quality-control checks, yielding a dataset that can reliably benchmark the cross-domain robustness of QA systems.

Performance Results

In RAG-QA Arena, LFRQA's human-written answers were preferred over answers from leading LLMs in 59.1% of comparisons. The evaluation also revealed a 25.1% performance gap between in-domain and out-of-domain data, underscoring the importance of cross-domain evaluation when developing robust QA systems.
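
The sketch below shows how a preference rate and an in-domain versus out-of-domain gap could be computed from pairwise judgments and per-domain scores. The article does not say how ties were handled or whether the 25.1% gap is an absolute or a relative difference, so both options are exposed as parameters. The function names and the `lfrqa`/`tie` labels are hypothetical, and the inputs in the tests are toy values, not results from the paper.

```python
def preference_rate(judgments: list[str], label: str = "lfrqa",
                    count_ties_as_half: bool = False) -> float:
    # Share of pairwise comparisons won by `label`; ties optionally count as half a win.
    if not judgments:
        raise ValueError("no judgments to aggregate")
    wins = sum(j == label for j in judgments)
    ties = sum(j == "tie" for j in judgments)
    bonus = 0.5 * ties if count_ties_as_half else 0.0
    return (wins + bonus) / len(judgments)


def domain_gap(in_domain_score: float, out_of_domain_score: float,
               relative: bool = False) -> float:
    # Absolute gap (same units as the scores) or relative drop as a fraction
    # of the in-domain score.
    gap = in_domain_score - out_of_domain_score
    if not relative:
        return gap
    if in_domain_score == 0:
        raise ValueError("relative gap is undefined for a zero in-domain score")
    return gap / in_domain_score
```

For example, `preference_rate(["lfrqa", "lfrqa", "system", "tie"])` returns 0.5, or 0.625 with `count_ties_as_half=True`. Exposing these choices explicitly matters because the same set of judgments can yield noticeably different headline numbers.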

Insights and Conclusion

The study reports detailed performance metrics that offer insight into the effectiveness of QA systems and can guide future improvements. Together, LFRQA and RAG-QA Arena provide a long-form, cross-domain benchmark that contributes to the advancement of NLP and QA research.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper Introduces Long-form RobustQA Dataset and RAG-QA Arena for Cross-Domain Evaluation of Retrieval-Augmented Generation Systems appeared first on MarkTechPost.