Evaluating Large Language Models (LLMs) for Real-World Use

Understanding how well large language models (LLMs) work in real-life situations is crucial for their effective use. A major challenge is that many evaluations rely on fixed datasets, which can lead to misleading performance results. Traditional testing methods often overlook how well a model can adapt to feedback or clarify its responses, making them less relevant to actual scenarios. To address this, we need a more flexible and ongoing evaluation approach.

Limitations of Traditional Evaluation Methods

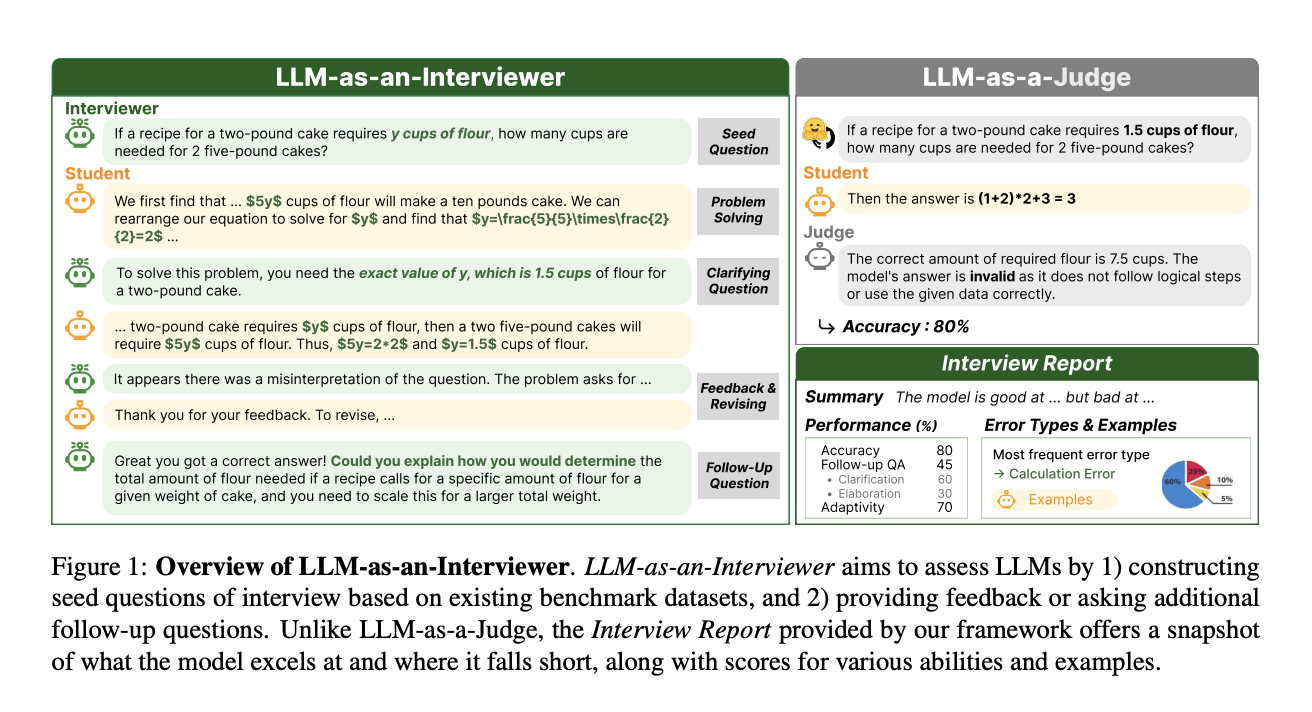

Conventional approaches such as “LLM-as-a-Judge” score model outputs on static datasets. Although their scores align reasonably well with human judgment, they show known biases, such as favoring longer responses, and their scoring can be inconsistent. They also struggle to assess multi-turn conversations, where adaptability is key, so they give an incomplete picture of an LLM’s abilities.

Introducing LLM-AS-AN-INTERVIEWER

Researchers from KAIST, Stanford, Carnegie Mellon, and Contextual AI have developed a new evaluation framework called LLM-AS-AN-INTERVIEWER. This innovative approach simulates human interviews by adjusting questions based on the model’s performance, allowing for a more detailed assessment of its capabilities. This dynamic method captures important behaviors like refining responses and effectively handling follow-up questions.

How the Framework Works

The evaluation process consists of three stages:

- Problem Setup: The interviewer creates diverse and challenging questions.

- Feedback and Revision: The interviewer provides feedback on the model’s answers, and the model revises them in response.

- Follow-Up Questioning: The interviewer asks follow-up questions that test additional aspects of the model’s reasoning and knowledge.

At the end of the process, an “Interview Report” is generated, summarizing performance metrics, error analysis, and insights into the model’s strengths and weaknesses. This report offers valuable information on how well the model can perform in real-world situations.
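The three stages and the final report can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the authors' implementation: `run_interview`, `InterviewReport`, and every callable passed in are hypothetical names, and the stub functions at the bottom stand in for real LLM calls.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class InterviewReport:
    """Hypothetical container for what an interview report might summarize."""
    question: str
    attempts: List[Tuple[str, bool]] = field(default_factory=list)   # (answer, is_correct)
    follow_ups: List[Tuple[str, str]] = field(default_factory=list)  # (question, answer)

    @property
    def solved_at(self) -> Optional[int]:
        """1-based attempt at which the answer first became correct, else None."""
        for i, (_, ok) in enumerate(self.attempts, start=1):
            if ok:
                return i
        return None


def run_interview(
    seed_question: str,
    setup: Callable[[str], str],
    answer: Callable[[str, Optional[str]], str],
    grade: Callable[[str, str], bool],
    feedback: Callable[[str, str], str],
    follow_up: Callable[[str, str], str],
    max_revisions: int = 2,
) -> InterviewReport:
    # Stage 1: problem setup (the interviewer prepares the question).
    question = setup(seed_question)
    report = InterviewReport(question)

    # Stage 2: feedback and revision (answer, grade, give feedback, retry).
    hint: Optional[str] = None
    for _ in range(max_revisions + 1):
        response = answer(question, hint)
        ok = grade(question, response)
        report.attempts.append((response, ok))
        if ok:
            break
        hint = feedback(question, response)

    # Stage 3: follow-up questioning on the final answer.
    fq = follow_up(question, report.attempts[-1][0])
    report.follow_ups.append((fq, answer(fq, None)))
    return report


# Stubs so the sketch runs without any model API (all behavior is made up).
def stub_setup(q): return q + " Show your reasoning."
def stub_answer(q, hint): return "12" if hint else "10"
def stub_grade(q, r): return r == "12"
def stub_feedback(q, r): return "Re-check the multiplication."
def stub_follow_up(q, r): return "Why does that method work?"


report = run_interview("What is 3 * 4?", stub_setup, stub_answer,
                       stub_grade, stub_feedback, stub_follow_up)
print(report.solved_at)  # 2: wrong on the first try, correct after feedback
```

Recording every attempt, rather than only the final answer, is what lets a report separate "correct immediately" from "correct after feedback".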

Proven Effectiveness

Tests on the MATH and DepthQA datasets illustrate the framework in practice. GPT-4o, for example, improved its problem-solving accuracy from 72% to 84% through iterative feedback. Similarly, the DepthQA evaluations showed how follow-up questions helped uncover knowledge gaps and improve responses. GPT-3.5’s adaptability reportedly improved by 25% after interaction, showing that the model can refine its answers based on feedback.
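When reading figures like these, it helps to separate absolute gains (percentage points) from relative gains (percent of the starting value). The small helper below, whose name `accuracy_gain` is purely illustrative, applies both views to the 72% to 84% figure above. The text does not say which kind of change the 25% figure for GPT-3.5 refers to, so it is left out.

```python
def accuracy_gain(before: float, after: float):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after - before
    relative = absolute / before * 100
    return absolute, relative


abs_gain, rel_gain = accuracy_gain(72.0, 84.0)
print(f"{abs_gain:.1f} points, {rel_gain:.1f}% relative")  # 12.0 points, 16.7% relative
```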

Addressing Biases in Evaluations

This framework also tackles common biases in LLM evaluations. As interactions progress, verbosity bias decreases, leading to a better correlation between response quality and scores. Additionally, self-enhancement bias is reduced through dynamic interactions, ensuring consistent evaluation results across multiple tests.
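One common proxy for verbosity bias is the correlation between response length and the score a judge assigns: the closer it is to zero, the less the score depends on length alone. The sketch below computes a Pearson correlation by hand. The lengths and scores are made-up toy values chosen only to show the calculation, not results from the research, and the source does not state that this is the metric the authors used.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Toy data (hypothetical): response lengths in tokens and judge scores on a 1-5 scale.
lengths = [120, 340, 95, 410, 220]
scores_first_turn = [2, 4, 2, 5, 3]   # scores track length closely
scores_later_turn = [4, 3, 3, 4, 4]   # scores largely independent of length

r_first = pearson(lengths, scores_first_turn)
r_later = pearson(lengths, scores_later_turn)
print(f"first turn r = {r_first:.2f}, later turn r = {r_later:.2f}")
```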

Combating Data Contamination

LLM-AS-AN-INTERVIEWER effectively addresses data contamination, a significant concern in LLM training and evaluation. By dynamically changing benchmark questions and introducing new follow-ups, the framework helps distinguish between a model’s true capabilities and the effects of contaminated training data.
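The idea of changing benchmark questions can be illustrated with a deliberately simple stand-in: regenerating the numbers in a templated problem so that a memorized answer no longer helps. The text above does not describe how the framework itself modifies questions, so the template and the `make_variant` helper below are assumptions made for illustration only.

```python
import random


def make_variant(rng: random.Random):
    """Build a fresh arithmetic word problem and its ground-truth answer."""
    price = rng.randint(2, 20)
    count = rng.randint(3, 12)
    question = (f"A notebook costs {price} dollars. "
                f"How much do {count} notebooks cost?")
    return question, price * count


rng = random.Random(0)  # fixed seed so the generated set is reproducible
variants = [make_variant(rng) for _ in range(3)]
for question, expected in variants:
    print(question, "->", expected)
```

If a model scores well on the original benchmark item but poorly on variants like these, that gap is a hint that the original score may reflect memorized training data rather than genuine capability.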

A New Standard for LLM Evaluation

In summary, LLM-AS-AN-INTERVIEWER marks a significant advance in evaluating large language models. By simulating human-like interactions and adapting to model responses, it provides a more accurate picture of their capabilities. Its iterative approach highlights areas for improvement and shows how well models adapt, which matters for real-world applications. With its interview reports, the framework is a strong candidate for a new standard in LLM evaluation.
