Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are increasingly applied to tasks such as math, programming, and autonomous agents. These tasks demand stronger reasoning at test time, that is, during inference. Current methods either generate intermediate reasoning steps or rely on sampling techniques, but their effectiveness on complex reasoning remains limited.

Challenges in Current Approaches

Efforts to improve reasoning in LLMs often rely on imitation learning, in which a model learns to reproduce demonstrated reasoning steps. Pretraining and fine-tuning help, but models trained this way still struggle with complex reasoning tasks. Techniques such as generating question-answer pairs improve accuracy but depend on external supervision. Simply scaling models with more data doesn’t always lead to better reasoning abilities.

Introducing the T1 Method

Researchers from Tsinghua University and Zhipu AI have developed the T1 method to enhance reinforcement learning (RL) in LLMs. This method broadens exploration and improves inference scaling.

How T1 Works

T1 first trains the model on chain-of-thought data that includes trial and error. This stage encourages diverse reasoning by generating multiple responses and analyzing their errors. Reinforcement learning is applied only afterward. Key features include:

- Oversampling: Increases response diversity.

- Dynamic Reference Model: Updates the reference model continuously during training, so the policy is not held to a fixed, outdated baseline.

- Penalties for Low-Quality Responses: Discourages redundant or overly long answers.
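
The three features above can be illustrated with a minimal Python sketch. It is not the authors' implementation: every name here (`Sample`, `shaped_reward`, `oversample`, `update_reference`, `toy_generate`) is hypothetical, the length and repetition thresholds are made-up values, and the exponential moving average used to refresh the reference weights is an assumption chosen only to show what a continuously updated reference could look like.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    text: str
    correct: bool


def shaped_reward(sample: Sample, max_words: int = 50, penalty: float = 0.5) -> float:
    """Correctness reward minus illustrative penalties (thresholds are assumptions)."""
    words = sample.text.split()
    reward = 1.0 if sample.correct else 0.0
    if len(words) > max_words:
        # Overly long answer.
        reward -= penalty
    if words and len(set(words)) / len(words) < 0.5:
        # Mostly repeated words, treated here as a redundant answer.
        reward -= penalty
    return reward


def oversample(generate: Callable[[str, random.Random], Sample],
               prompt: str, k: int, rng: random.Random) -> List[Sample]:
    """Draw k candidate responses for a single prompt."""
    return [generate(prompt, rng) for _ in range(k)]


def update_reference(ref: List[float], policy: List[float], alpha: float = 0.9) -> List[float]:
    """Move reference weights toward the policy weights (EMA, an assumed update rule)."""
    return [alpha * r + (1 - alpha) * p for r, p in zip(ref, policy)]


def toy_generate(prompt: str, rng: random.Random) -> Sample:
    """Stand-in for an LLM: picks one of three canned answers at random."""
    candidates = [
        Sample("the answer is 4", True),
        Sample("the answer is 5", False),
        Sample("wait wait wait wait wait wait 4", True),
    ]
    return rng.choice(candidates)


if __name__ == "__main__":
    rng = random.Random(0)
    for s in oversample(toy_generate, "What is 2 + 2?", k=4, rng=rng):
        print(repr(s.text), shaped_reward(s))
```

In this toy setup, a correct but repetitive answer scores lower than a correct and concise one, which is the kind of pressure the penalties are meant to apply. A real RL loop would feed these shaped rewards into a policy-gradient update, which is omitted here.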

Results and Performance

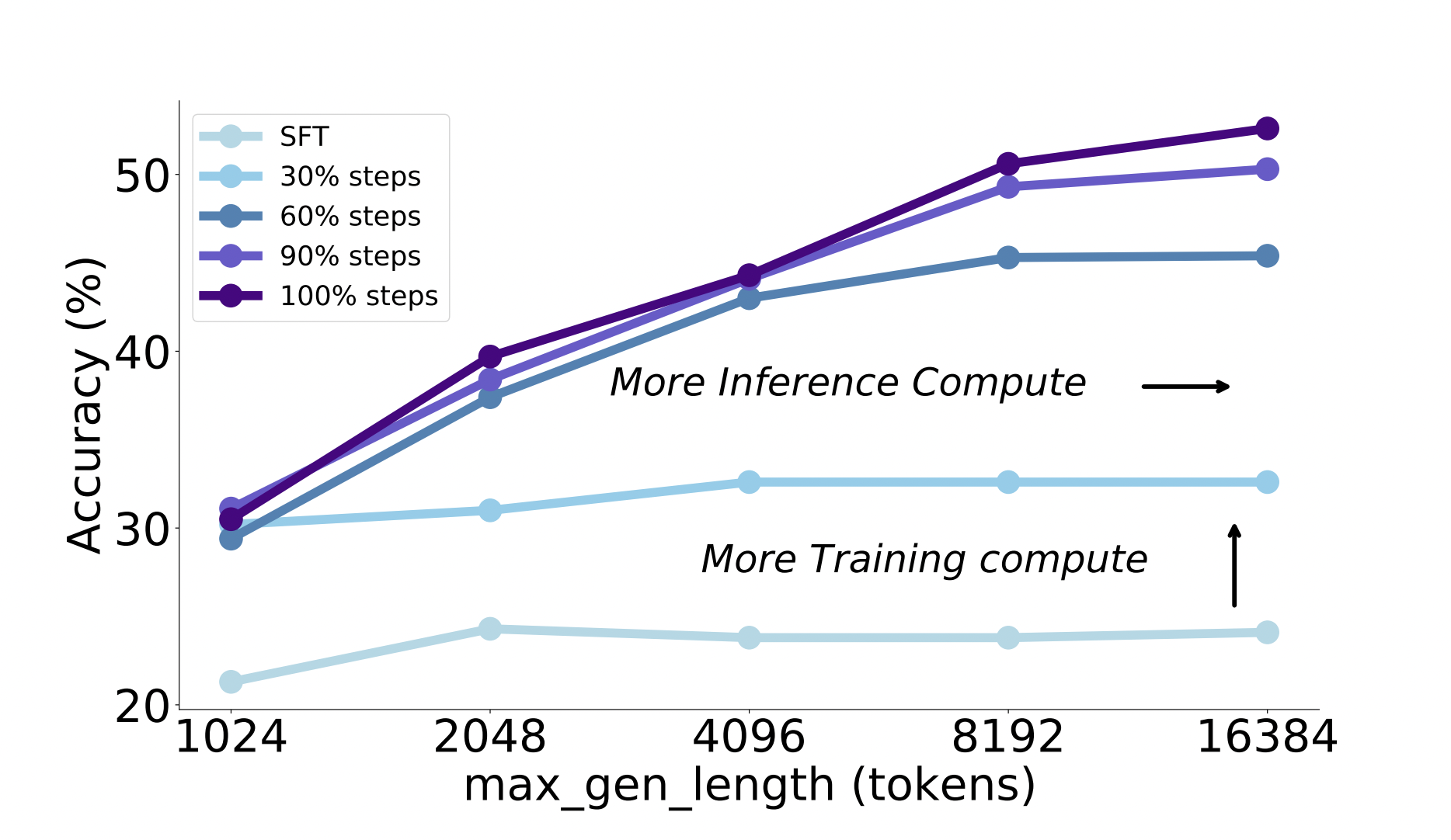

The T1 method was tested with models like GLM-4-9B and Qwen2.5-14B/32B, focusing on math reasoning. It showed significant improvements, with Qwen2.5-32B achieving a 10-20% boost over previous versions. Key findings include:

- Increased sampling improved exploration and generalization.

- A well-chosen sampling temperature stabilized training.

- Penalties enhanced response length control and consistency.
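
To make the role of sampling temperature concrete, the sketch below shows how temperature reshapes a probability distribution over candidate tokens. It is a generic illustration of temperature-scaled softmax, not code from T1. The logits are made-up values and the function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; a higher temperature flattens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; larger values mean more diverse samples."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def sample_index(probs, rng):
    """Draw one index according to the given probabilities."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    rng = random.Random(0)
    for t in (0.5, 1.0, 1.5):
        probs = softmax_with_temperature(logits, t)
        draws = [sample_index(probs, rng) for _ in range(10)]
        print(t, [round(p, 3) for p in probs], round(entropy(probs), 3), draws)
```

A low temperature concentrates probability on the top-scoring token, so sampled responses look alike. A high temperature spreads probability out and increases diversity, at the cost of more low-quality samples. This trade-off is why the temperature setting matters for exploration and for training stability.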

Conclusion

The T1 method successfully enhances LLMs through improved reinforcement learning, exploration, and stability. It demonstrates strong performance on challenging benchmarks and offers a framework for advancing reasoning capabilities in AI.

