The Importance of Quality Data in AI Development

Key Challenges

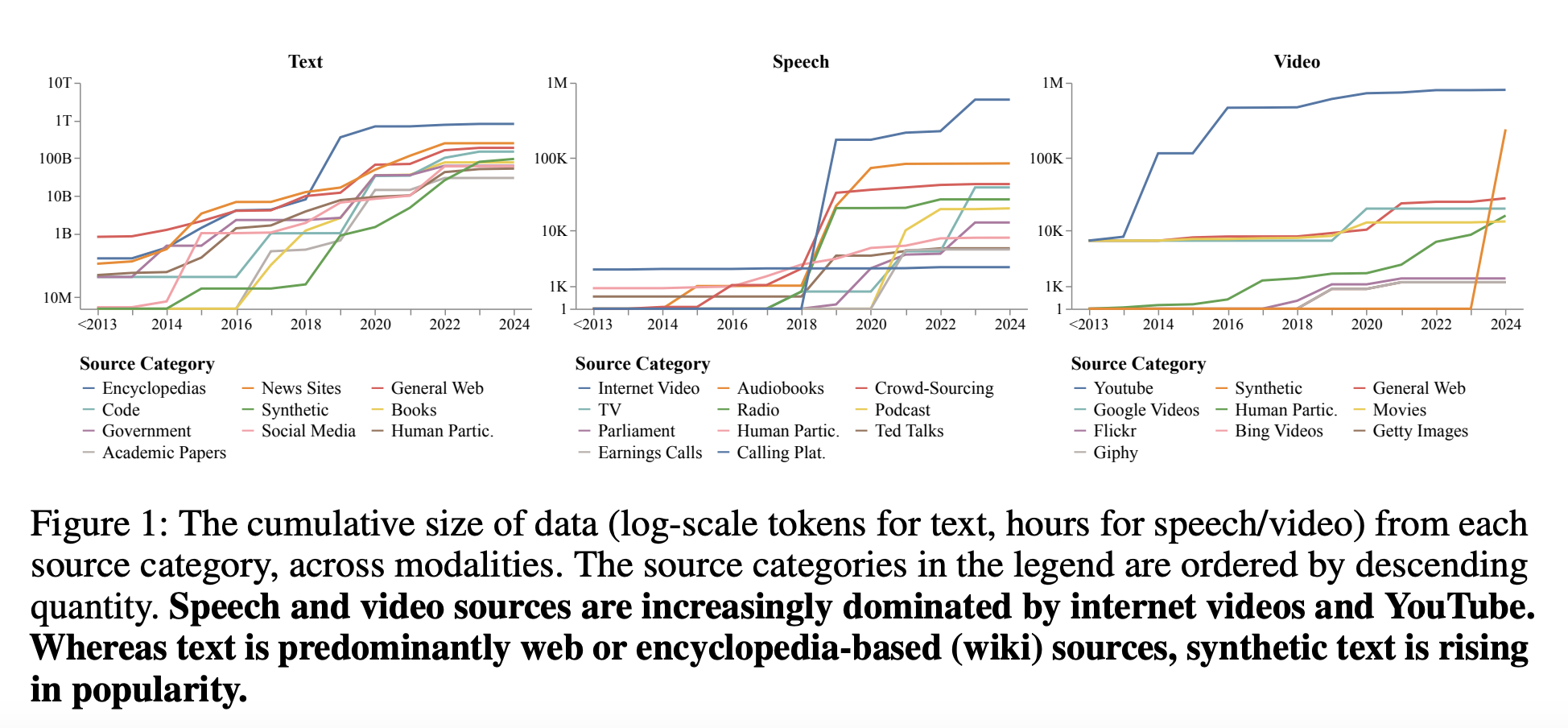

Advances in artificial intelligence (AI) depend on high-quality training data. Multimodal models, which process text, speech, and video, require diverse datasets. Yet the origins and attributes of many of these datasets are poorly documented, which creates ethical and legal challenges. Understanding these gaps is crucial for building responsible AI technologies.

Data Representation Issues

AI development faces persistent problems with dataset representation and traceability, and these problems hinder the creation of unbiased technologies. Many datasets draw on a handful of sources, such as YouTube and Wikipedia, which leaves many languages and regions underrepresented. Unclear licensing adds legal uncertainty: over 80% of the source content in widely used datasets carries non-commercial restrictions, and those restrictions are often not reflected in the datasets' own licenses.
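One way to see how such a licensing gap arises is to trace a dataset's derivation chain. The Python sketch below is a minimal illustration, not the Data Provenance Initiative's method. It assumes a hypothetical three-tier license scale (`PERMISSIVE`, `NON_COMMERCIAL`, `UNDOCUMENTED`) and a hypothetical `Dataset` record, and it treats a dataset's effective restriction as the most restrictive term found anywhere upstream. Ranking undocumented terms as the most restrictive tier is a conservative assumption, not a legal rule.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import IntEnum


class Use(IntEnum):
    # Hypothetical, simplified license tiers: a higher value means more restrictive.
    PERMISSIVE = 0
    NON_COMMERCIAL = 1
    UNDOCUMENTED = 2  # assumption: unknown terms are treated as the most restrictive


@dataclass
class Dataset:
    name: str
    license: Use                      # the license the dataset itself declares
    sources: list[Dataset] = field(default_factory=list)  # upstream datasets it derives from


def effective_restriction(ds: Dataset) -> Use:
    """Return the most restrictive tier in the dataset's derivation chain (assumes no cycles)."""
    return max([ds.license] + [effective_restriction(src) for src in ds.sources])


# Hypothetical chain: a permissively labelled mixture built on restricted video content.
videos = Dataset("video-platform-clips", Use.NON_COMMERCIAL)
captions = Dataset("caption-set", Use.PERMISSIVE, [videos])
mixture = Dataset("training-mix", Use.PERMISSIVE, [captions])

print(mixture.license.name)                 # PERMISSIVE
print(effective_restriction(mixture).name)  # NON_COMMERCIAL
```

The mixture advertises a permissive license while inheriting a non-commercial restriction from its video source, which is the kind of mismatch the audit describes. Real license compatibility is more nuanced than a single ordered scale, so this should be read as a way to surface candidates for review rather than as a legal determination.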

Need for Comprehensive Solutions

Efforts to improve data quality often target narrow issues, such as removing harmful content. A broader framework is needed to evaluate datasets across modalities, including speech and video. Current platforms lack mechanisms for recording accurate metadata and keeping documentation consistent, which points to the need for a systematic audit of multimodal datasets.
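A systematic audit starts by checking whether each dataset's documentation records the basics. The sketch below assumes a hypothetical minimum schema (the field names in `REQUIRED_FIELDS` are illustrative, not a published standard) and flags dataset cards whose required entries are missing or empty. The two cards are made-up examples.

```python
from __future__ import annotations

# Hypothetical minimum documentation schema; field names are illustrative only.
REQUIRED_FIELDS = ("license", "sources", "languages", "creator_country", "modality")


def missing_fields(record: dict) -> list[str]:
    """Return the required fields that are absent or empty in one dataset card."""
    return [name for name in REQUIRED_FIELDS if not record.get(name)]


def audit(records: list[dict]) -> dict[str, list[str]]:
    """Map each incomplete dataset's name to its missing fields; complete cards are omitted."""
    report: dict[str, list[str]] = {}
    for rec in records:
        gaps = missing_fields(rec)
        if gaps:
            report[rec.get("name", "<unnamed>")] = gaps
    return report


# Made-up dataset cards for illustration.
cards = [
    {"name": "speech-a", "license": "CC-BY-4.0", "sources": ["podcasts"],
     "languages": ["en"], "creator_country": "US", "modality": "speech"},
    {"name": "video-b", "license": "", "sources": ["video platform"],
     "languages": [], "creator_country": "DE", "modality": "video"},
]

report = audit(cards)
print(report)  # {'video-b': ['license', 'languages']}
```

Treating empty values as missing is a deliberate choice: an empty license string documents no more than an absent one.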

Research Findings

The Data Provenance Initiative conducted a large-scale audit of nearly 4,000 public datasets released between 1990 and 2024. It found that multimodal training data now comes overwhelmingly from web-crawled, synthetic, and social media sources, with YouTube a major contributor, and that these sources have eclipsed all others since 2019. The audit also found significant geographical imbalances, with North American and European organizations dominating dataset creation.

Key Insights for Developers and Policymakers

- Over 70% of speech and video datasets come from platforms like YouTube.

- Fewer than 33% of datasets carry restrictive licenses, yet over 80% of the source content in widely used datasets carries non-commercial restrictions.

- North American and European organizations create most datasets, with minimal contributions from Africa and South America.

- Synthetic datasets are on the rise, driven by models like GPT-4.

- There is a pressing need for more transparent and equitable practices in dataset curation.

Conclusion

This audit highlights the reliance on web-crawled and synthetic data, persistent inequalities in representation, and complex licensing issues. By addressing these challenges, we can create more transparent and responsible AI systems. This research serves as a call to action for all stakeholders to prioritize transparency and equity in the AI data ecosystem.
