Multimodal Artificial Intelligence: Enhancing Efficiency and Performance

Challenges in Multimodal AI

Multimodal AI faces two main challenges: keeping models computationally efficient and integrating diverse data types, such as text and images, effectively within a single model.

Practical Solutions

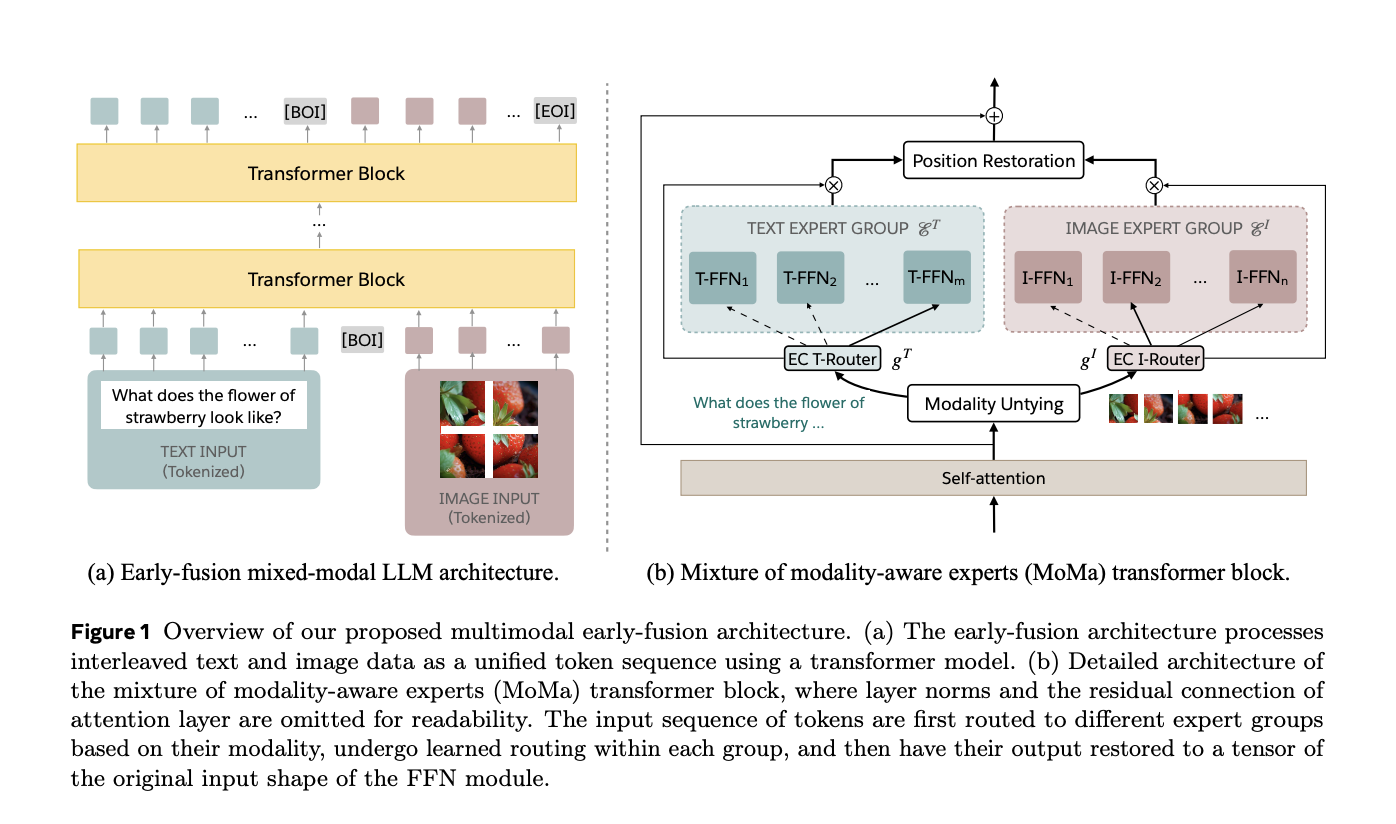

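MoMa is a modality-aware mixture-of-experts (MoE) architecture for pre-training mixed-modal, early-fusion language models. It divides its expert modules into modality-specific groups, so that each group processes only tokens of its own modality, with learned routing inside each group. This design improves both pre-training efficiency and model performance.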
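To make the idea of "modality-aware" routing more concrete, the following is a minimal sketch in plain Python, not MoMa's actual implementation. It assumes experts are split into a text group and an image group, and that each token is sent to a single expert within its own modality's group. The class `ModalityAwareMoE`, the helper names, the toy "experts" (simple scaling functions), and the top-1 routing rule are all hypothetical choices made for illustration; the real model's routing scheme and expert networks may differ.

```python
import random

TEXT, IMAGE = "text", "image"


def make_expert(scale):
    """Hypothetical stand-in for a feed-forward expert: scales each feature."""
    return lambda vec: [scale * x for x in vec]


def make_router(num_experts, dim, rng):
    """One weight vector per expert; a token's score is a dot product."""
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]


def route(token_vec, router_weights):
    """Return the index of the highest-scoring expert (top-1, an assumption)."""
    scores = [sum(w * x for w, x in zip(ws, token_vec)) for ws in router_weights]
    return max(range(len(scores)), key=scores.__getitem__)


class ModalityAwareMoE:
    """Toy layer: each modality has its own expert group and its own router."""

    def __init__(self, dim, experts_per_modality, seed=0):
        rng = random.Random(seed)
        self.groups = {}
        for modality in (TEXT, IMAGE):
            experts = [make_expert(rng.uniform(0.5, 1.5))
                       for _ in range(experts_per_modality)]
            router = make_router(experts_per_modality, dim, rng)
            self.groups[modality] = (experts, router)

    def forward(self, tokens):
        """tokens: list of (modality, vector) pairs in arbitrary order.

        Returns a list of (modality, expert_index, output_vector).
        """
        outputs = []
        for modality, vec in tokens:
            # A token only ever sees the experts of its own modality group.
            experts, router = self.groups[modality]
            idx = route(vec, router)
            outputs.append((modality, idx, experts[idx](vec)))
        return outputs


layer = ModalityAwareMoE(dim=4, experts_per_modality=2)
sequence = [(TEXT, [0.1, 0.2, 0.3, 0.4]), (IMAGE, [0.9, -0.5, 0.0, 0.3])]
for modality, expert_index, _ in layer.forward(sequence):
    print(modality, "-> expert", expert_index)
```

The point of the sketch is the lookup `self.groups[modality]`: text and image tokens can be interleaved in one sequence (early fusion), yet each is handled by parameters specialized for its modality.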

Value and Potential

MoMa’s innovative architecture represents a significant advancement in multimodal AI, addressing critical computational efficiency issues and paving the way for resource-effective AI systems.

Performance and Efficiency

MoMa achieved substantial reductions in floating-point operations (FLOPs) during pre-training, highlighting its potential to make mixed-modal, early-fusion language model pre-training more efficient.

Future Implications

MoMa’s approach lays the groundwork for the next generation of multimodal AI models, improving AI’s ability to understand and interact with a world that is itself complex and multimodal.
