Introducing OLMES: Standardizing Language Model Evaluations

Language model evaluation is crucial in AI research, helping to assess model performance and guide future development. However, the lack of a standardized evaluation framework leads to inconsistent results and hinders fair comparisons.

Practical Solutions and Value

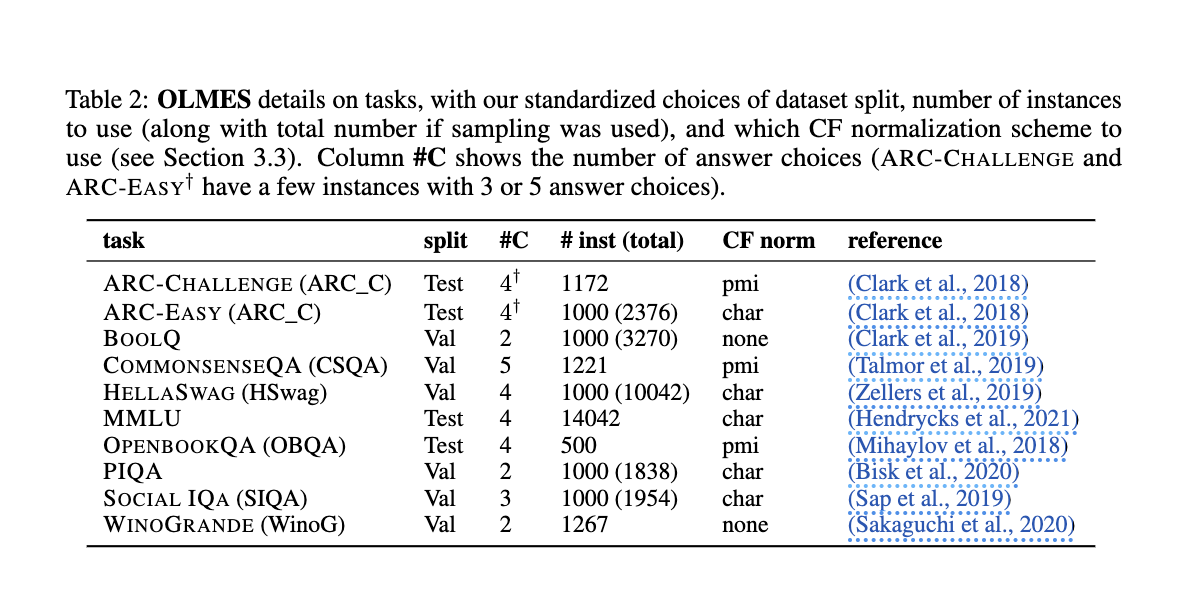

OLMES (Open Language Model Evaluation Standard) addresses these issues by providing comprehensive guidelines for reproducible evaluations. It standardizes the evaluation process, removes ambiguities, and supports meaningful model comparisons.

Benefits of OLMES

OLMES offers detailed guidelines for dataset processing, prompt formatting, in-context examples, probability normalization, and task formulation. By adopting OLMES, the AI community can achieve greater transparency, reproducibility, and fairness in evaluating language models.
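
To make probability normalization concrete, here is a minimal Python sketch of four common ways to score answer choices in a multiple-choice task: raw log-probability, per-token, per-character, and a PMI-style score that subtracts the answer's log-probability under a neutral context. It is an illustration under stated assumptions, not the OLMES reference implementation. The `Choice` class, the function names, and every number in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Choice:
    """One candidate answer with illustrative (made-up) model scores."""
    text: str
    logprob: float         # summed token log-probs of the answer given the question
    num_tokens: int        # number of tokens in the answer
    uncond_logprob: float  # log-prob of the answer given a neutral context


def normalized_score(choice: Choice, method: str) -> float:
    """Score one choice under the given normalization scheme."""
    if method == "none":
        return choice.logprob
    if method == "token":
        return choice.logprob / choice.num_tokens
    if method == "character":
        return choice.logprob / len(choice.text)
    if method == "pmi":
        return choice.logprob - choice.uncond_logprob
    raise ValueError(f"unknown normalization method: {method}")


def predict(choices: List[Choice], method: str) -> int:
    """Return the index of the highest-scoring choice."""
    return max(range(len(choices)), key=lambda i: normalized_score(choices[i], method))


# Hypothetical scores for the question "What is the capital of France?"
choices = [
    Choice("Paris", logprob=-2.0, num_tokens=1, uncond_logprob=-3.0),
    Choice("The capital city of France", logprob=-6.0, num_tokens=5, uncond_logprob=-12.0),
]

for method in ("none", "token", "character", "pmi"):
    print(method, predict(choices, method))
```

With these made-up numbers, the raw score favors the short answer while the normalized scores favor the long one. The predicted answer can change with the normalization alone, which is why an evaluation standard has to fix the scheme for each task.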

Validation and Impact

Experiments have shown that OLMES provides more consistent and reproducible results, improving the reliability of performance measurements. Models evaluated using OLMES scored higher and showed smaller discrepancies than the varying figures reported for them across different references.

Advancing AI Research and Development

With a shared standard such as OLMES in place, the AI community can drive further progress in AI research and development, fostering innovation and collaboration among researchers and developers.
