The Impact of Questionable Research Practices on the Evaluation of Machine Learning (ML) Models

Practical Solutions and Value

Evaluating model performance is crucial in the rapidly advancing fields of Artificial Intelligence and Machine Learning, especially since the arrival of Large Language Models (LLMs). Rigorous evaluation shows what these models can actually do and is the foundation for building dependable systems on top of them.

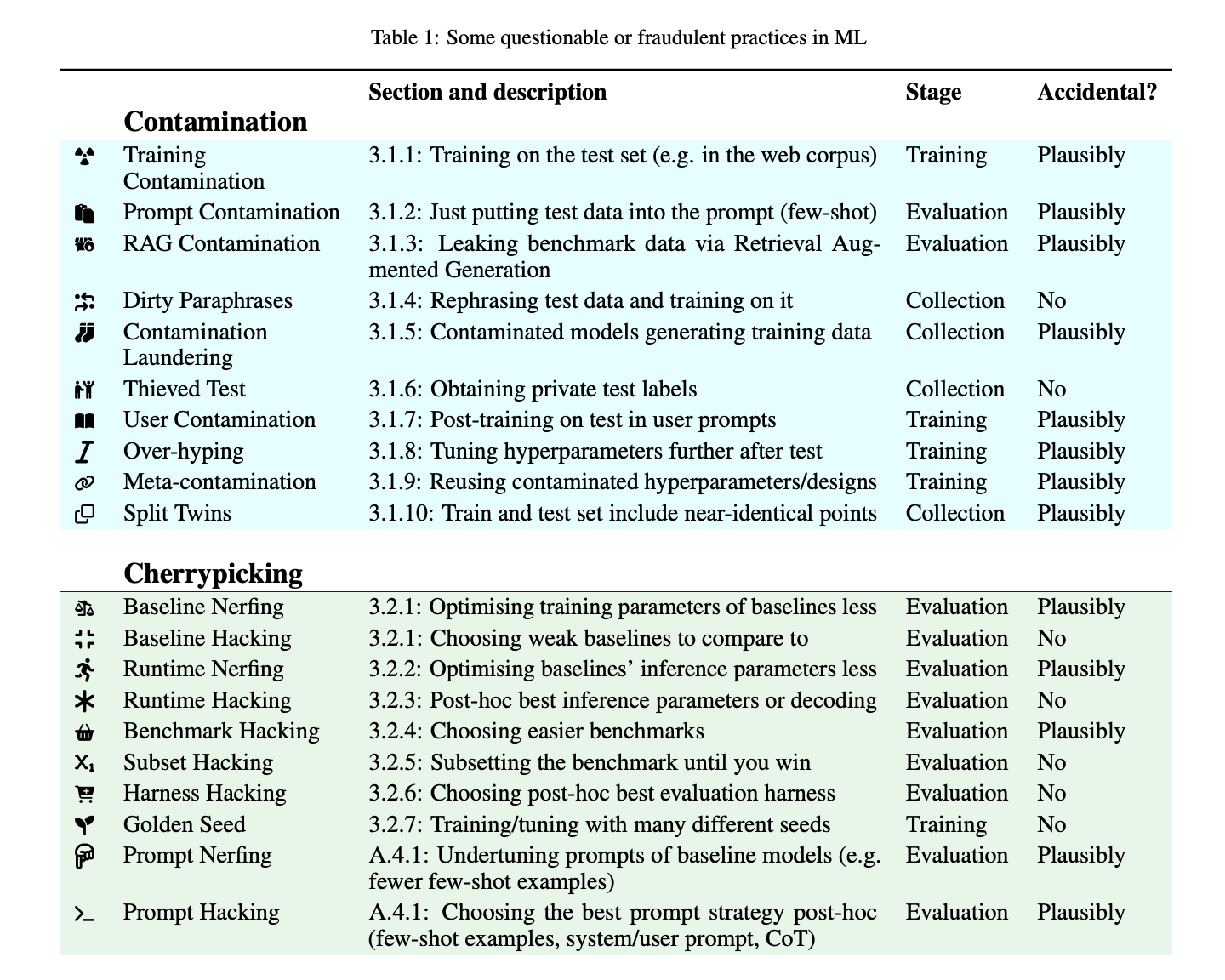

However, Questionable Research Practices (QRPs) frequently undermine the integrity of these assessments. They can inflate published results and mislead both the scientific community and the general public about how well ML models actually perform.

Practical Solutions

Contamination: Keep test data out of training, tuning, and prompt design. Take particular care with few-shot evaluation and retrieval-augmented generation (RAG), where test items can leak into the prompt examples or the retrieved context.
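One common way to screen for contamination is an n-gram overlap check: flag any test item that shares a long word sequence with the training corpus, the few-shot exemplars, or the retrieval corpus. The sketch below assumes simple regex word tokenization and an 8-word window; both are illustrative choices, and `find_contaminated` is a hypothetical helper, not part of any specific library. Exact overlap will not catch paraphrased leaks.

```python
import re
from typing import Iterable


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercased word n-grams in `text` (empty if too short)."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def find_contaminated(
    test_items: Iterable[str],
    reference_docs: Iterable[str],
    n: int = 8,  # assumed window size; tune for your data
) -> list[int]:
    """Return indices of test items sharing at least one n-gram with any reference doc.

    `reference_docs` can be training data, few-shot exemplars, or a RAG corpus.
    """
    reference: set[tuple[str, ...]] = set()
    for doc in reference_docs:
        reference |= ngrams(doc, n)
    return [i for i, item in enumerate(test_items) if ngrams(item, n) & reference]


train = ["the quick brown fox jumps over the lazy dog near the river bank"]
test = [
    "The quick brown fox jumps over the lazy dog.",
    "A completely different sentence about model evaluation here today",
]
print(find_contaminated(test, train))
```

Flagged items should be removed from the test set or reported separately, rather than silently kept in the headline score.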

Cherry-picking: Do not adjust experimental conditions until they produce the desired result. Evaluate models across a range of settings and report all of them, so that one favorable configuration does not bias the conclusions.
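A simple safeguard against picking the luckiest run is to fix a list of random seeds in advance and report the spread across all of them, not just the best score. In this minimal sketch, `evaluate` is a hypothetical callable standing in for your own train-and-evaluate run, and `toy_evaluate` returns synthetic numbers purely for illustration; neither reflects real results.

```python
import random
import statistics
from typing import Callable, NamedTuple


class SeedSummary(NamedTuple):
    mean: float
    stdev: float
    worst: float
    best: float
    n_seeds: int


def summarize_over_seeds(evaluate: Callable[[int], float], seeds: list[int]) -> SeedSummary:
    """Run `evaluate` once per pre-registered seed and summarize every score."""
    scores = [evaluate(seed) for seed in seeds]
    return SeedSummary(
        mean=statistics.mean(scores),
        # Sample standard deviation needs at least two runs.
        stdev=statistics.stdev(scores) if len(scores) > 1 else 0.0,
        worst=min(scores),
        best=max(scores),
        n_seeds=len(scores),
    )


def toy_evaluate(seed: int) -> float:
    """Hypothetical stand-in: a noisy score around 0.80, deterministic per seed."""
    rng = random.Random(seed)
    return 0.80 + rng.uniform(-0.05, 0.05)


print(summarize_over_seeds(toy_evaluate, seeds=list(range(10))))
```

Reporting the worst and best runs next to the mean makes it obvious when a headline number depends on a single favorable seed.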

Misreporting: Base general claims on broad, representative benchmarks rather than a handful of tasks, and report all results instead of presenting only the statistically significant ones.
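When a model is compared with a baseline on many benchmarks, some comparisons will look significant by chance. One standard remedy, shown here as an illustrative choice rather than a method the article prescribes, is to report every benchmark and adjust the p-values with the Holm-Bonferroni step-down procedure. The benchmark names and p-values below are made up for the example.

```python
from typing import NamedTuple


class BenchmarkResult(NamedTuple):
    name: str
    p_value: float      # raw p-value for this benchmark's comparison
    adjusted_p: float   # Holm-adjusted p-value
    significant: bool   # adjusted_p < alpha


def holm_adjust(p_values: list[float]) -> list[float]:
    """Holm-Bonferroni adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (0-based rank) is multiplied by (m - rank), capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


def report_all(raw: dict[str, float], alpha: float = 0.05) -> list[BenchmarkResult]:
    """Return a row for every benchmark, significant or not."""
    names = list(raw)
    adjusted = holm_adjust([raw[name] for name in names])
    return [BenchmarkResult(n, raw[n], a, a < alpha) for n, a in zip(names, adjusted)]


for row in report_all({"bench_a": 0.01, "bench_b": 0.04, "bench_c": 0.03}):
    print(row)
```

The key point is that the output table contains every benchmark that was run, so readers can see the non-significant comparisons too.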

Irreproducible Research Practices (IRPs): Share details of the training data openly so that others can validate and replicate the findings.
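One lightweight way to make training-data information shareable is a checksum manifest: a list of every data file with its size and SHA-256 hash, which lets others confirm they are working with exactly the same data. This is a minimal sketch under the assumption that the dataset lives in a local directory; `build_manifest` and the manifest layout are hypothetical, not a standard format.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: str) -> dict[str, object]:
    """List every file under `data_dir` with its relative path, size, and SHA-256."""
    root = Path(data_dir)
    files = sorted(p for p in root.rglob("*") if p.is_file())
    return {
        "root": root.name,
        "files": [
            {
                "path": p.relative_to(root).as_posix(),
                "bytes": p.stat().st_size,
                "sha256": file_sha256(p),
            }
            for p in files
        ],
    }


def write_manifest(data_dir: str, out_path: str) -> None:
    """Save the manifest as JSON so it can be published alongside the paper."""
    Path(out_path).write_text(json.dumps(build_manifest(data_dir), indent=2))
```

A manifest does not replace a datasheet describing how the data was collected and filtered, but it gives replicators a precise target to check against.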

Value

Setting and upholding strict standards for research practice matters more as ML models are deployed more widely and have a greater impact on society. The full potential of ML models can only be realized through openness, accountability, and a commitment to ethical research.
