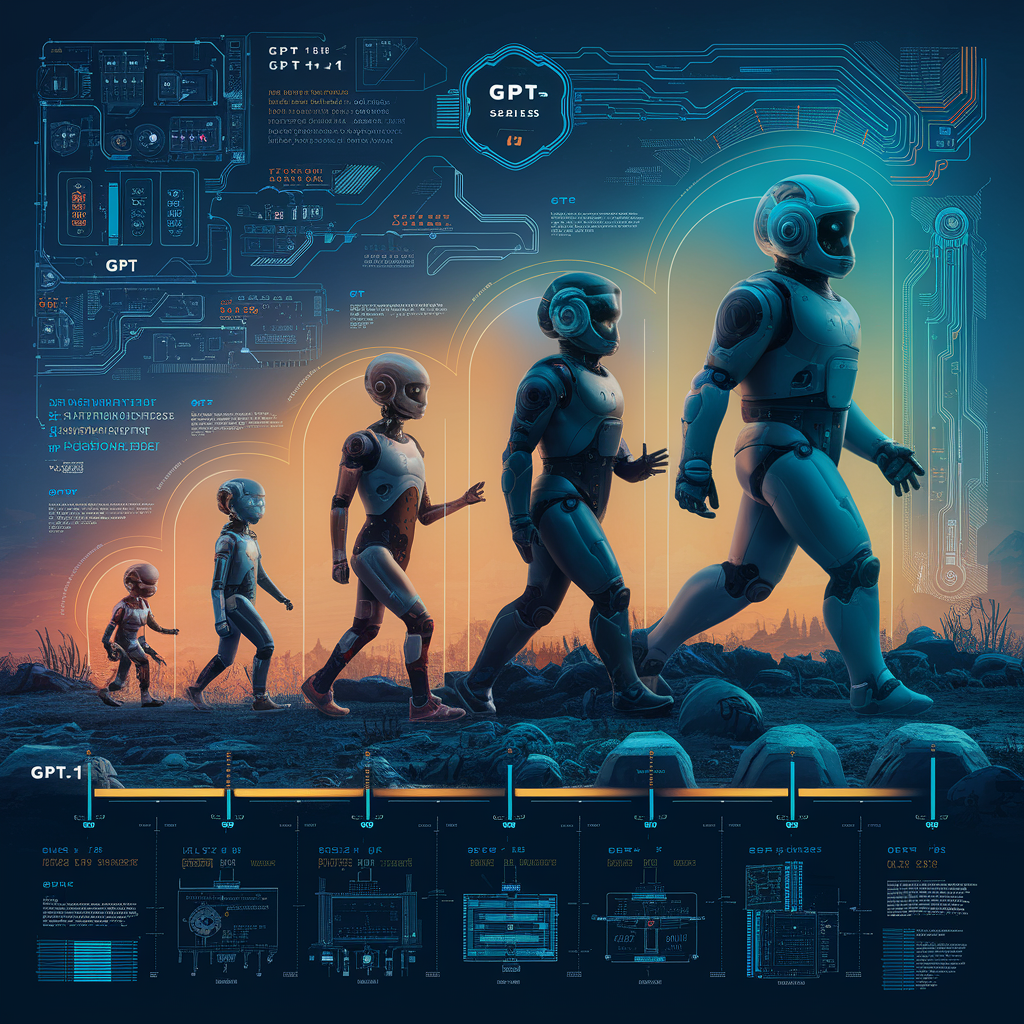

The Evolution of the GPT Series: A Deep Dive into Technical Insights and Performance Metrics

GPT-1: The Beginning

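GPT-1 (2018) launched the series and showed the power of transfer learning in NLP: a Transformer decoder with about 117 million parameters was first pre-trained on unlabeled text (the BooksCorpus dataset) and then fine-tuned on specific tasks using labeled data.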
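The two training stages can be summarized as objectives, following the formulation in the GPT-1 paper (Radford et al., 2018). Here $\mathcal{U} = \{u_1, \dots, u_n\}$ is an unlabeled token corpus, $k$ the context window, $\Theta$ the network parameters, and $\mathcal{C}$ a labeled dataset of input sequences $x^1, \dots, x^m$ with labels $y$. All three are maximized, and $\lambda$ weights language modeling as an auxiliary objective during fine-tuning. Treat this as an orientation sketch; the paper gives the full details.

```latex
\begin{aligned}
L_1(\mathcal{U}) &= \sum_i \log P\left(u_i \mid u_{i-k}, \dots, u_{i-1}; \Theta\right) && \text{(unsupervised pre-training)} \\
L_2(\mathcal{C}) &= \sum_{(x, y)} \log P\left(y \mid x^1, \dots, x^m\right) && \text{(supervised fine-tuning)} \\
L_3(\mathcal{C}) &= L_2(\mathcal{C}) + \lambda \, L_1(\mathcal{C}) && \text{(fine-tuning with auxiliary LM loss)}
\end{aligned}
```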

GPT-2: Scaling Up

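GPT-2 (2019) demonstrated the benefits of larger models and datasets: its largest version has 1.5 billion parameters and was trained on WebText, a corpus of roughly 8 million web pages. The result was markedly better text generation, coherence, and retention of context over long passages.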
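To make "larger models" concrete, here is a back-of-the-envelope parameter estimate. It assumes a standard GPT-style decoder block and ignores biases, layer norms, and position embeddings. The helper `estimate_gpt_params` is hypothetical and written only for this illustration. The layer counts and widths are the published configurations of the largest GPT-2 model (48 layers, width 1600, reported at 1.5 billion parameters) and GPT-3 (96 layers, width 12288, reported at 175 billion). Both use a 50,257-token BPE vocabulary.

```python
def estimate_gpt_params(n_layer: int, d_model: int, vocab_size: int = 0) -> int:
    """Rough parameter count for a GPT-style decoder-only Transformer.

    Per layer: attention has four d_model x d_model matrices (Q, K, V, output)
    = 4 * d_model**2, and the MLP has two d_model x (4 * d_model) matrices
    = 8 * d_model**2, for 12 * d_model**2 in total. Biases, layer norms and
    position embeddings are ignored; the token-embedding matrix
    (vocab_size x d_model) is added only when vocab_size is given.
    """
    per_layer = 12 * d_model ** 2
    return n_layer * per_layer + vocab_size * d_model


# Published configurations: (layers, model width, BPE vocabulary size).
gpt2_xl = estimate_gpt_params(48, 1600, 50257)
gpt3 = estimate_gpt_params(96, 12288, 50257)
print(f"GPT-2 (reported 1.5B): ~{gpt2_xl / 1e9:.2f}B")  # ~1.55B
print(f"GPT-3 (reported 175B): ~{gpt3 / 1e9:.1f}B")     # ~174.6B
```

The estimates land close to the reported sizes, which is why the approximation of roughly 12 · n_layer · d_model² non-embedding parameters is common in the scaling-law literature: model size grows linearly with depth but quadratically with width.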

GPT-3: The Game Changer

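GPT-3 (2020) scaled to 175 billion parameters and produced strikingly human-like text across a wide range of language tasks. It performed strongly in zero-shot, one-shot, and few-shot settings, where the task is specified only by an instruction plus zero, one, or a few examples in the prompt, without any further training.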
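To show how these settings differ in practice, the sketch below only assembles prompt text and calls no model. `build_prompt` is a hypothetical helper, and the `=>` layout mirrors the English-to-French illustration in the GPT-3 paper. The number of demonstrations decides the setting. The model's weights are never updated; the examples steer it only through its input context.

```python
def build_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a zero-, one-, or few-shot prompt.

    len(examples) == 0 -> zero-shot, == 1 -> one-shot, > 1 -> few-shot.
    The model is expected to continue the text after the final "=>".
    """
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")  # one solved demonstration
    lines.append(f"{query} =>")                # the unsolved query
    return "\n".join(lines)


task = "Translate English to French:"
zero_shot = build_prompt(task, [], "cheese")
few_shot = build_prompt(
    task,
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(few_shot)
```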

GPT-3.5: Bridging the Gap

GPT-3.5 improved on GPT-3’s contextual understanding, coherence, and efficiency, addressing some of its limitations and performing better in conversational AI and complex text generation. The original ChatGPT was built on a GPT-3.5-series model.

GPT-4: The Frontier

GPT-4 (2023) pushed natural language understanding and generation further, surpassing its predecessors in coherence, relevance, and contextual accuracy. It can also accept images, not just text, as input.

GPT-4o: Optimized and Efficient

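GPT-4o (2024; the "o" stands for "omni") maintained GPT-4-level performance on text while being more efficient: it is faster and cheaper to use through OpenAI's API and responds with lower latency. It also handles text, audio, and images within a single model.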

Technical Insights

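The Transformer architecture relies on self-attention, which connects every token to every earlier token in the context in a single step and so handles long-range dependencies well. Beyond the architecture, empirical scaling laws, which describe how loss falls predictably as model size, data, and compute grow, together with advances in training efficiency, guided the development of successive GPT models.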
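Here is a minimal sketch of causal self-attention. It assumes a single attention head and omits the learned query/key/value projections, multi-head splitting, and positional encodings that a real GPT block adds. `causal_attention` is a hypothetical plain-Python helper written for readability, not an excerpt from any GPT codebase. The causal mask restricts each token to itself and earlier tokens, which is what makes the model autoregressive. Within that window, every earlier position contributes through one weighted sum, however far back it is.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q: list[list[float]], k: list[list[float]],
                     v: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention with a causal mask.

    q and k hold one d-dimensional vector per token; v holds one value
    vector per token. Output i is a weighted average of v[0..i] only.
    """
    d = len(q[0])
    d_v = len(v[0])
    outputs = []
    for i, qi in enumerate(q):
        # Dot-product scores against positions 0..i only (the causal mask),
        # scaled by sqrt(d) to keep the softmax from saturating.
        scores = [sum(a * b for a, b in zip(qi, k[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted sum of the visible value vectors.
        outputs.append([sum(w * v[j][c] for j, w in enumerate(weights))
                        for c in range(d_v)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_attention(tokens, tokens, tokens)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```

Because of the mask, appending tokens never changes the outputs for earlier positions, which is what lets a GPT model generate text one token at a time.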

Performance Metrics

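Several standard metrics evaluate the quality and accuracy of model predictions in NLP tasks: perplexity measures how well a language model predicts held-out text (lower is better); accuracy and F1 score, the harmonic mean of precision and recall, score classification and extraction tasks; and BLEU measures n-gram overlap between generated text and reference text, as in machine translation.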
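The core of these metrics is short enough to sketch from scratch. The functions below are illustrative helpers written for this article. They assume three inputs: the model's probability for each actual next token (for perplexity), raw true-positive, false-positive, and false-negative counts (for F1), and pre-tokenized text with a single reference (for BLEU). Accuracy is simply correct predictions divided by total predictions, so it is omitted. This BLEU variant applies no smoothing; for reported results, a standard implementation such as sacreBLEU is the safer choice.

```python
import math
from collections import Counter


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-likelihood of the actual next tokens.
    A uniform guess over V tokens gives a perplexity of V."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: list[str], reference: list[str], max_n: int = 4) -> float:
    """Simplified sentence-level BLEU: one reference, no smoothing.

    Geometric mean of clipped n-gram precisions (n = 1..max_n), multiplied
    by a brevity penalty when the candidate is not longer than the reference.
    """
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision zeroes BLEU
        log_precisions.append(math.log(overlap / total))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat".split()
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # approximately 4
print(f1_score(tp=8, fp=2, fn=2))             # approximately 0.8
print(bleu(reference, reference))             # 1.0 for an exact match
```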

Impact and Applications

The GPT series has had a profound impact on content creation, customer support, education, and research.
