Post-Training Techniques for Language Models

Post-training techniques such as instruction tuning and reinforcement learning are crucial for improving language models. Open-source post-training recipes often lag behind proprietary ones, in part because the training processes and data behind proprietary models are not disclosed. This gap limits progress in open AI research.

Challenges with Open-Source Efforts

Previous projects, such as Tülu 2 and Zephyr-β, aimed to advance open post-training but were limited by their reliance on simpler methods. In contrast, proprietary models like GPT-4o and Claude 3.5 Haiku outperform them by using larger datasets and more refined techniques.

Introduction of Tülu 3

In partnership with the University of Washington, the Allen Institute for AI (AI2) launched Tülu 3, a significant advancement in open-weight post-training. Tülu 3 is built on Llama 3.1 base models and is designed for scalability and high performance.

Key Features of Tülu 3 405B

- Innovative Reinforcement Learning: Tülu 3 405B uses Reinforcement Learning with Verifiable Rewards (RLVR), which grants a reward only when the model's output can be checked against a verifiable outcome, such as the correct answer to a math problem.

- Efficient Resource Usage: Training was scaled across 256 GPUs, with the setup tuned for computational efficiency.

- Structured Approach: The post-training process includes data curation, supervised fine-tuning, preference optimization, and RLVR for specialized skills.
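
To make the idea of a verifiable reward concrete, here is a minimal sketch in Python. It is not the Tülu 3 implementation: the function names, the "last number in the completion" extraction rule, and the reward value of 1.0 are all assumptions chosen for illustration. The point is only that the reward comes from a programmatic check against a known answer rather than from a learned reward model.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the last number that appears in the completion, or None.

    Hypothetical extraction rule: treat the final number as the answer.
    """
    matches = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return matches[-1] if matches else None


def verifiable_reward(completion: str, ground_truth: str, reward: float = 1.0) -> float:
    """Give `reward` if the extracted answer equals the ground truth, else 0.0.

    The reward value is an assumption; only the binary, checkable
    nature of the signal is the point of this sketch.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        return reward if float(answer) == float(ground_truth) else 0.0
    except ValueError:
        return 0.0


# Example: three sampled completions for a prompt whose known answer is 42.
completions = ["12 + 30 = 42", "The answer is 41.", "I am not sure."]
rewards = [verifiable_reward(c, "42") for c in completions]
print(rewards)  # [1.0, 0.0, 0.0]
```

In a full RLVR setup, rewards like these would feed a policy-gradient update during the reinforcement learning stage. That part is omitted here.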

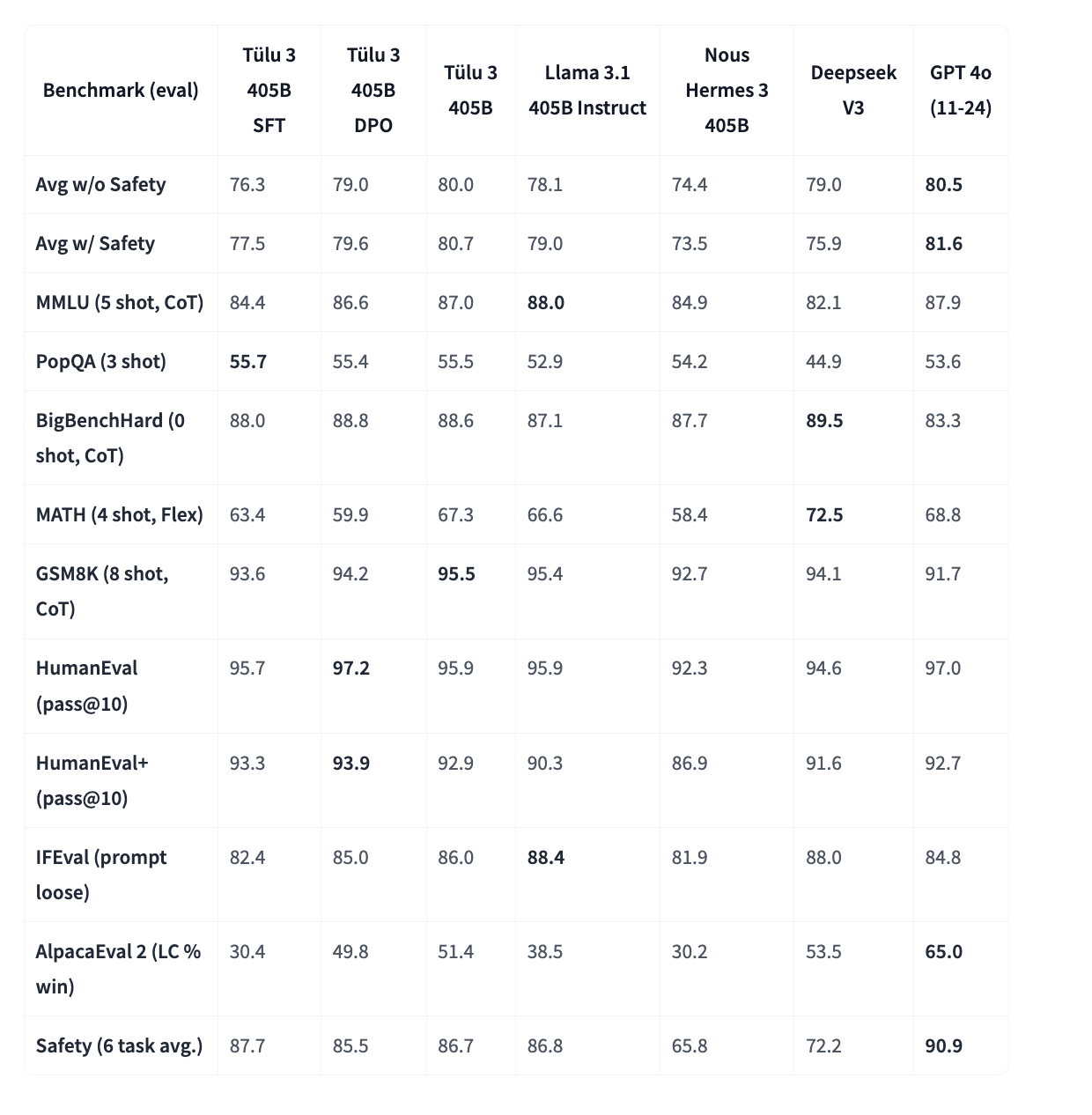

Performance Highlights

Tülu 3 405B outperformed models such as DeepSeek V3 and GPT-4o on several benchmarks, most notably on safety. Training was resource-intensive, but it produced a model that generalizes well across multiple tasks.

Key Takeaways

- Tülu 3 was released in multiple configurations (8B, 70B, and 405B parameters), each post-trained with the same recipe.

- The model benefits most from specialized training data, particularly in mathematics.

- RLVR offers a novel approach to reinforcement learning, elevating performance in structured reasoning tasks.

- Ongoing research is needed to explore new model structures and reward optimization.

Conclusion

Tülu 3 405B represents a significant step for open post-training techniques and performs competitively against leading proprietary models. Its success highlights the potential for open-source advancements in AI, particularly when specialized data is available.
