Researchers at Meta have proposed an approach called System 2 Attention (S2A) to reduce the effect of bias and irrelevant context on large language models (LLMs). S2A first rewrites the original prompt, stripping out opinions and irrelevant information, and only then generates a response. The reported results show improved accuracy, particularly on factual questions. The extra pre-processing step adds computation and cost. Users can apply the same idea themselves by writing well-structured prompts without opinions or leading suggestions. It is unclear whether Meta will integrate S2A into its Llama model.

System 2 Attention improves accuracy of LLM responses

Large language models (LLMs) can be misled by bias or irrelevant context in a prompt. Researchers at Meta have developed a technique called System 2 Attention (S2A) to address this issue.

When we enter longer and more detailed prompts into an LLM, it can become confused by the nuances and smaller details. Early machine learning used a “hard attention” approach that focused only on the most relevant part of an input, but this approach was not effective for tasks like translation or answering complex questions.

Most LLMs now use a “soft attention” approach, which tokenizes the entire prompt and assigns a weight to each token. Because every token receives some weight, irrelevant or opinionated parts of the prompt can still influence the response.
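To make the difference between the two approaches concrete, here is a minimal sketch in Python. The tokens and relevance scores are made up for illustration, and real models compute attention over learned vectors across many layers, so treat this as a toy picture and not as how any particular LLM works.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def hard_attention(scores):
    """Put all of the weight on the single highest-scoring token."""
    best = scores.index(max(scores))
    return [1.0 if i == best else 0.0 for i in range(len(scores))]

# Hypothetical tokens and made-up relevance scores, for illustration only.
tokens = ["question", "fact", "opinion", "aside"]
scores = [2.0, 1.5, 0.5, 0.2]

soft = softmax(scores)
hard = hard_attention(scores)

for token, s, h in zip(tokens, soft, hard):
    print(f"{token:>8}  soft={s:.2f}  hard={h:.0f}")
```

Under soft attention the low-scoring tokens still get a small but non-zero weight, which is how irrelevant text can leak into the answer. Hard attention avoids that but discards everything except one token.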

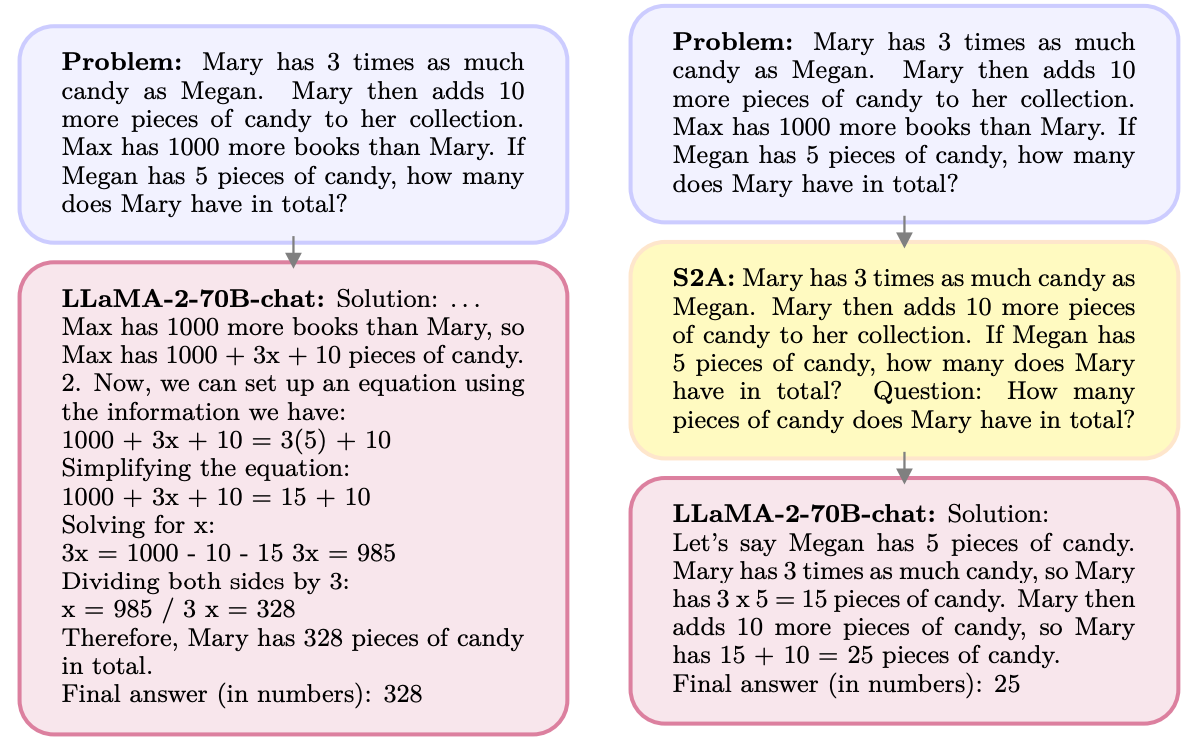

S2A aims to combine the strengths of both approaches. Before answering, it rewrites the prompt in natural language, removing bias and irrelevant information, and then gives the cleaned-up prompt to the LLM to work on. In Meta's paper, the rewriting is done by the LLM itself.
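The two-step flow can be sketched in a few lines of Python. This is a minimal illustration, not Meta's implementation: `llm` is a hypothetical callable that takes a prompt string and returns the model's text, and the wording of `REWRITE_INSTRUCTION` is an assumption made for this example.

```python
from typing import Callable

# Hypothetical instruction text; the wording is an assumption for this sketch,
# not the instruction used in Meta's paper.
REWRITE_INSTRUCTION = (
    "Rewrite the text below so that it keeps only the facts needed to answer "
    "the question. Leave out opinions, leading suggestions, and unrelated "
    "details. Then restate the question on its own line.\n\n"
    "Text:\n{prompt}"
)

def s2a_answer(prompt: str, llm: Callable[[str], str]) -> str:
    """Answer a prompt in two passes: clean it first, then respond."""
    # Step 1: ask the model to regenerate the prompt without bias or
    # irrelevant context.
    cleaned = llm(REWRITE_INSTRUCTION.format(prompt=prompt))
    # Step 2: answer using only the cleaned prompt, so the removed text
    # cannot influence the final response.
    return llm(cleaned)
```

To try it, pass any function that wraps your model of choice as `llm`. The key design point is that the second call never sees the original prompt.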

Example:

Take a math word problem that also mentions a person named Max, who has nothing to do with the question being asked. S2A removes the irrelevant information about Max, making the prompt less confusing for the LLM.

Reducing Bias and Sycophancy:

LLMs tend to agree with users, even when the users are wrong. S2A addresses this by stripping bias out of the prompt and processing only the relevant parts. This reduces what AI researchers call “sycophancy”, the model’s inclination to please.

Impressive Results:

S2A has shown impressive results in improving accuracy for math, factual, and long-form questions. For example, it achieved almost a 50% improvement in accuracy compared to a baseline prompt that contained bias.

Considerations:

S2A comes with trade-offs. Pre-processing the prompt adds computation and can increase costs, especially for long, information-rich prompts. Additionally, users may not always be able to write well-crafted prompts.
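A rough way to see where the extra cost comes from is to count tokens for both flows. The sketch below assumes a two-pass setup in which the model first writes a cleaned prompt and then answers it. The function name and the example numbers are hypothetical, and the tokens of the rewrite instruction itself are ignored.

```python
def s2a_token_overhead(prompt_tokens, cleaned_tokens, answer_tokens):
    """Compare total tokens processed with and without a rewrite pass."""
    # Baseline: read the prompt once, write the answer.
    baseline = prompt_tokens + answer_tokens
    # Two-pass flow: read the prompt and write the cleaned version,
    # then read the cleaned version and write the answer.
    two_pass = (prompt_tokens + cleaned_tokens) + (cleaned_tokens + answer_tokens)
    return baseline, two_pass, two_pass - baseline

# Made-up example sizes: a 2000-token prompt cleaned down to 500 tokens.
baseline, two_pass, extra = s2a_token_overhead(2000, 500, 200)
print(baseline, two_pass, extra)  # 2200 3200 1000
```

Under these assumptions the overhead is twice the size of the cleaned prompt, so it grows with how much of a long prompt survives the rewrite.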

Using System 2 Attention for AI Solutions

Even without S2A built into a model, you can apply its core idea when writing your own prompts:

- Omit opinions and leading suggestions from your prompts to get more accurate responses from LLMs.

List of Useful Links:

- System 2 Attention improves accuracy of LLM responses

- DailyAI