The text discusses the growing significance of software in the landscape of Large Language Models (LLMs) and outlines emerging libraries and frameworks enhancing LLM performance. It emphasizes the critical challenge of reconciling software and hardware optimizations for LLMs and highlights specific software tools and libraries catering to LLM deployment. Emerging hardware and memory technologies are also mentioned as future discussion topics.

SW/HW Co-optimization Strategy for LLMs — Part 2 (Software)

Emerging Software Tools and Libraries for LLM Performance

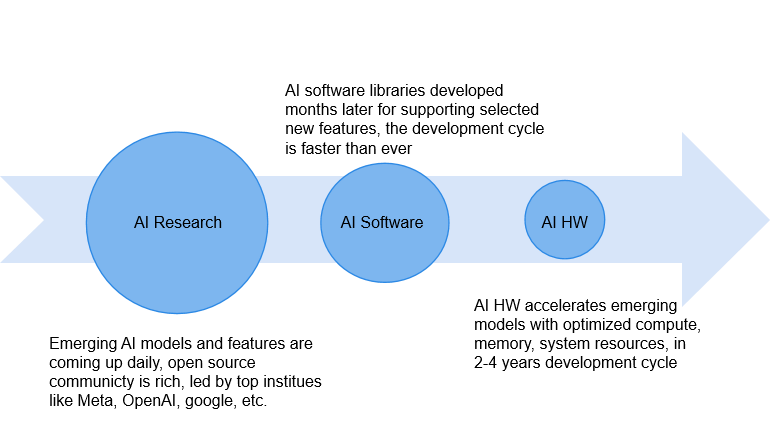

The software landscape for Large Language Models (LLMs) is evolving rapidly, with many new tools and libraries being released to improve their performance. As AI hardware continues to advance, the challenge is to optimize LLMs from a system perspective, bridging the gap between software and hardware. Our series aims to address this challenge and provide practical solutions for middle managers in the AI space.

Traditional AI Software Stack

Nvidia, AMD, and Intel each offer software platforms for AI inference: Nvidia's CUDA ecosystem, AMD's ROCm, and Intel's oneAPI, oneDNN, and OpenVINO. These stacks support AI models across each vendor's hardware platforms.

Optimizing LLMs on Conventional AI Software Stack

Enabling the fundamental functions and operators that LLMs need on the AI software stack is crucial. For instance, Nvidia's TensorRT supports optimizations for deep learning (DL) models, including layer and tensor fusion, kernel auto-tuning, and mixed precision for fast inference.
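To make the mixed-precision idea concrete, here is a minimal sketch in plain Python. It is not TensorRT's API: the helpers `round_to` and `dot` are hypothetical names introduced only for illustration, and the standard-library `struct` module is used to emulate FP16 and FP32 rounding. It shows why inputs are commonly kept in a low-precision format while the accumulator stays in a higher-precision one.

```python
import struct

def round_to(fmt, x):
    """Round a Python float to a narrower format ('e' = FP16, 'f' = FP32)."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

def dot(a, b, acc_fmt):
    """Dot product with FP16 inputs and an accumulator rounded to acc_fmt.

    Hypothetical helper for illustration only; real inference engines do
    this inside fused GPU kernels rather than in a Python loop.
    """
    acc = 0.0
    for x, y in zip(a, b):
        # Inputs are stored in FP16; their product is exact in a Python float.
        prod = round_to("e", x) * round_to("e", y)
        # The running sum is rounded to the accumulator's precision each step.
        acc = round_to(acc_fmt, acc + prod)
    return acc

a = [1.0] * 4096
b = [1.0] * 4096
fp16_acc = dot(a, b, "e")  # pure FP16: inputs and accumulator
mixed_acc = dot(a, b, "f")  # mixed precision: FP16 inputs, FP32 accumulator
print(fp16_acc, mixed_acc)
```

In this example the FP16 accumulator stops growing at 2048, because FP16 cannot represent 2049 and the sum rounds back down, while the FP32 accumulator reaches the correct value of 4096. The inputs still occupy half the memory of FP32 in both cases.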

LLM Acceleration Software Frameworks and Libraries

Several emerging open-source frameworks and libraries have been developed to accelerate LLM inference. They offer features such as continuous batching, model parallelism, and offloading strategies to make better use of memory and compute resources.
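As a rough illustration of why continuous batching helps, the sketch below counts decode steps for a toy workload under two scheduling policies. It is a simplified model under stated assumptions, not the scheduler of any particular framework: every request needs a known number of output tokens, each decode step produces one token per active request, and the function names are hypothetical.

```python
from collections import deque

def static_batching_steps(lengths, batch_size):
    """Decode steps when each batch runs until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        # Short requests sit idle in their slot until the longest one is done.
        steps += max(lengths[i:i + batch_size])
    return steps

def continuous_batching_steps(lengths, batch_size):
    """Decode steps when a finished request's slot is refilled immediately."""
    waiting = deque(lengths)
    running = []  # tokens still to generate for each active request
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots before the next step.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decode step generates one token for every active request.
        running = [r - 1 for r in running]
        # Drop finished requests so their slots free up.
        running = [r for r in running if r > 0]
        steps += 1
    return steps

lengths = [2, 8, 3, 8, 2, 2]  # output tokens per request (made-up workload)
print(static_batching_steps(lengths, 2))      # 18
print(continuous_batching_steps(lengths, 2))  # 13
```

For this made-up workload, static batching needs 18 decode steps while continuous batching needs 13, because slots freed by short requests are reused instead of idling. Real systems also have to account for prompt processing and memory limits, which this sketch ignores.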

Key Message

With LLMs and acceleration techniques advancing rapidly, organizations and developers must choose software that implements these techniques effectively and makes full use of the available AI hardware.
