Understanding Large Language Models (LLMs) and Knowledge Management

Large Language Models (LLMs) store a large amount of knowledge in their parameters, but that knowledge can be outdated or incorrect. Retrieval-based methods address this by supplying external information at inference time. A major challenge arises when the retrieved context conflicts with what the model has memorized: the model may rely on the wrong source and answer incorrectly. Existing solutions that require additional interactions with the model add latency, which makes them less practical for real-world use.

Key Directions in LLM Behavior Control

Researchers have explored various approaches to better understand and manage LLM behavior:

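- Representation Engineering: This framework analyzes and steers LLM behavior through the model's internal representations, but it can struggle with complex behaviors.

- Knowledge Conflicts: These fall into three main types: inter-context (retrieved passages disagree with each other), context-memory (the context disagrees with the model's parametric knowledge), and intra-memory (the model's parametric knowledge is itself inconsistent).

- Sparse Auto-Encoders (SAEs): These models decompose an LLM's internal activations into sparse, more interpretable features, which aids controlled text generation.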
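To make the SAE idea concrete, here is a minimal NumPy sketch of the encode and decode steps. It is an illustration under stated assumptions, not the SAEs used in the work described here: the weights are random rather than trained, the sizes `d` and `m` are arbitrary, and the function names `sae_encode` and `sae_decode` are hypothetical.

```python
import numpy as np

def sae_encode(h, W_enc, b_enc):
    """Map a hidden state h of shape (d,) to non-negative feature activations of shape (m,)."""
    return np.maximum(0.0, h @ W_enc + b_enc)  # ReLU keeps only positively activated features

def sae_decode(z, W_dec, b_dec):
    """Reconstruct an approximation of the hidden state from feature activations."""
    return z @ W_dec + b_dec

# Toy setup: random (untrained) weights, chosen only to show shapes and data flow.
rng = np.random.default_rng(0)
d, m = 8, 32                       # hidden size and (larger) dictionary size, both assumed
W_enc = rng.normal(size=(d, m)) / np.sqrt(d)
b_enc = np.full(m, -1.0)           # a negative bias pushes many features to exactly zero
W_dec = rng.normal(size=(m, d)) / np.sqrt(m)
b_dec = np.zeros(d)

h = rng.normal(size=d)             # stand-in for one LLM hidden state
z = sae_encode(h, W_enc, b_enc)    # feature activations
h_hat = sae_decode(z, W_dec, b_dec)

print("active features:", int((z > 0).sum()), "of", m)
```

In a trained SAE, the weights are learned so that `h_hat` closely reconstructs `h` while only a few entries of `z` are non-zero, which is what makes individual features easier to interpret.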

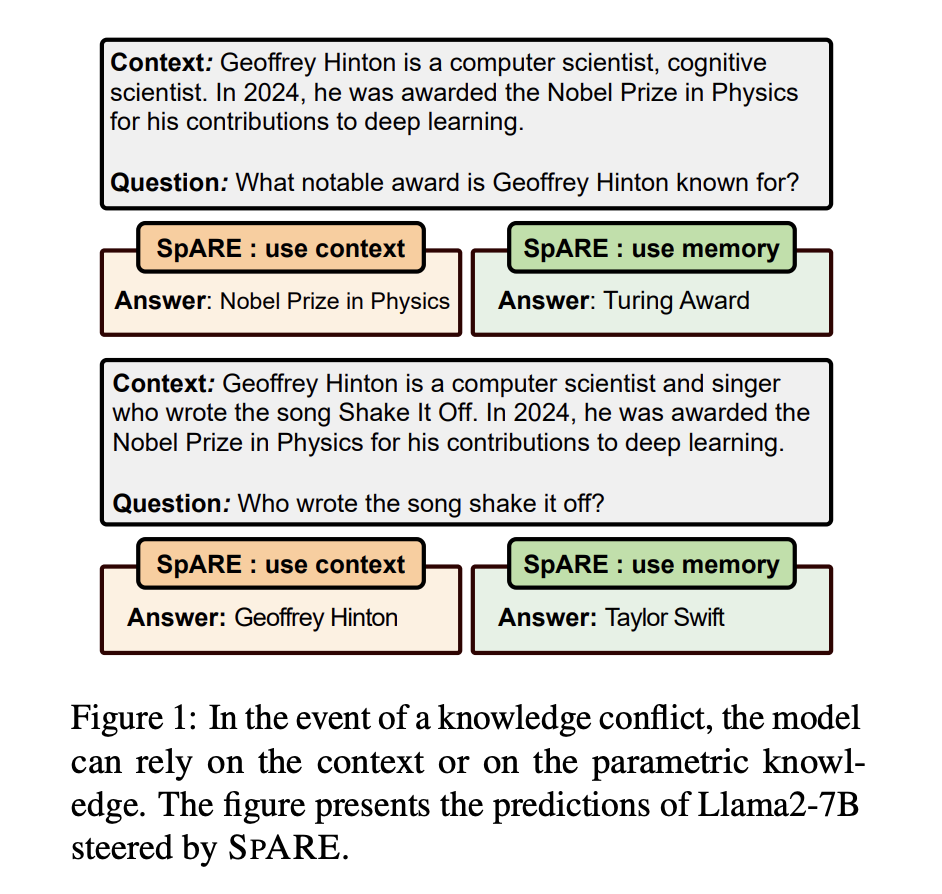

Introducing SPARE: A New Solution

Researchers from various universities have developed SPARE (Sparse Auto-Encoder-based Representation Engineering), a training-free method that steers which knowledge source an LLM relies on. SPARE resolves knowledge conflicts in question-answering tasks by pinpointing the SAE features that drive knowledge selection and using them to adjust the model's internal activations at inference time.
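The general idea of feature-based steering can be sketched as follows. This is a simplified illustration, not the authors' exact procedure: the function `steer`, the feature indices in `suppress` and `promote`, and the `target` activation level are all hypothetical, and the identity weights are chosen only so the arithmetic is easy to follow.

```python
import numpy as np

def steer(h, W_enc, b_enc, W_dec, suppress, promote, target):
    """Edit hidden state h by removing some SAE features and boosting others.

    suppress: indices of features tied to the unwanted behavior (assumed known)
    promote:  indices of features tied to the wanted behavior (assumed known)
    target:   activation level the promoted features should reach (assumed)
    """
    z = np.maximum(0.0, h @ W_enc + b_enc)       # current feature activations

    z_remove = np.zeros_like(z)
    z_remove[suppress] = z[suppress]             # everything these features contribute

    z_add = np.zeros_like(z)
    z_add[promote] = np.maximum(0.0, target - z[promote])  # top up to the target level

    # Apply the edit in hidden-state space through the decoder directions.
    return h - z_remove @ W_dec + z_add @ W_dec

# Toy example: identity encoder/decoder so each feature equals one hidden dimension.
d = 4
W_enc, b_enc, W_dec = np.eye(d), np.zeros(d), np.eye(d)
h = np.array([1.0, 2.0, 0.0, 0.5])

steered = steer(h, W_enc, b_enc, W_dec, suppress=[1], promote=[2], target=3.0)
print(steered.tolist())  # -> [1.0, 0.0, 3.0, 0.5]
```

Because the edit is a single vector update to an activation, it needs no gradient steps or extra model calls, which is consistent with the article's description of the method as training-free.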

Performance Benefits of SPARE

SPARE is reported to outperform previous representation engineering methods by 10% and contrastive decoding methods by 15%. It has been tested on multiple models (such as Llama3-8B and Gemma2-9B) using challenging datasets that feature knowledge conflicts. The results indicate that it can steer the model toward either contextual or parametric knowledge more reliably than these baselines.

Real-World Applications

SPARE is both more efficient and more effective than existing methods, making it a practical tool for managing knowledge conflicts. Because it works at inference time without retraining, it allows real-time control over LLM behavior, which matters for businesses that rely on AI technologies.

Conclusion

SPARE represents a significant advance in managing knowledge within LLMs. It improves knowledge selection accuracy while remaining efficient. It does have limitations: it relies on pre-trained SAEs and has so far focused on specific tasks. Even so, its potential for improving LLM applications is promising.

