Introducing Arctic Embed L 2.0 and M 2.0

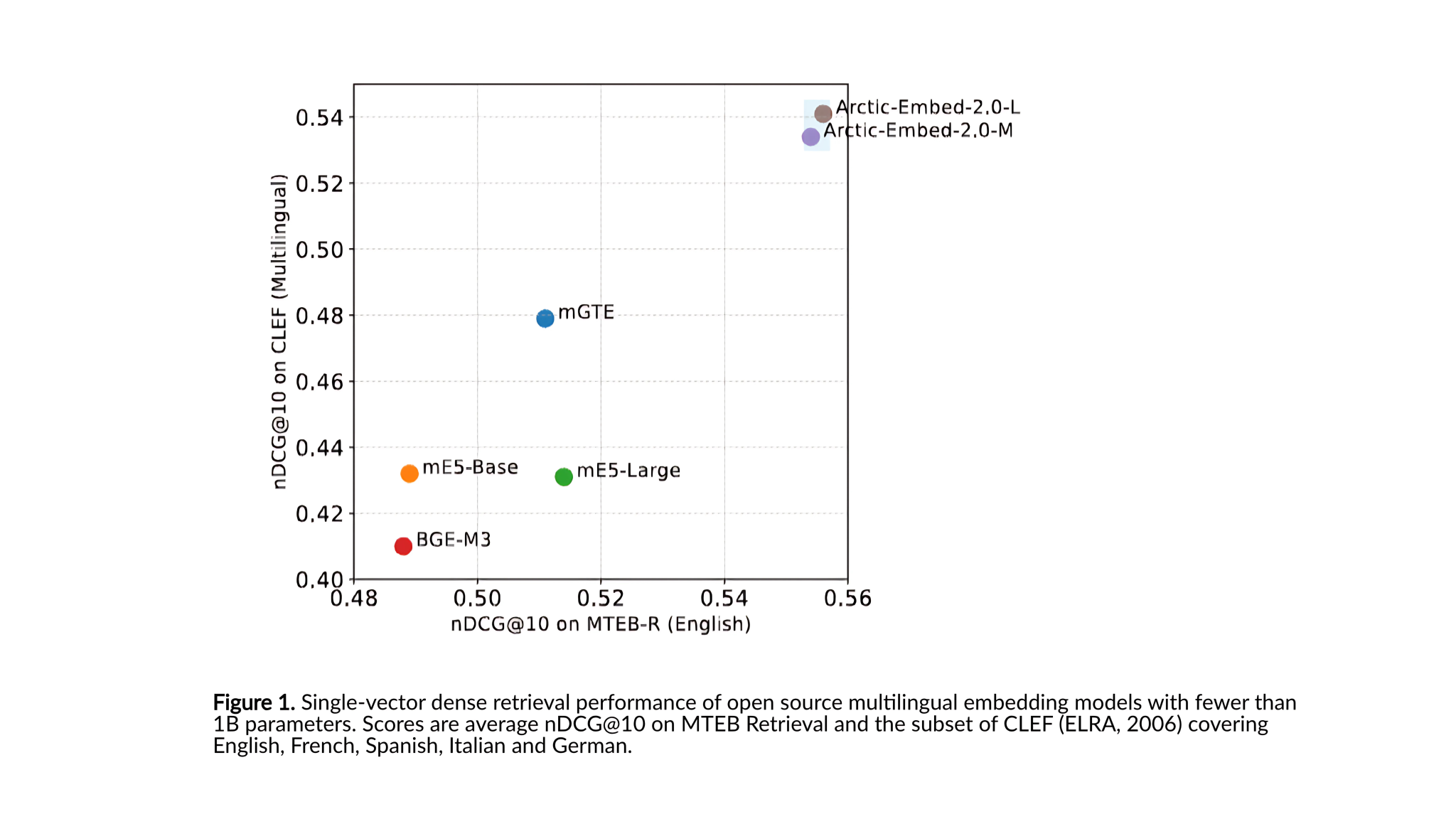

Snowflake has released two new text embedding models, Arctic Embed L 2.0 and Arctic Embed M 2.0, designed for multilingual search and retrieval.

Key Features

- Two Variants: A medium model (M) with 305 million parameters and a large model (L) with 568 million parameters.

- Long Context Support: Both models accept inputs of up to 8,192 tokens, enough to embed long documents in a single pass.

- Multilingual Strength: Strong retrieval quality across multiple languages, with English results that surpass some English-only models.

- Speed: The large model embeds over 100 documents per second and keeps query embedding latency low.

- Compact Size: Embeddings can be compressed to as little as 128 bytes per vector, making them efficient to store and search at large scale.
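
To make the 128-byte figure concrete, here is a minimal sketch of one way a vector could reach that size: keep the first 256 dimensions, renormalize, and store each value as a 4-bit code (256 × 4 bits = 128 bytes). The 256-dimension cut, the 4-bit codes, the clipping range, and the random 1,024-value input are all assumptions made for illustration; the article does not say which compression scheme the models use.

```python
import math
import random


def truncate_and_normalize(vec, dims):
    """Keep the first `dims` values and rescale them to unit length."""
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head)) or 1.0
    return [x / norm for x in head]


def quantize_4bit(vec, lo=-0.25, hi=0.25):
    """Clip each value to [lo, hi], map it to a code in 0..15, and pack two codes per byte.

    The clipping range is a hypothetical choice for this sketch.
    """
    codes = []
    for x in vec:
        clipped = min(max(x, lo), hi)
        codes.append(round((clipped - lo) / (hi - lo) * 15))
    if len(codes) % 2:
        codes.append(0)  # pad so codes pair up evenly
    return bytes((codes[i] << 4) | codes[i + 1] for i in range(0, len(codes), 2))


# Random stand-in for a model output; a real embedding would come from the model.
random.seed(0)
full = [random.gauss(0, 1) for _ in range(1024)]

small = truncate_and_normalize(full, 256)
packed = quantize_4bit(small)
print(len(packed))  # 128
```

For comparison, the same 1,024 values stored as 32-bit floats would take 4,096 bytes, so this sketch stores 32 times less per document.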

Practical Solutions and Value

Arctic Embed 2.0 models help businesses:

- Streamline Workflows: No need for separate models for different languages.

- Enhance Retrieval Quality: Achieve top-tier results in both English and multilingual contexts.

- Cost-Effective Deployment: The models run efficiently on affordable hardware.

- Scalable Retrieval: Ideal for handling large document repositories.
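
The single-model workflow above can be sketched as one index that holds documents in several languages and is searched by cosine similarity. This is an illustrative sketch only: the three-dimensional vectors and document IDs are made up, and in practice each vector would be produced by the embedding model rather than written by hand.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec, doc_vecs, k=2):
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)
    return [(doc_id, score) for score, doc_id in scored[:k]]


# Hypothetical toy vectors: English, German, and French documents share one index.
docs = {
    "en_refund_policy": [0.9, 0.1, 0.0],
    "de_rueckgabe": [0.8, 0.2, 0.1],
    "fr_livraison": [0.1, 0.9, 0.2],
}
query = [1.0, 0.0, 0.0]  # stand-in for an embedded query about refunds

results = top_k(query, docs, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

With these toy vectors, the English and German documents about returns rank above the French document about delivery, which is the behavior a shared multilingual embedding space is meant to provide. A production system would replace the linear scan with an approximate nearest-neighbor index.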

Empowering Organizations

Released under the Apache 2.0 license, these models can be modified and applied across various industries. This reflects Snowflake’s commitment to making AI accessible to all.

Next Steps for AI Integration

To evolve your company with AI:

- Identify Automation Opportunities: Find key areas for AI integration.

- Define KPIs: Measure the impact of your AI initiatives.

- Select an AI Solution: Choose customizable tools that fit your needs.

- Implement Gradually: Start small, gather data, and scale up wisely.


Conclusion

The Arctic Embed L 2.0 and M 2.0 models set a new standard for multilingual AI solutions, helping organizations tackle language challenges effectively.