Energy-Efficient AI Solutions with Slim-Llama

Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are key to advancements in artificial intelligence, especially in natural language processing. However, they demand substantial power and memory, which makes them hard to deploy in energy-constrained settings such as edge devices. The result is high operating costs and limited accessibility.

Current Limitations

Current methods to make LLMs more efficient run on general-purpose processors or GPUs and use techniques like weight quantization and sparsity optimizations. While these methods save some energy, they still depend heavily on external memory access, which wastes energy and adds latency that real-time applications cannot tolerate.

Introducing Slim-Llama

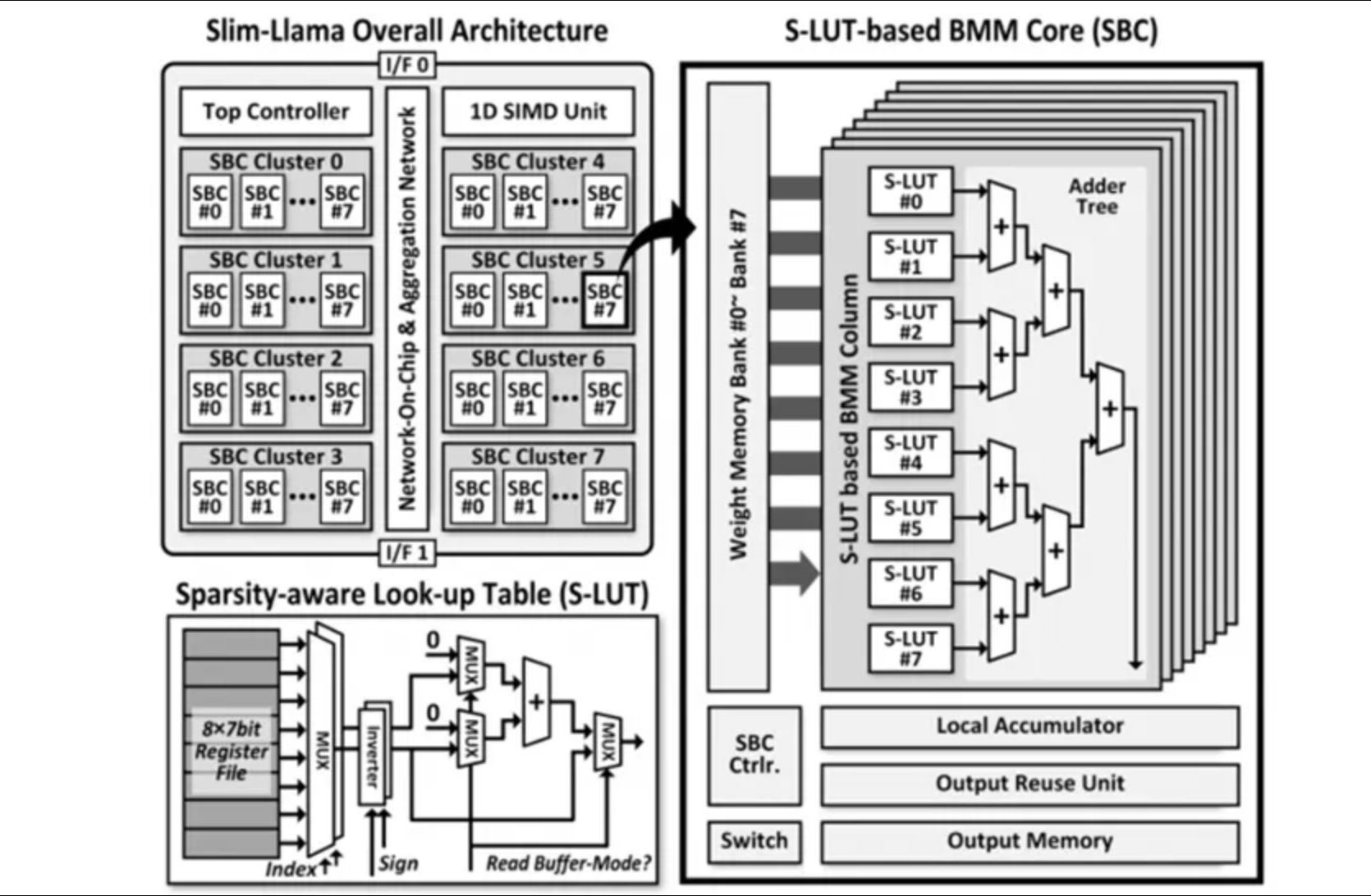

Researchers at KAIST have created Slim-Llama, an innovative Application-Specific Integrated Circuit (ASIC) that optimizes LLM deployment. Slim-Llama uses binary and ternary quantization to reduce model weight precision, cutting down on memory and computational needs while maintaining performance. It features a Sparsity-aware Look-up Table (SLT) for efficient data management and employs smart data flow techniques to further enhance efficiency.
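To make the idea of ternary quantization concrete, here is a minimal software sketch. It is not Slim-Llama's actual hardware pipeline: the threshold rule (0.7 times the mean absolute weight), the single shared scale factor, and the function names `ternarize` and `ternary_dot` are all assumptions chosen for illustration. It shows why low-precision weights are cheap: once weights are restricted to -1, 0, or +1, a dot product needs only additions and subtractions, and zero weights can be skipped entirely.

```python
def ternarize(weights, threshold_ratio=0.7):
    """Map float weights to codes in {-1, 0, +1} plus one shared scale.

    threshold_ratio is an illustrative assumption, not a value from the source.
    """
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = threshold_ratio * mean_abs
    # Small weights become 0 (sparsity); the rest keep only their sign.
    codes = [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    # The scale is the mean magnitude of the weights that were not zeroed.
    scale = sum(kept) / len(kept) if kept else 0.0
    return codes, scale


def ternary_dot(codes, scale, activations):
    """Dot product using only adds and subtracts; zero codes are skipped."""
    acc = 0.0
    for c, x in zip(codes, activations):
        if c == 1:
            acc += x
        elif c == -1:
            acc -= x
    # One multiplication at the end applies the shared scale.
    return scale * acc


weights = [0.9, -0.05, -1.1, 0.02, 0.6, -0.7]
activations = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

codes, scale = ternarize(weights)
approx = ternary_dot(codes, scale, activations)
exact = sum(w * x for w, x in zip(weights, activations))

print("codes: ", codes)
print("scale: ", scale)
print("approx:", approx, "exact:", exact)
```

The approximate result differs from the exact one, which is the accuracy cost that quantization trades for lower memory and compute. Binary quantization follows the same pattern with codes restricted to -1 and +1.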

Key Features of Slim-Llama

– Compact design using Samsung’s 28nm CMOS technology.

– 500KB of on-chip SRAM eliminates reliance on external memory, reducing energy waste.

– Supports bandwidth of up to 1.6GB/s at 200MHz for smooth data management.

– Achieves a low latency of 489 milliseconds with the Llama 1-bit model and supports up to 3 billion parameters.
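A quick back-of-the-envelope calculation helps put these figures in perspective. The sketch below assumes decimal units (1 GB = 10^9 bytes) and a simple 2-bits-per-weight encoding for ternary values; both are assumptions for illustration, and the source does not say how Slim-Llama actually packs its weights. The helper names are hypothetical.

```python
def weight_footprint_bytes(num_params, bits_per_weight):
    """Storage needed for the weights alone, ignoring any metadata."""
    return num_params * bits_per_weight / 8


def bytes_per_cycle(bandwidth_bytes_per_s, clock_hz):
    """Average bytes moved per clock cycle at a given bandwidth."""
    return bandwidth_bytes_per_s / clock_hz


PARAMS = 3_000_000_000  # 3 billion parameters, the stated upper limit

fp16 = weight_footprint_bytes(PARAMS, 16)    # 16-bit baseline
ternary = weight_footprint_bytes(PARAMS, 2)  # assumed 2-bit ternary encoding
binary = weight_footprint_bytes(PARAMS, 1)   # 1-bit weights

per_cycle = bytes_per_cycle(1.6e9, 200e6)    # 1.6GB/s at 200MHz

print(f"16-bit weights: {fp16 / 1e9:.3f} GB")
print(f"ternary (2-bit): {ternary / 1e9:.3f} GB")
print(f"binary (1-bit): {binary / 1e9:.3f} GB")
print(f"bytes per cycle: {per_cycle}")
```

Under these assumptions, 1-bit weights take one sixteenth of the space of a 16-bit model, and 1.6GB/s at 200MHz works out to 8 bytes (64 bits) per clock cycle.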

Performance Highlights

Slim-Llama demonstrates exceptional energy efficiency, achieving a 4.59x improvement over previous solutions. Its power consumption ranges from just 4.69mW to 82.07mW, with a peak performance of 4.92 TOPS at 1.31 TOPS/W. This makes it ideal for real-time applications that require both speed and efficiency.
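As a rough illustration of what these power figures mean per inference, energy is simply power multiplied by time. The sketch below combines the reported power range with the 489 ms latency quoted earlier. This pairing is an assumption: the source does not state which power level corresponds to that latency, so the two results should be read only as bounds on the energy for one such run.

```python
def energy_joules(power_watts, latency_seconds):
    """Energy consumed when drawing constant power for a given time."""
    return power_watts * latency_seconds


LATENCY_S = 0.489      # 489 ms, from the Llama 1-bit figure
POWER_MIN_W = 4.69e-3  # 4.69 mW
POWER_MAX_W = 82.07e-3 # 82.07 mW

# Assumption: constant power draw over the whole latency window.
low = energy_joules(POWER_MIN_W, LATENCY_S)
high = energy_joules(POWER_MAX_W, LATENCY_S)

print(f"lower bound: {low * 1e3:.2f} mJ")
print(f"upper bound: {high * 1e3:.2f} mJ")
```

Under that assumption, a single run costs somewhere between roughly 2.3 mJ and 40.1 mJ.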

Transforming AI Deployment

Slim-Llama addresses the energy challenges of deploying large-scale AI models. It combines advanced quantization techniques and efficient data flow management, setting a new standard for energy-efficient AI hardware. This innovation not only enhances the deployment of billion-parameter models but also promotes more accessible and environmentally friendly AI solutions.
