Understanding Large Language Models (LLMs) and GUI Automation

Large Language Models (LLMs) are powerful tools for building intelligent agents that can carry out complex, multi-step tasks. As more everyday activity moves onto digital platforms, these models can act as smart interfaces between people and software. GUI automation, an emerging field, develops such agents to operate graphical user interfaces on a user's behalf and simplify their workflows. This is a significant step forward in how we interact with computers, allowing digital tasks to be carried out more precisely and efficiently.

Challenges with Early GUI Automation

Early efforts in GUI automation relied on text-based agents built on closed-source LLMs such as GPT-4. These methods depended on text-rich inputs such as HTML, which limited their effectiveness: people perceive interfaces visually, and in many cases only a screenshot is available, with no underlying structural information. The main challenge is therefore to improve how models perceive and interact with graphical user interfaces directly.

Computational Challenges

Training models for GUI automation faces several hurdles. High-resolution screenshots translate into long sequences of visual tokens, and many existing models process every token at full cost even when large parts of the screen are blank or repetitive, which wastes computing resources. Managing the interplay between vision, language, and actions adds further complexity, especially because the available actions differ across devices (for example, clicking on a web page versus swiping on a phone).
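To make the cost of resolution concrete, here is a small worked sketch that counts patch tokens for a few common screen sizes and the number of token pairs that full self-attention must score. The 28-pixel patch size and the edge-padding rule are assumptions chosen for illustration, not ShowUI's exact preprocessing.

```python
import math

def visual_token_count(width: int, height: int, patch: int = 28) -> int:
    # Assumed tiling: non-overlapping patch x patch squares, with partial
    # patches at the right/bottom edges padded up to a full patch.
    return math.ceil(width / patch) * math.ceil(height / patch)

def attention_pairs(num_tokens: int) -> int:
    # Full self-attention scores every token against every token,
    # so the work grows with the square of the sequence length.
    return num_tokens ** 2

for w, h in [(1280, 720), (1920, 1080), (2560, 1440)]:
    n = visual_token_count(w, h)
    print(f"{w}x{h}: {n:,} visual tokens, {attention_pairs(n):,} attention pairs")
```

Under these assumptions, going from 1280x720 to 2560x1440 multiplies the token count by exactly four (1,196 to 4,784) and the attention work by sixteen, which is why skipping redundant tokens pays off quickly.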

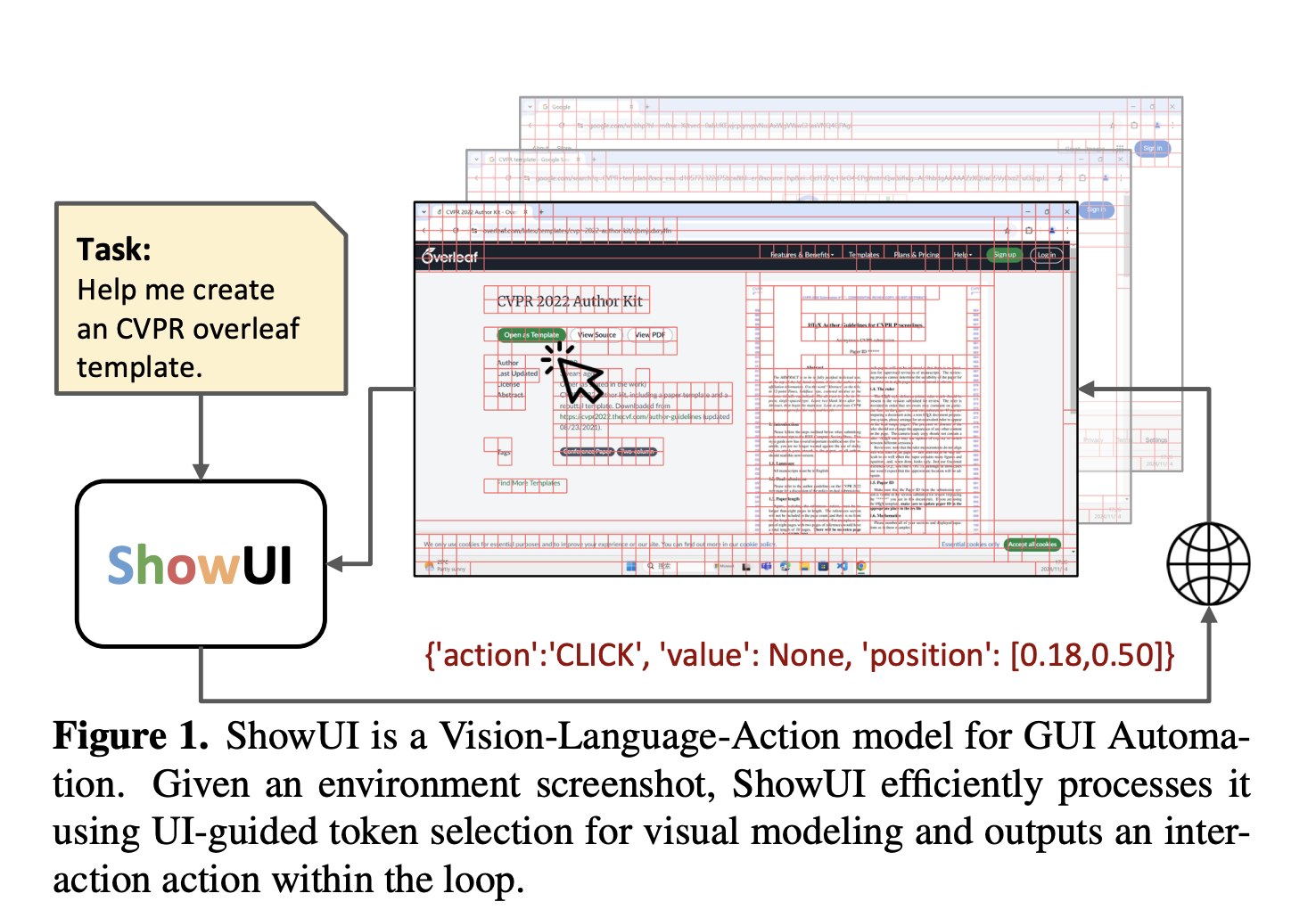

Introducing ShowUI: A Solution for GUI Automation

Researchers from Show Lab at the National University of Singapore and from Microsoft have developed ShowUI, a vision-language-action model designed to tackle these challenges in GUI automation. It uses three innovative techniques:

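- UI-Guided Visual Token Selection: This method reduces processing costs by representing a screenshot as a graph of image patches in which neighboring patches with the same colors are connected. Each connected region, such as a blank background, is largely redundant, so many of its tokens can be skipped while distinctive elements like text and icons are kept intact.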

- Interleaved Vision-Language-Action Streaming: This technique interleaves screenshots, text, and actions in a single sequence, so the model can keep a flexible history of past screens and actions and adapt to the actions available on each device.

- GUI Instructional Tuning: This approach curates a small, high-quality instruction-following dataset and rebalances the mix of data types to improve the model’s performance.
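The graph-building step of UI-Guided Visual Token Selection can be sketched with a union-find over image patches. This is a minimal sketch under stated assumptions: the function names are hypothetical, and two 4-neighboring patches are treated as connected only when their pixels are identical, which may be stricter or looser than ShowUI's actual rule.

```python
import numpy as np

def _find(parent: list, i: int) -> int:
    # Follow parent links to the root, halving the path along the way.
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def _union(parent: list, a: int, b: int) -> None:
    ra, rb = _find(parent, a), _find(parent, b)
    if ra != rb:
        parent[rb] = ra

def ui_connected_components(image: np.ndarray, patch: int) -> np.ndarray:
    """Label every patch of an H x W x C screenshot with a component id.

    Assumption: 4-neighboring patches are connected when their pixels are
    identical, so a uniform background collapses into one component.
    Rows/columns that do not fill a whole patch are ignored.
    """
    rows, cols = image.shape[0] // patch, image.shape[1] // patch
    grid = (image[: rows * patch, : cols * patch]
            .reshape(rows, patch, cols, patch, -1)
            .transpose(0, 2, 1, 3, 4))  # -> rows x cols x patch x patch x C
    parent = list(range(rows * cols))
    for r in range(rows):
        for c in range(cols):
            idx = r * cols + c
            if c + 1 < cols and np.array_equal(grid[r, c], grid[r, c + 1]):
                _union(parent, idx, idx + 1)       # merge with right neighbor
            if r + 1 < rows and np.array_equal(grid[r, c], grid[r + 1, c]):
                _union(parent, idx, idx + cols)    # merge with lower neighbor
    roots = [_find(parent, i) for i in range(rows * cols)]
    _, labels = np.unique(roots, return_inverse=True)  # relabel as 0..k-1
    return labels.reshape(rows, cols)

# Toy screenshot: a white 56 x 56 canvas (4 x 4 patches of 14 px) with one red patch.
canvas = np.full((56, 56, 3), 255, dtype=np.uint8)
canvas[14:28, 14:28] = (255, 0, 0)
labels = ui_connected_components(canvas, patch=14)
print(labels.max() + 1, "components for", labels.size, "patches")  # 2 components for 16 patches
```

The 15 white patches form one large, redundant component, while the red "button" stays on its own; a selection step can then thin out the large component and leave the small one untouched.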

Benefits of ShowUI’s Techniques

UI-Guided Visual Token Selection significantly improves efficiency by reducing the number of visual tokens the model must process while maintaining accuracy. For example, it can shrink a screenshot's visual token sequence from 1,296 tokens to as few as 291.
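Once each patch has a component id, a selection step decides which tokens to keep. In the example above, 291 of 1,296 tokens remain, roughly 22%. The sketch below uses one plausible rule, assumed here and not necessarily ShowUI's exact one: each component keeps a random `ceil(size * keep_ratio)` of its tokens. `select_tokens` and `keep_ratio` are hypothetical names.

```python
import math
import numpy as np

def select_tokens(labels: np.ndarray, keep_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Choose which patch tokens to keep, given a component id per patch.

    Assumption: each component keeps ceil(size * keep_ratio) randomly chosen
    tokens, so every component keeps at least one and single-patch components
    (such as an isolated icon) are always kept. Returned indices are sorted so
    the kept tokens retain their original positions in the sequence.
    """
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    flat = labels.ravel()
    kept = []
    for comp in np.unique(flat):
        members = np.flatnonzero(flat == comp)       # token indices in this component
        k = math.ceil(members.size * keep_ratio)
        kept.extend(rng.choice(members, size=k, replace=False).tolist())
    return np.sort(np.array(kept))

labels = np.zeros((4, 4), dtype=int)  # one large uniform region...
labels[1, 1] = 1                      # ...and one distinct patch
keep = select_tokens(labels, keep_ratio=0.25, rng=np.random.default_rng(0))
print(len(keep), "of", labels.size, "tokens kept")  # 5 of 16: ceil(15 * 0.25) + 1
```

Selecting a subset of tokens, rather than merging them into new averaged tokens, lets each surviving token keep its original position index, which matters for locating UI elements.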

Interleaved Vision-Language-Action Streaming improves navigation by expressing actions in a standardized format across platforms, which makes actions easier to predict and multi-step interactions easier to manage.
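A standardized action format plus an interleaved history might look like the following sketch. The JSON schema, the per-device action lists in `ACTION_SPACES`, and the `build_stream` helper are all hypothetical illustrations, not ShowUI's published interface; the idea shown is that every device shares one action record, while only the most recent screenshots are kept as images to bound the visual token budget.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional, Tuple

# Hypothetical per-device action spaces; a real system would define its own.
ACTION_SPACES = {
    "web": ["CLICK", "INPUT", "SELECT", "HOVER", "SCROLL", "ANSWER"],
    "mobile": ["CLICK", "INPUT", "SWIPE", "PRESS_BACK", "PRESS_HOME", "ANSWER"],
}

@dataclass
class Action:
    action: str                             # must come from the device's action space
    value: Optional[str] = None             # typed text, swipe direction, answer, ...
    position: Optional[List[float]] = None  # [x, y] normalized to the 0..1 range

    def to_json(self) -> str:
        return json.dumps(asdict(self))

def build_stream(task: str, device: str, history: List[Tuple[str, Action]],
                 current_screenshot: str, max_history_images: int = 1) -> list:
    """Interleave task text, past screenshots, past actions and the current screenshot."""
    allowed = ACTION_SPACES[device]
    stream = [{"type": "text",
               "content": f"Task: {task}\nAllowed actions: {', '.join(allowed)}"}]
    first_image = len(history) - max_history_images  # older steps keep only their action text
    for i, (shot, act) in enumerate(history):
        if act.action not in allowed:
            raise ValueError(f"{act.action} is not valid on {device}")
        if i >= first_image:
            stream.append({"type": "image", "content": shot})
        stream.append({"type": "text", "content": act.to_json()})
    stream.append({"type": "image", "content": current_screenshot})
    return stream  # the model would be asked to emit the next Action as JSON

history = [
    ("step0.png", Action("CLICK", position=[0.52, 0.08])),
    ("step1.png", Action("INPUT", value="wireless headphones")),
    ("step2.png", Action("SWIPE", value="up")),
]
stream = build_stream("Find wireless headphones", "mobile", history, "step3.png")
for item in stream:
    print(item["type"], "|", item["content"])
```

Because every platform emits the same record shape, a single model head can predict actions on web and mobile alike; only the list of allowed actions in the prompt changes.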

GUI Instructional Tuning trains the model on diverse and relevant data, improving its ability to understand and execute tasks in various environments.
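Diverse data only helps if no single source drowns out the rest. One common way to keep a mix balanced is source-balanced resampling, sketched below; the article does not spell out ShowUI's recipe, so the function name, source names, and toy counts here are hypothetical.

```python
import random
from collections import Counter

def balanced_sample(datasets: dict, num_samples: int, seed: int = 0) -> list:
    """Draw (source, example) pairs with every non-empty source equally likely.

    Picking a source first and then an example within it oversamples small
    sources and undersamples large ones, so no single data type dominates.
    """
    rng = random.Random(seed)
    sources = [name for name, items in datasets.items() if items]
    samples = []
    for _ in range(num_samples):
        name = rng.choice(sources)
        samples.append((name, rng.choice(datasets[name])))
    return samples

# Toy, deliberately imbalanced pool: 900 web, 90 mobile, 10 desktop examples.
pool = {"web": ["w"] * 900, "mobile": ["m"] * 90, "desktop": ["d"] * 10}
counts = Counter(name for name, _ in balanced_sample(pool, 3000))
print(counts)  # each source appears roughly 1,000 times
```

Uniform-over-sources is the simplest choice; weighting sources by quality or task importance is an equally valid variation.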

ShowUI’s Performance and Future Potential

In evaluations, ShowUI delivers promising results, particularly on mobile navigation tasks, where it achieved a 1.7% gain in accuracy. Its ability to learn from diverse GUI data sets it apart from models trained on narrower data.

ShowUI represents a major step forward in creating visual agents that interact with digital interfaces more like humans do. Its techniques improve efficiency and handle complex interactions, and it performs strongly despite being a lightweight model with 2B parameters.

Explore AI Solutions for Your Business

If you’re looking to enhance your company with AI, consider how GUI agents such as ShowUI could help streamline your operations:

- Identify Automation Opportunities: Find customer interaction points that could benefit from AI.

- Define KPIs: Make sure your AI projects have measurable impacts on your business.

- Select an AI Solution: Choose tools that fit your needs and can be customized.

- Implement Gradually: Start small, gather data, and expand your AI usage wisely.

For advice on AI KPI management, contact us at hello@itinai.com. For ongoing insights into leveraging AI, follow us on Telegram or @itinaicom.

Discover how AI can transform your sales processes and customer engagement at itinai.com.